Slot-consistent NLG for Task-oriented Dialogue Systems with Iterative Rectification Network

Yangming Li, Kaisheng Yao, Libo Qin, Wanxiang Che, Xiaolong Li, Ting Liu

Dialogue and Interactive Systems Long Paper

Session 1A: Jul 6 (05:00-06:00 GMT)

Session 3B: Jul 6 (13:00-14:00 GMT)

Abstract:

Data-driven approaches using neural networks have achieved promising performance in natural language generation (NLG). However, neural generators are prone to making mistakes, e.g., neglecting an input slot value or generating a redundant slot value. Prior work refers to this as the hallucination phenomenon. In this paper, we study slot consistency for building reliable NLG systems in which all slot values of the input dialogue act (DA) are properly generated in the output sentences. We propose the Iterative Rectification Network (IRN) for improving general NLG systems to produce both correct and fluent responses. It applies a bootstrapping algorithm to sample training candidates and uses reinforcement learning to incorporate a discrete reward related to slot inconsistency into training. Comprehensive studies have been conducted on multiple benchmark datasets, showing that the proposed methods significantly reduce the slot error rate (ERR) for all strong baselines. Human evaluations have also confirmed its effectiveness.
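To make the slot error rate concrete, here is a minimal sketch assuming the definition commonly used in this line of work: ERR = (p + q) / N, where p is the number of missing slots, q the number of redundant slots, and N the number of slots in the input DA. The function name `slot_error_rate` and the representation of slots as plain lists of slot names are hypothetical choices for illustration, not taken from the paper's code.

```python
from collections import Counter
from typing import Iterable


def slot_error_rate(da_slots: Iterable[str], generated_slots: Iterable[str]) -> float:
    """Return (missing + redundant) / N for one dialogue act.

    da_slots:        slot names required by the input dialogue act (assumed format)
    generated_slots: slot names actually realized in the generated sentence
    """
    expected = Counter(da_slots)
    produced = Counter(generated_slots)

    # Counter subtraction keeps only positive counts, so each difference
    # counts the slots present on one side but not matched on the other.
    missing = sum((expected - produced).values())
    redundant = sum((produced - expected).values())

    total = sum(expected.values())
    if total == 0:
        raise ValueError("the dialogue act must contain at least one slot")
    return (missing + redundant) / total


# The DA asks for name, area and food; the output drops "area"
# and realizes "food" twice: one missing slot plus one redundant slot.
err = slot_error_rate(["name", "area", "food"], ["name", "food", "food"])
print(f"ERR = {err:.3f}")
```

A slot-consistent output, in the sense of the abstract, is one for which this value is zero: every input slot is realized exactly once and nothing extra is generated.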

Similar Papers

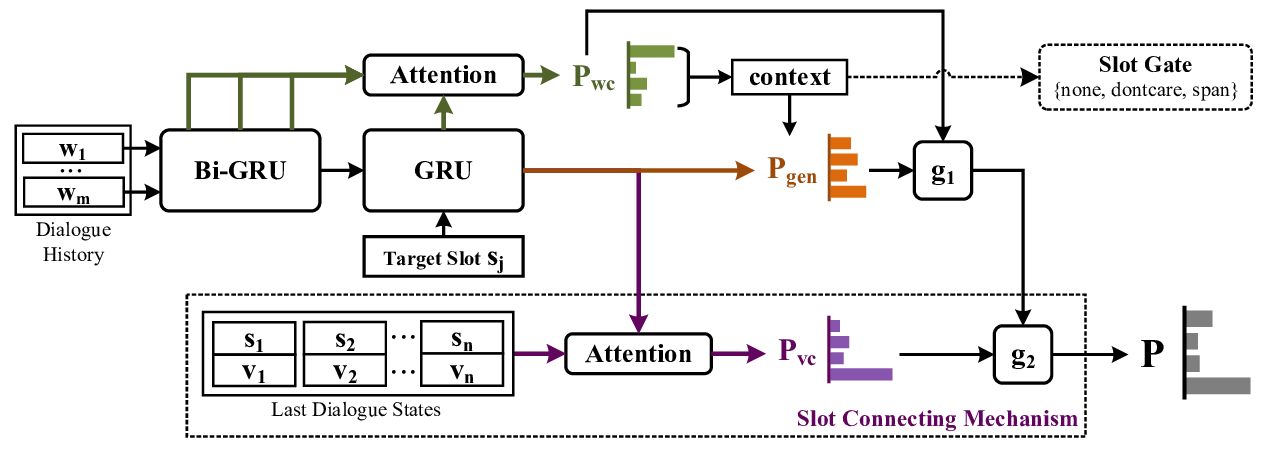

Dialogue State Tracking with Explicit Slot Connection Modeling

Yawen Ouyang, Moxin Chen, Xinyu Dai, Yinggong Zhao, Shujian Huang, Jiajun Chen

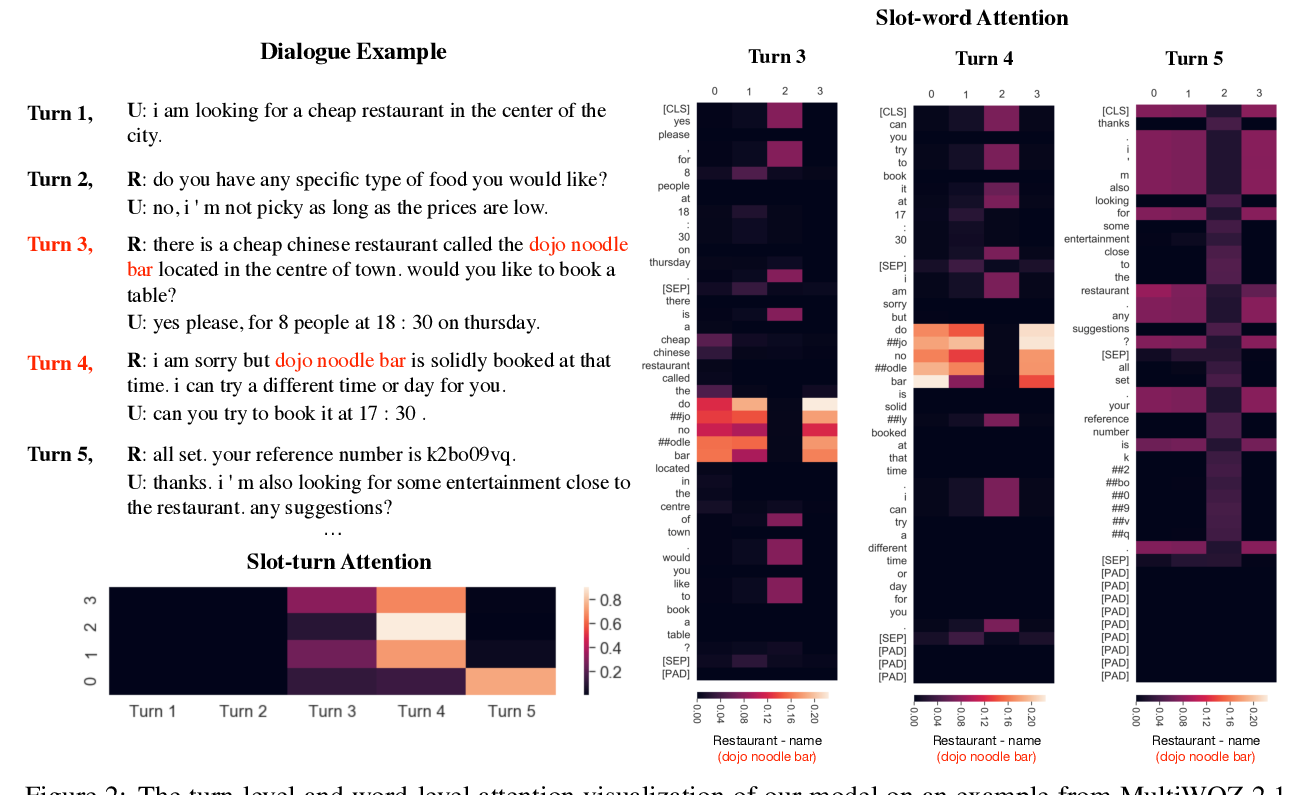

A Contextual Hierarchical Attention Network with Adaptive Objective for Dialogue State Tracking

Yong Shan, Zekang Li, Jinchao Zhang, Fandong Meng, Yang Feng, Cheng Niu, Jie Zhou

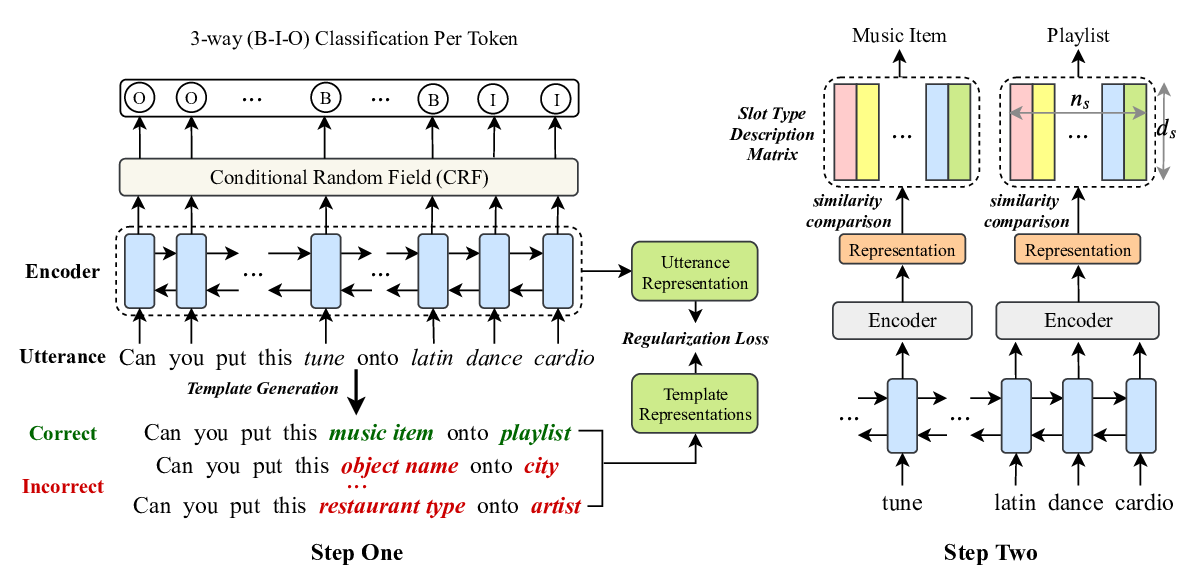

Coach: A Coarse-to-Fine Approach for Cross-domain Slot Filling

Zihan Liu, Genta Indra Winata, Peng Xu, Pascale Fung

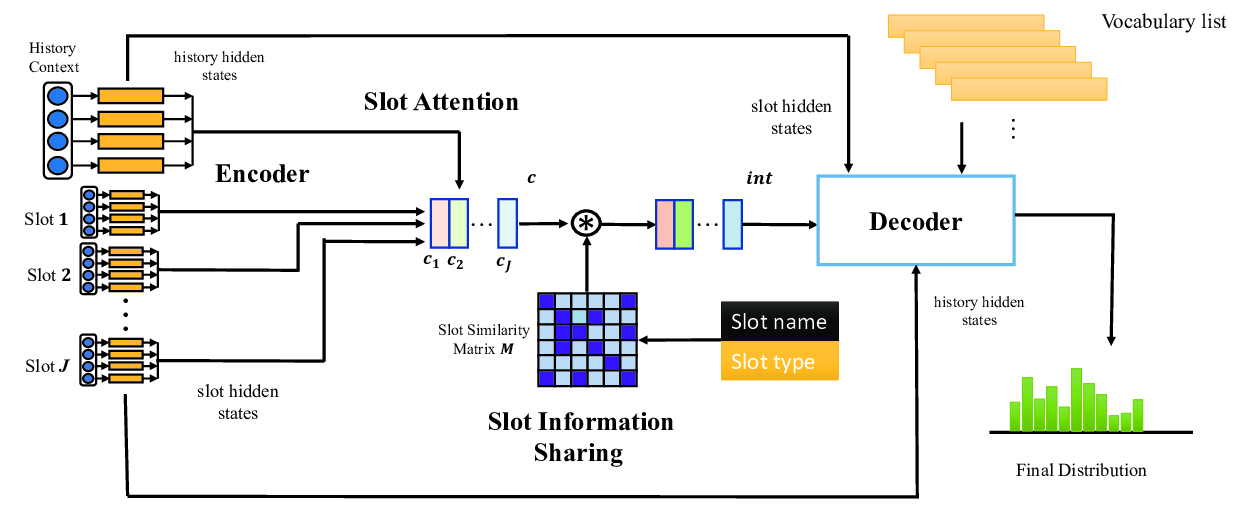

SAS: Dialogue State Tracking via Slot Attention and Slot Information Sharing

Jiaying Hu, Yan Yang, Chencai Chen, Liang He, Zhou Yu