BENTO: A Visual Platform for Building Clinical NLP Pipelines Based on CodaLab

Yonghao Jin, Fei Li, Hong Yu

System Demonstrations Demo Paper

Demo Session 4B-1: Jul 6 (17:45-18:45 GMT)

Demo Session 4B-2: Jul 7 (17:45-18:45 GMT)

Abstract:

CodaLab is an open-source web-based platform for collaborative computational research. Although CodaLab has gained popularity in the research community, its interface has limited support for creating reusable tools that can be easily applied to new datasets and composed into pipelines. In the clinical domain, natural language processing (NLP) on medical notes generally involves multiple steps, such as tokenization and named entity recognition. Because these steps require different tools that are usually scattered across different publications, it is not easy for researchers to use them to process their own datasets. In this paper, we present BENTO, a workflow management platform with a graphical user interface (GUI) that is built on top of CodaLab to facilitate the process of building clinical NLP pipelines. BENTO comes with a number of clinical NLP tools that have been pre-trained using medical notes and expert annotations and can be readily used for various clinical NLP tasks. It also allows researchers and developers to create their own custom tools (e.g., pre-trained NLP models) and use them in a controlled and reproducible way. In addition, the GUI enables researchers with limited computing background to compose tools into NLP pipelines and then apply the pipelines to their own datasets in a "what you see is what you get" (WYSIWYG) way. Although BENTO is designed for clinical NLP applications, the underlying architecture is flexible enough to be tailored to other domains.
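As a rough illustration of what composing tools into a pipeline means, the following minimal Python sketch chains a tokenization step and a toy named entity recognition step. It is not BENTO's or CodaLab's API: `compose`, `tokenize`, `tag_entities`, and `TOY_LEXICON` are hypothetical names introduced here, and the dictionary lookup merely stands in for a pre-trained clinical NER model.

```python
import re
from functools import reduce
from typing import Any, Callable, Dict, List

# A pipeline step is any function that maps one input to one output.
Step = Callable[[Any], Any]


def compose(*steps: Step) -> Step:
    """Chain steps left to right so each output feeds the next step."""
    return lambda data: reduce(lambda acc, step: step(acc), steps, data)


def tokenize(note: str) -> List[str]:
    """Split a note into lowercase word tokens (hypothetical first step)."""
    return re.findall(r"\w+", note.lower())


# Toy lexicon standing in for a trained model; purely an assumption.
TOY_LEXICON: Dict[str, str] = {"aspirin": "DRUG", "headache": "SYMPTOM"}


def tag_entities(tokens: List[str]) -> List[Dict[str, str]]:
    """Label tokens found in the toy lexicon (hypothetical second step)."""
    return [
        {"text": tok, "label": TOY_LEXICON[tok]}
        for tok in tokens
        if tok in TOY_LEXICON
    ]


# Compose the two tools into one pipeline and apply it to a new note.
pipeline = compose(tokenize, tag_entities)
entities = pipeline("Patient reports headache; started aspirin.")
print(entities)
```

Because every step shares the same one-input, one-output shape, a step can be swapped or a new one appended without touching the others, which is the property a visual pipeline builder relies on.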


Similar Papers

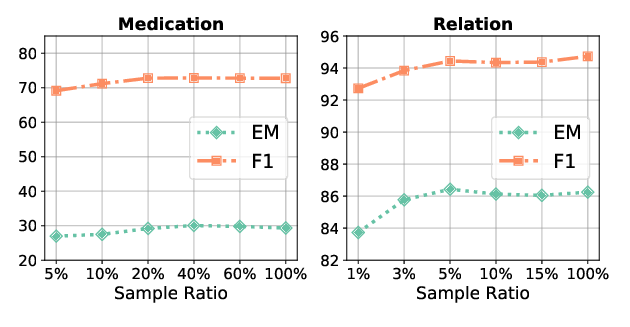

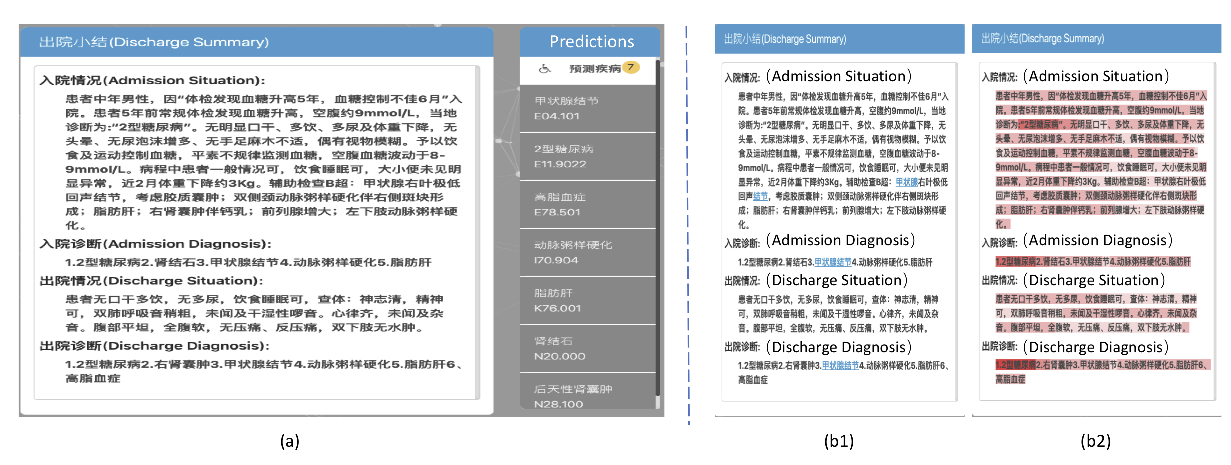

Clinical-Coder: Assigning Interpretable ICD-10 Codes to Chinese Clinical Notes

Pengfei Cao, Chenwei Yan, Xiangling Fu, Yubo Chen, Kang Liu, Jun Zhao, Shengping Liu, Weifeng Chong,

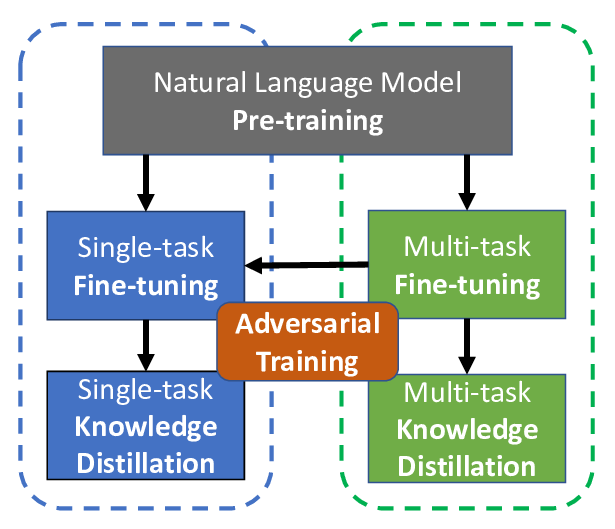

The Microsoft Toolkit of Multi-Task Deep Neural Networks for Natural Language Understanding

Xiaodong Liu, Yu Wang, Jianshu Ji, Hao Cheng, Xueyun Zhu, Emmanuel Awa, Pengcheng He, Weizhu Chen, Hoifung Poon, Guihong Cao, Jianfeng Gao,

Code-Switching Patterns Can Be an Effective Route to Improve Performance of Downstream NLP Applications: A Case Study of Humour, Sarcasm and Hate Speech Detection

Srijan Bansal, Vishal Garimella, Ayush Suhane, Jasabanta Patro, Animesh Mukherjee,

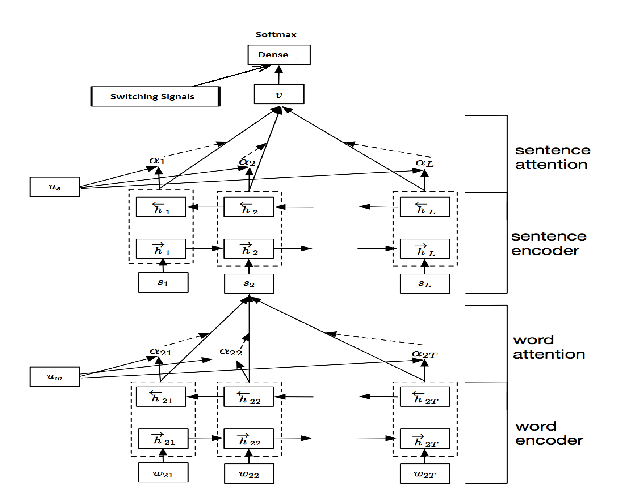

Clinical Reading Comprehension: A Thorough Analysis of the emrQA Dataset

Xiang Yue, Bernal Jimenez Gutierrez, Huan Sun,