The SOFC-Exp Corpus and Neural Approaches to Information Extraction in the Materials Science Domain

Annemarie Friedrich, Heike Adel, Federico Tomazic, Johannes Hingerl, Renou Benteau, Anika Marusczyk, Lukas Lange

Resources and Evaluation Long Paper

Session 2A: Jul 6 (08:00-09:00 GMT)

Session 3A: Jul 6 (12:00-13:00 GMT)

Abstract:

This paper presents a new, challenging information extraction task in the domain of materials science. We develop an annotation scheme for marking information on experiments related to solid oxide fuel cells in scientific publications, such as the materials involved and the measurement conditions. With this paper, we publish our annotation guidelines, as well as our SOFC-Exp corpus consisting of 45 open-access scholarly articles annotated by domain experts. A corpus study and an inter-annotator agreement study demonstrate the complexity of the suggested named entity recognition and slot filling tasks as well as high annotation quality. We also present strong neural-network-based models for a variety of tasks that can be addressed on the basis of our new data set. On all tasks, using BERT embeddings leads to large performance gains, but with increasing task complexity, adding a recurrent neural network on top seems beneficial. Our models will serve as competitive baselines in future work, and analysis of their performance highlights difficult cases when modeling the data and suggests promising research directions.

Similar Papers

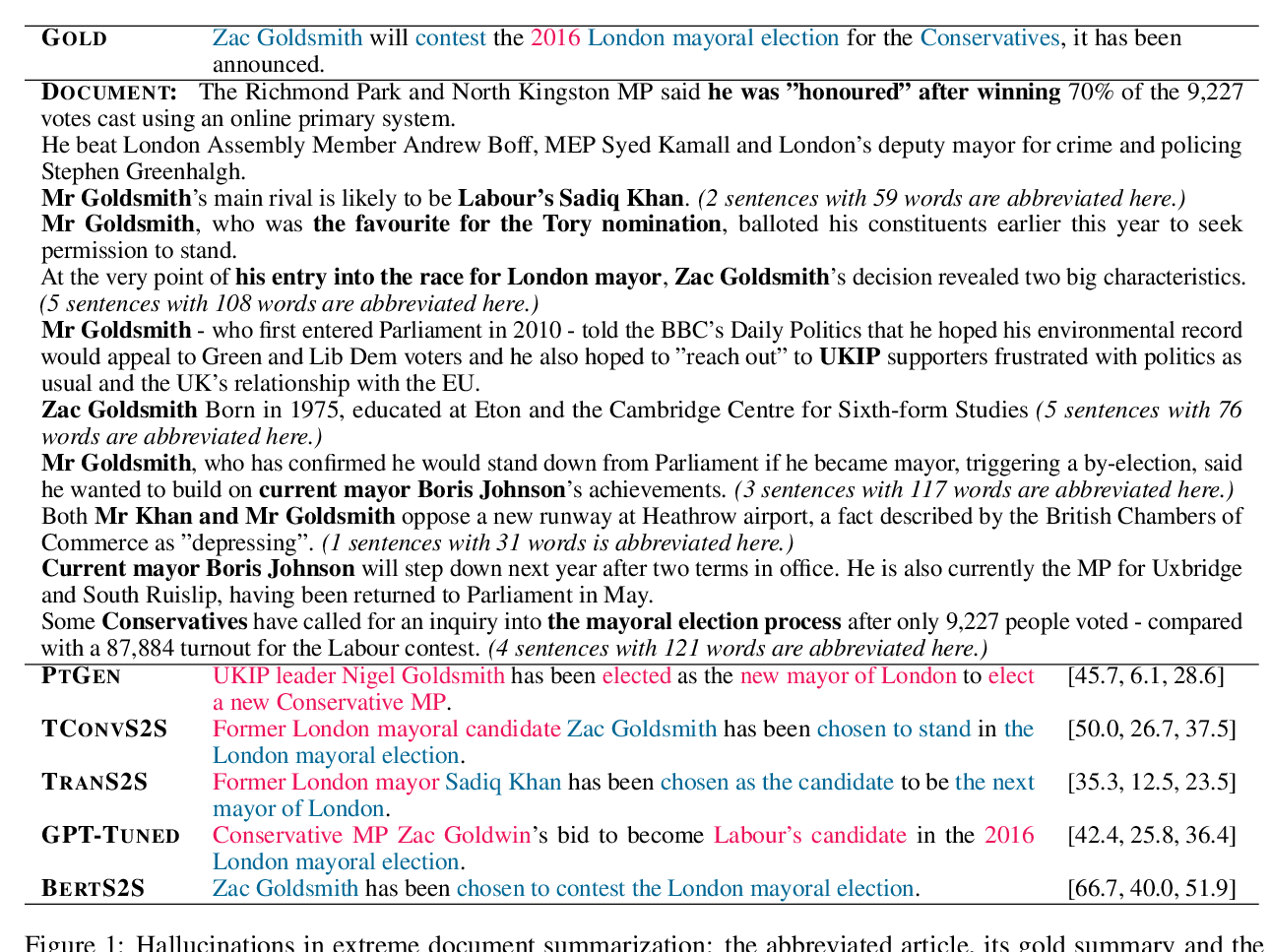

On Faithfulness and Factuality in Abstractive Summarization

Joshua Maynez, Shashi Narayan, Bernd Bohnet, Ryan McDonald,

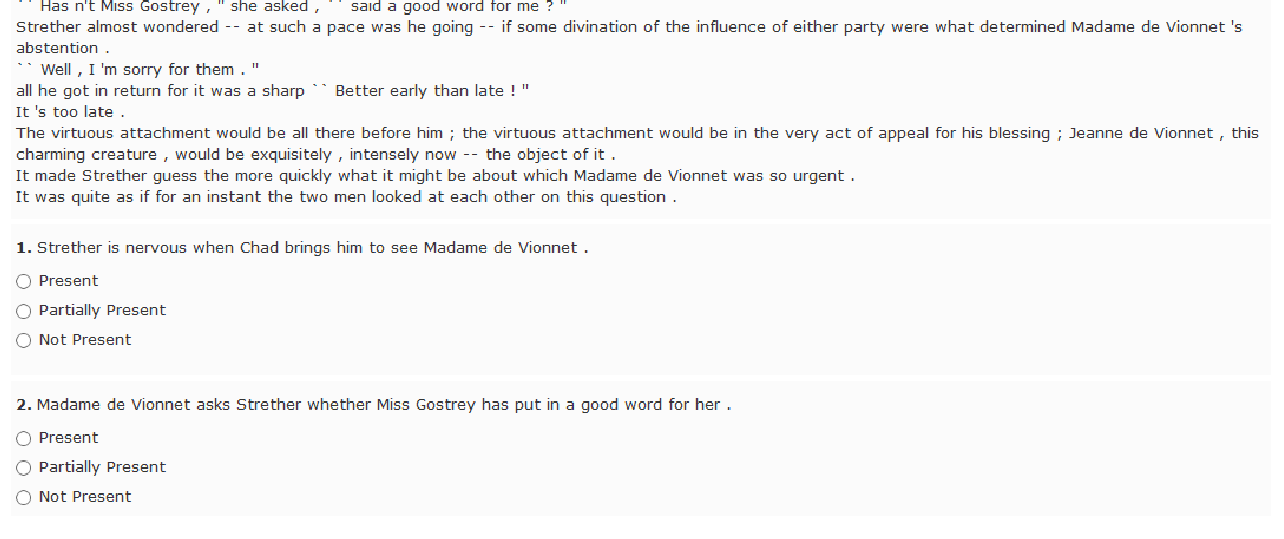

Exploring Content Selection in Summarization of Novel Chapters

Faisal Ladhak, Bryan Li, Yaser Al-Onaizan, Kathy McKeown,

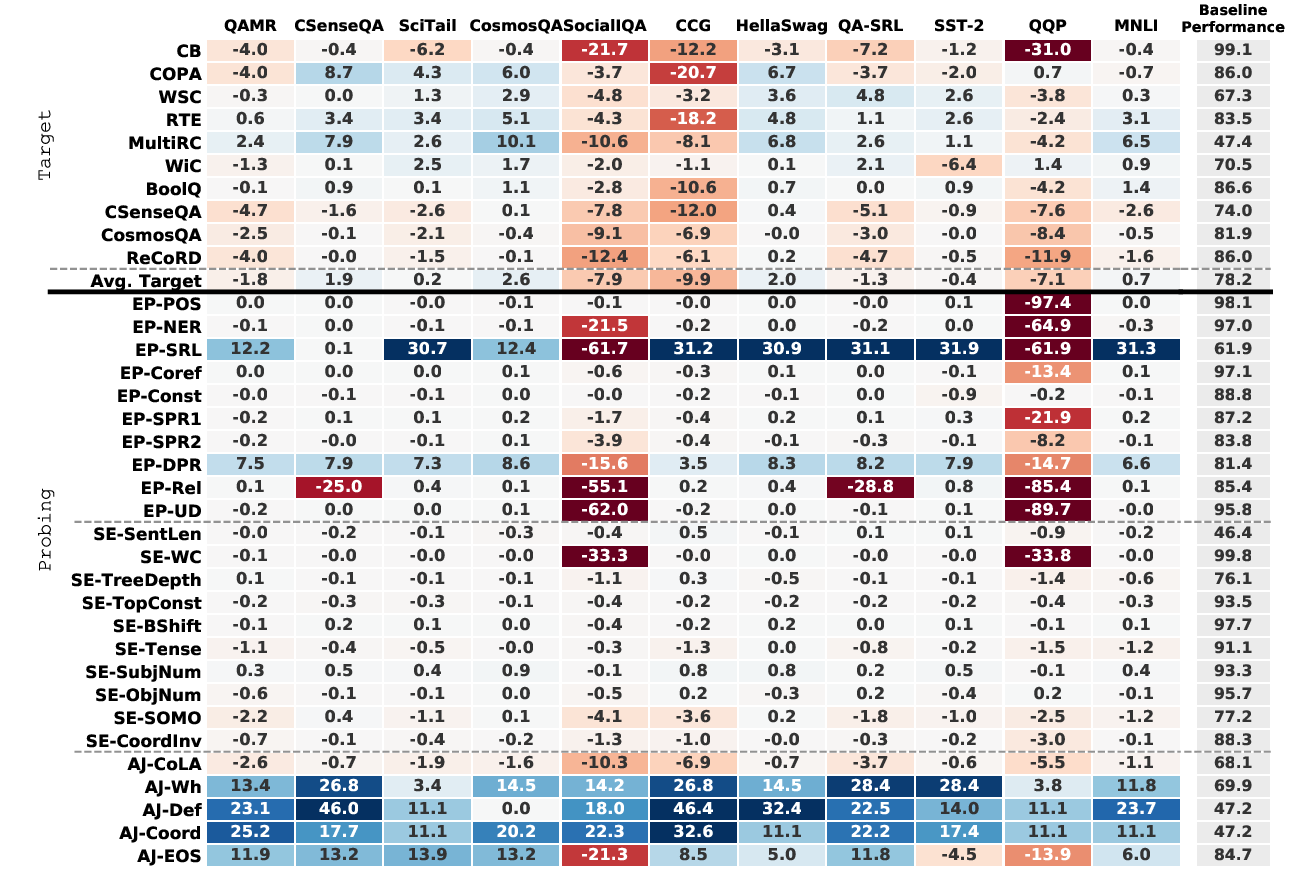

Intermediate-Task Transfer Learning with Pretrained Language Models: When and Why Does It Work?

Yada Pruksachatkun, Jason Phang, Haokun Liu, Phu Mon Htut, Xiaoyi Zhang, Richard Yuanzhe Pang, Clara Vania, Katharina Kann, Samuel R. Bowman,

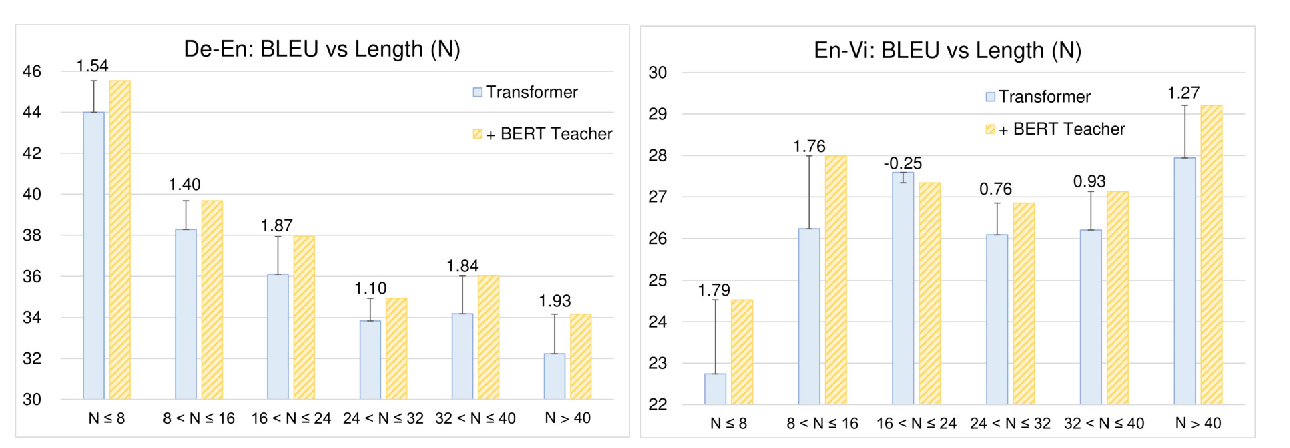

Distilling Knowledge Learned in BERT for Text Generation

Yen-Chun Chen, Zhe Gan, Yu Cheng, Jingzhou Liu, Jingjing Liu,