On Importance Sampling-Based Evaluation of Latent Language Models

Robert L Logan IV, Matt Gardner, Sameer Singh

Machine Learning for NLP Short Paper

Session 4A: Jul 6

(17:00-18:00 GMT)

Session 5A: Jul 6

(20:00-21:00 GMT)

Abstract:

Language models that use additional latent structures (e.g., syntax trees, coreference chains, knowledge graph links) provide several advantages over traditional language models. However, likelihood-based evaluation of these models is often intractable as it requires marginalizing over the latent space. Existing works avoid this issue by using importance sampling. Although this approach has asymptotic guarantees, analysis is rarely conducted on the effect of decisions such as sample size and choice of proposal distribution on the reported estimates. In this paper, we carry out this analysis for three models: RNNG, EntityNLM, and KGLM. In addition, we elucidate subtle differences in how importance sampling is applied in these works that can have substantial effects on the final estimates, as well as provide theoretical results which reinforce the validity of this technique.

You can open the

pre-recorded video

in a separate window.

NOTE: The SlidesLive video may display a random order of the authors.

The correct author list is shown at the top of this webpage.

Similar Papers

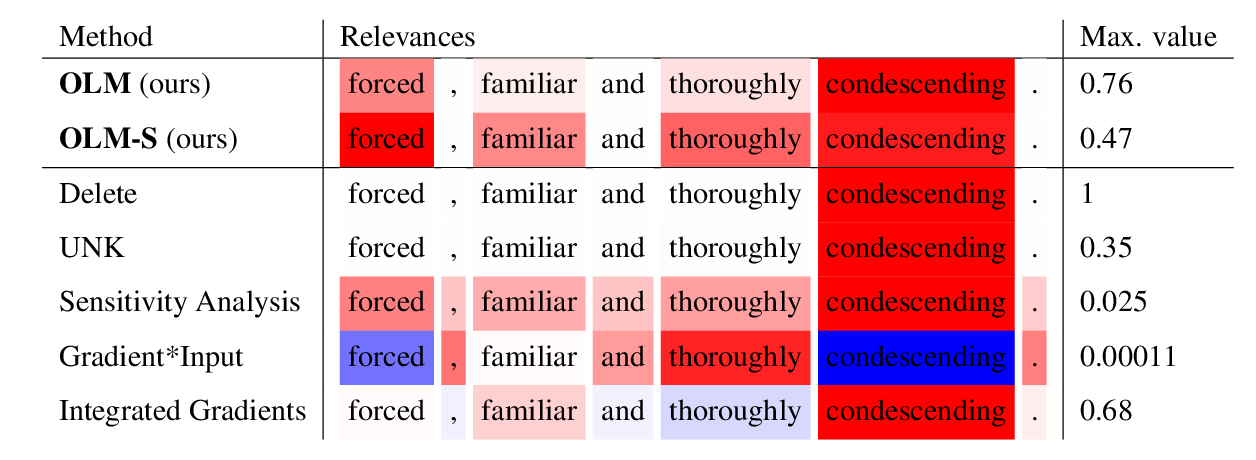

Considering Likelihood in NLP Classification Explanations with Occlusion and Language Modeling

David Harbecke, Christoph Alt,

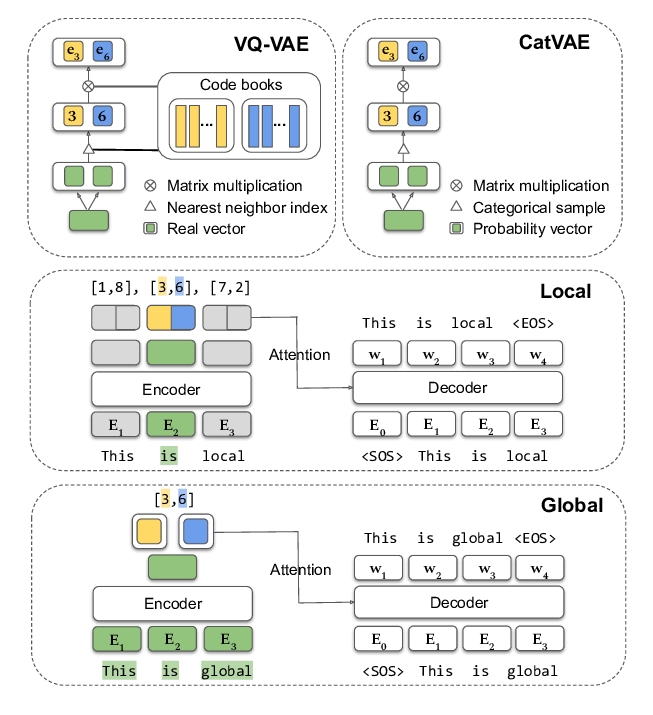

Discrete Latent Variable Representations for Low-Resource Text Classification

Shuning Jin, Sam Wiseman, Karl Stratos, Karen Livescu,

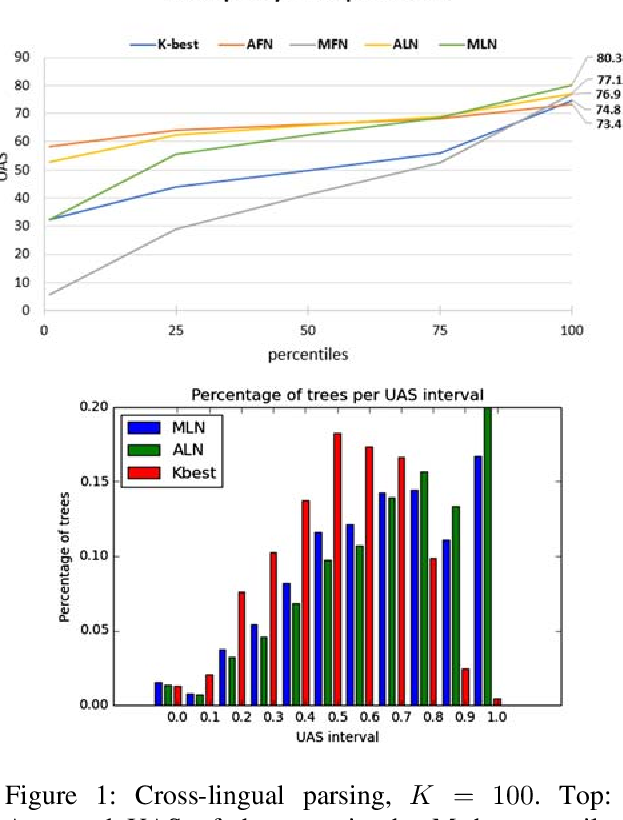

Perturbation Based Learning for Structured NLP tasks with Application to Dependency Parsing

Amichay Doitch, Ram Yazdi, Tamir Hazan, Roi Reichart,

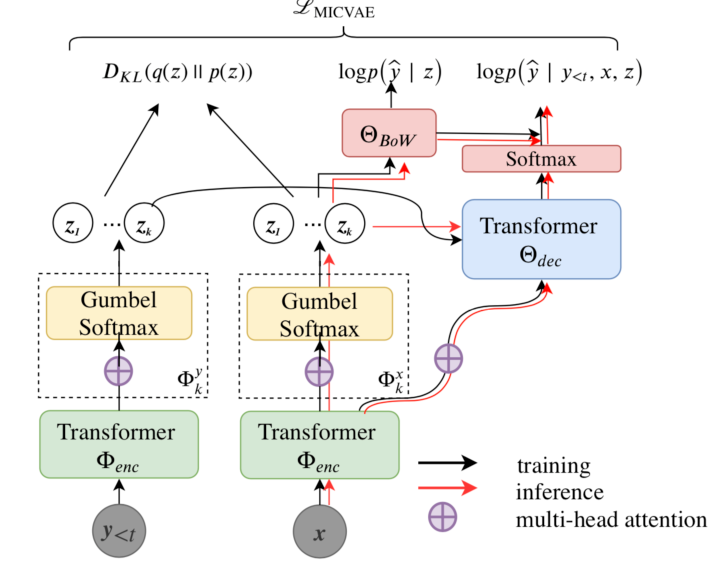

Addressing Posterior Collapse with Mutual Information for Improved Variational Neural Machine Translation

Arya D. McCarthy, Xian Li, Jiatao Gu, Ning Dong,