A Girl Has A Name: Detecting Authorship Obfuscation

Asad Mahmood, Zubair Shafiq, Padmini Srinivasan

NLP Applications Long Paper

Session 4A: Jul 6

(17:00-18:00 GMT)

Session 5B: Jul 6

(21:00-22:00 GMT)

Abstract:

Authorship attribution aims to identify the author of a text based on the stylometric analysis. Authorship obfuscation, on the other hand, aims to protect against authorship attribution by modifying a text’s style. In this paper, we evaluate the stealthiness of state-of-the-art authorship obfuscation methods under an adversarial threat model. An obfuscator is stealthy to the extent an adversary finds it challenging to detect whether or not a text modified by the obfuscator is obfuscated – a decision that is key to the adversary interested in authorship attribution. We show that the existing authorship obfuscation methods are not stealthy as their obfuscated texts can be identified with an average F1 score of 0.87. The reason for the lack of stealthiness is that these obfuscators degrade text smoothness, as ascertained by neural language models, in a detectable manner. Our results highlight the need to develop stealthy authorship obfuscation methods that can better protect the identity of an author seeking anonymity.

You can open the

pre-recorded video

in a separate window.

NOTE: The SlidesLive video may display a random order of the authors.

The correct author list is shown at the top of this webpage.

Similar Papers

A Geometry-Inspired Attack for Generating Natural Language Adversarial Examples

Zhao Meng, Roger Wattenhofer,

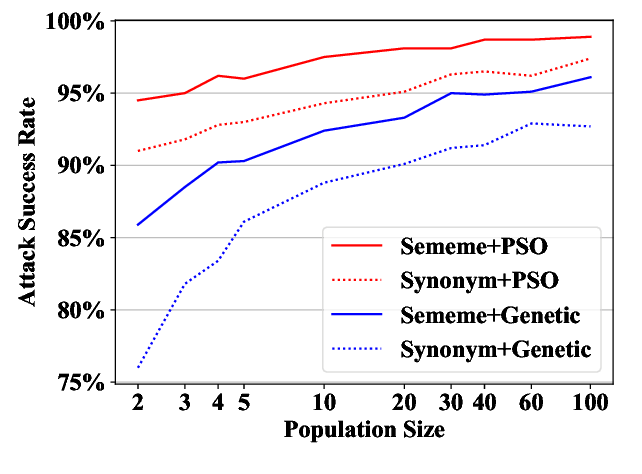

Word-level Textual Adversarial Attacking as Combinatorial Optimization

Yuan Zang, Fanchao Qi, Chenghao Yang, Zhiyuan Liu, Meng Zhang, Qun Liu, Maosong Sun,

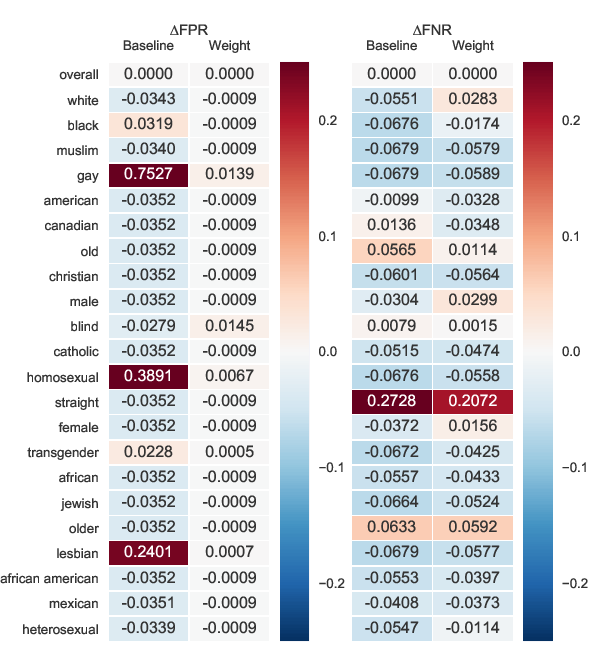

Demographics Should Not Be the Reason of Toxicity: Mitigating Discrimination in Text Classifications with Instance Weighting

Guanhua Zhang, Bing Bai, Junqi Zhang, Kun Bai, Conghui Zhu, Tiejun Zhao,

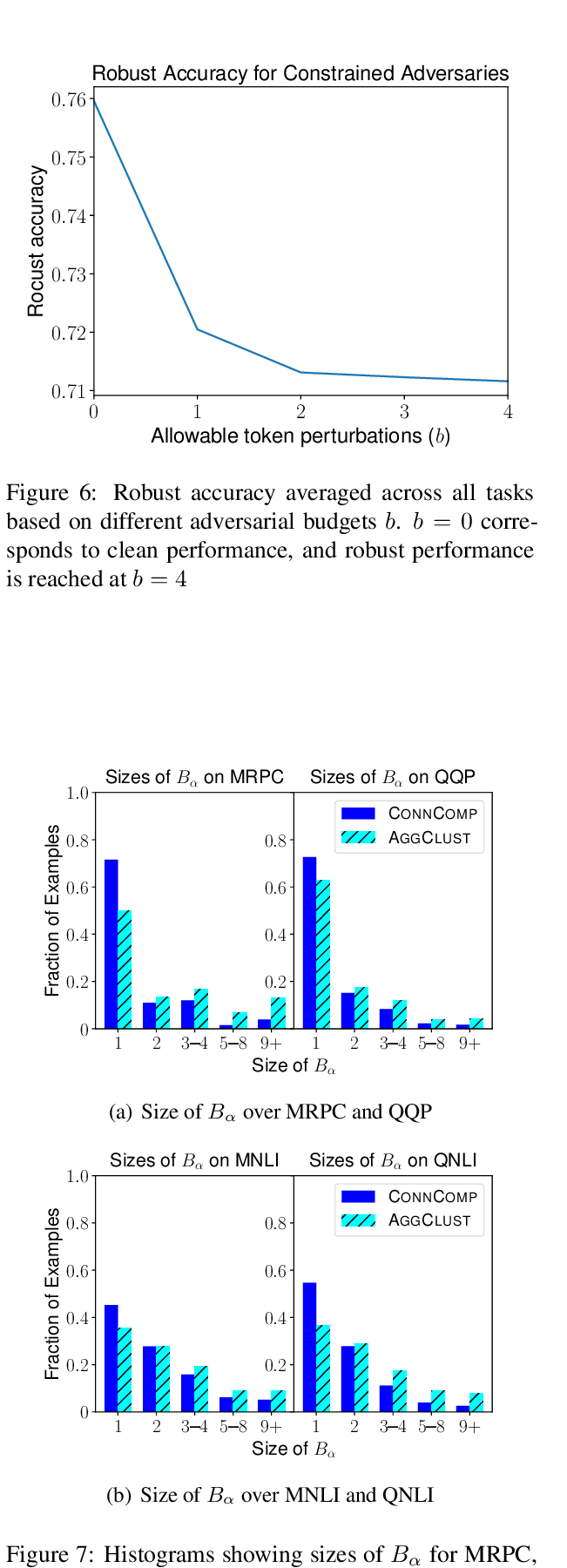

Robust Encodings: A Framework for Combating Adversarial Typos

Erik Jones, Robin Jia, Aditi Raghunathan, Percy Liang,