Meta-Transfer Learning for Code-Switched Speech Recognition

Genta Indra Winata, Samuel Cahyawijaya, Zhaojiang Lin, Zihan Liu, Peng Xu, Pascale Fung

Speech and Multimodality Short Paper

Session 6B: Jul 7

(06:00-07:00 GMT)

Session 8B: Jul 7

(13:00-14:00 GMT)

Abstract:

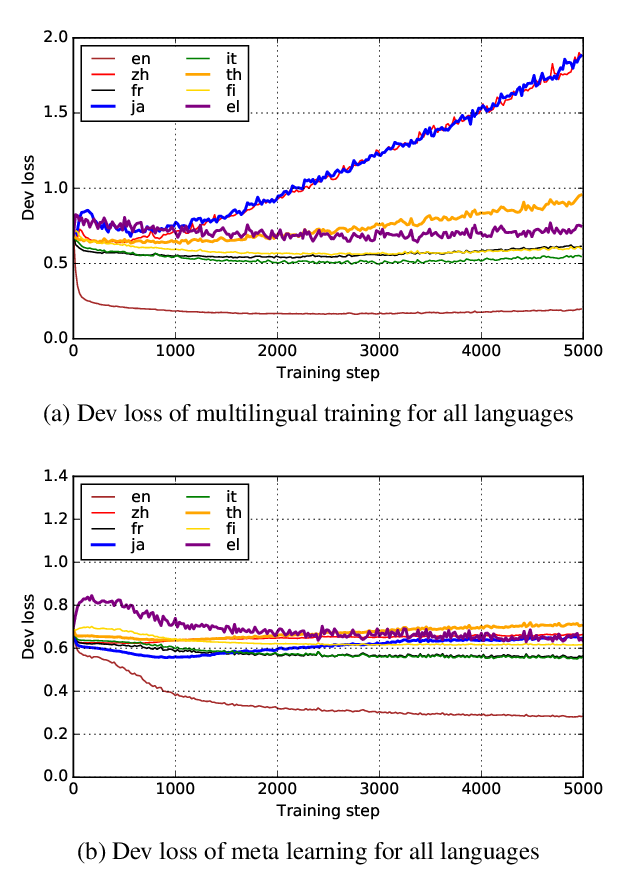

An increasing number of people in the world today speak a mixed-language as a result of being multilingual. However, building a speech recognition system for code-switching remains difficult due to the availability of limited resources and the expense and significant effort required to collect mixed-language data. We therefore propose a new learning method, meta-transfer learning, to transfer learn on a code-switched speech recognition system in a low-resource setting by judiciously extracting information from high-resource monolingual datasets. Our model learns to recognize individual languages, and transfer them so as to better recognize mixed-language speech by conditioning the optimization on the code-switching data. Based on experimental results, our model outperforms existing baselines on speech recognition and language modeling tasks, and is faster to converge.

You can open the

pre-recorded video

in a separate window.

NOTE: The SlidesLive video may display a random order of the authors.

The correct author list is shown at the top of this webpage.

Similar Papers

GLUECoS: An Evaluation Benchmark for Code-Switched NLP

Simran Khanuja, Sandipan Dandapat, Anirudh Srinivasan, Sunayana Sitaram, Monojit Choudhury,

From English to Code-Switching: Transfer Learning with Strong Morphological Clues

Gustavo Aguilar, Thamar Solorio,

Hypernymy Detection for Low-Resource Languages via Meta Learning

Changlong Yu, Jialong Han, Haisong Zhang, Wilfred Ng,

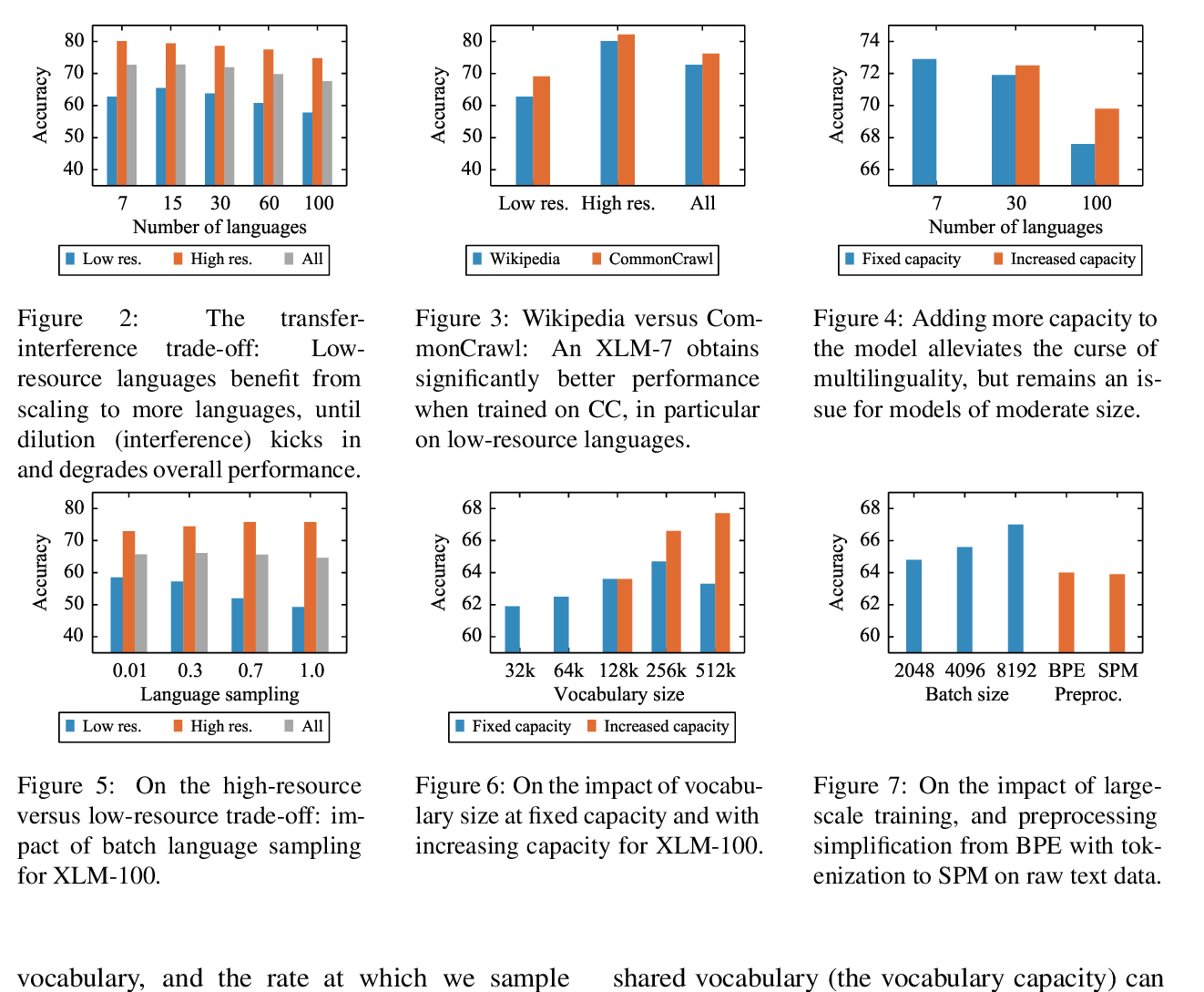

Unsupervised Cross-lingual Representation Learning at Scale

Alexis Conneau, Kartikay Khandelwal, Naman Goyal, Vishrav Chaudhary, Guillaume Wenzek, Francisco Guzmán, Edouard Grave, Myle Ott, Luke Zettlemoyer, Veselin Stoyanov,