Do Neural Language Models Show Preferences for Syntactic Formalisms?

Artur Kulmizev, Vinit Ravishankar, Mostafa Abdou, Joakim Nivre

Syntax: Tagging, Chunking and Parsing

Long Paper

Session 7A: Jul 7 (08:00-09:00 GMT)

Session 8A: Jul 7 (12:00-13:00 GMT)

Abstract:

Recent work on the interpretability of deep neural language models has concluded that many properties of natural language syntax are encoded in their representational spaces. However, such studies often suffer from limited scope by focusing on a single language and a single linguistic formalism. In this study, we aim to investigate the extent to which the semblance of syntactic structure captured by language models adheres to a surface-syntactic or deep syntactic style of analysis, and whether the patterns are consistent across different languages. We apply a probe for extracting directed dependency trees to BERT and ELMo models trained on 13 different languages, probing for two different syntactic annotation styles: Universal Dependencies (UD), prioritizing deep syntactic relations, and Surface-Syntactic Universal Dependencies (SUD), focusing on surface structure. We find that both models exhibit a preference for UD over SUD, with interesting variations across languages and layers, and that the strength of this preference is correlated with differences in tree shape.

Similar Papers

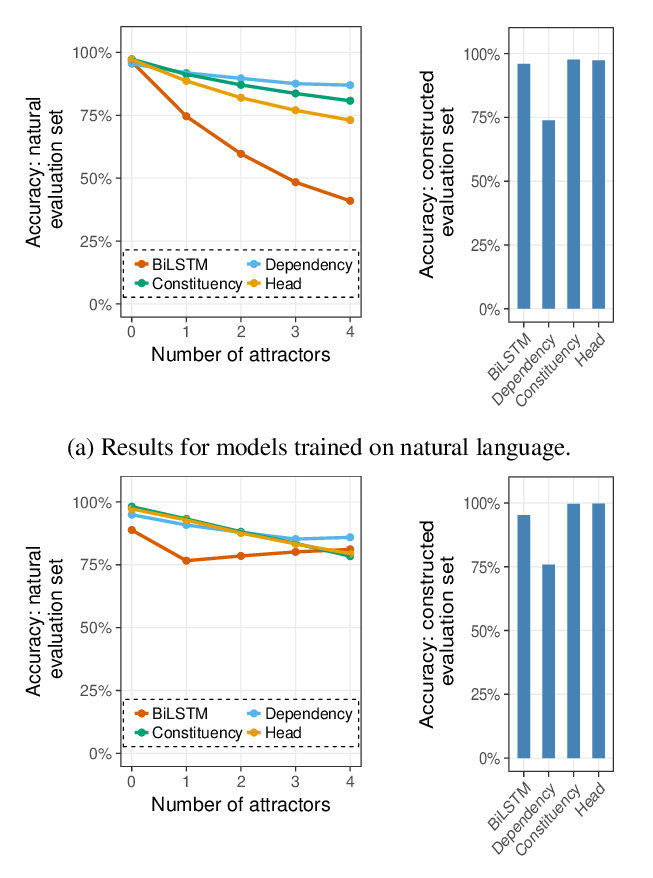

Does Syntax Need to Grow on Trees? Sources of Hierarchical Inductive Bias in Sequence-to-Sequence Networks

R. Thomas McCoy, Robert Frank, Tal Linzen

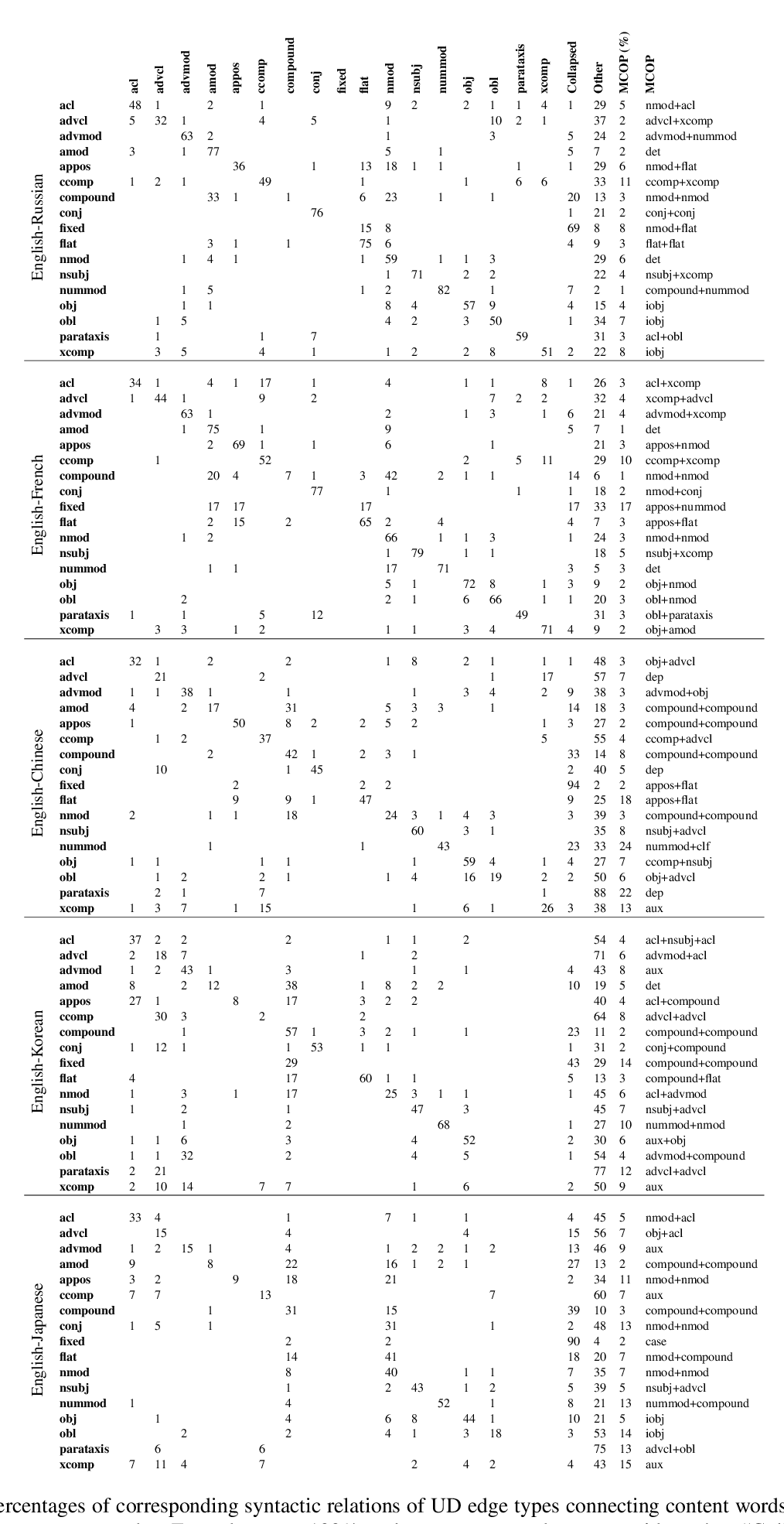

Fine-Grained Analysis of Cross-Linguistic Syntactic Divergences

Dmitry Nikolaev, Ofir Arviv, Taelin Karidi, Neta Kenneth, Veronika Mitnik, Lilja Maria Saeboe, Omri Abend

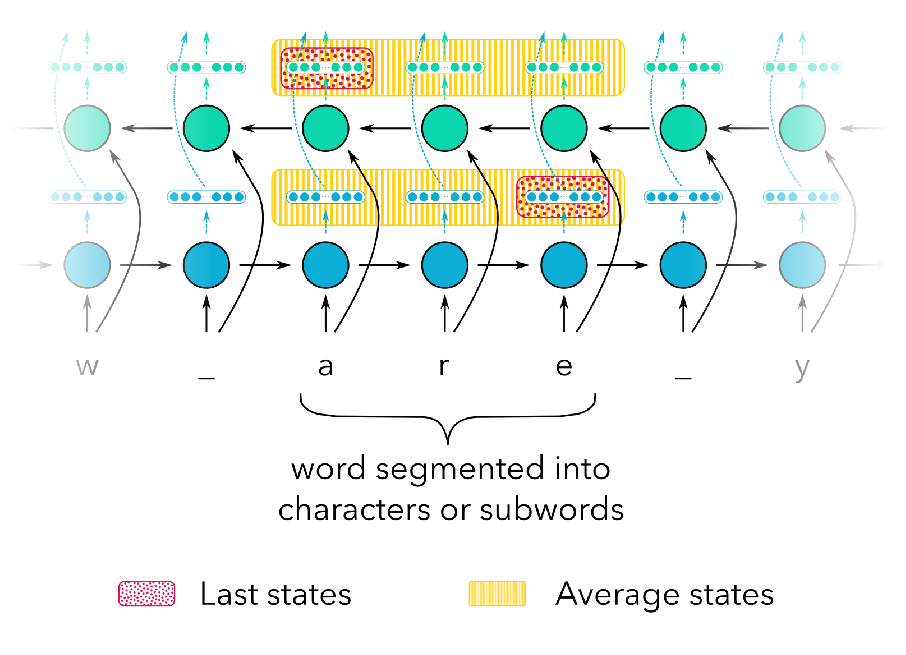

On the Linguistic Representational Power of Neural Machine Translation Models

Yonatan Belinkov, Nadir Durrani, Fahim Dalvi, Hassan Sajjad, James Glass

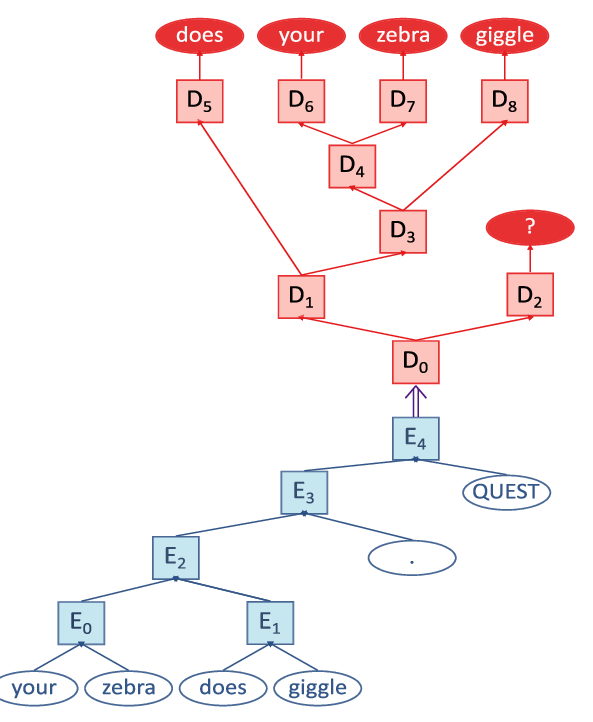

Representations of Syntax [MASK] Useful: Effects of Constituency and Dependency Structure in Recursive LSTMs

Michael Lepori, Tal Linzen, R. Thomas McCoy