Does Syntax Need to Grow on Trees? Sources of Hierarchical Inductive Bias in Sequence-to-Sequence Networks

R. Thomas McCoy, Robert Frank, Tal Linzen

Interpretability and Analysis of Models for NLP (TACL Paper)

Session 8B: Jul 7 (13:00-14:00 GMT)

Session 9B: Jul 7 (18:00-19:00 GMT)

Abstract:

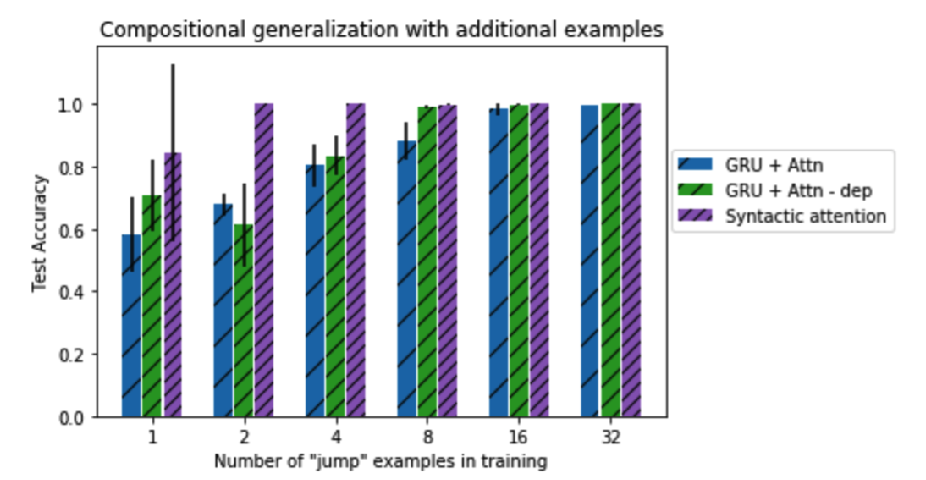

Learners that are exposed to the same training data might generalize differently due to differing inductive biases. In neural network models, inductive biases could in theory arise from any aspect of the model architecture. We investigate which architectural factors affect the generalization behavior of neural sequence-to-sequence models trained on two syntactic tasks, English question formation and English tense reinflection. For both tasks, the training set is consistent with a generalization based on hierarchical structure and a generalization based on linear order. All architectural factors that we investigated qualitatively affected how models generalized, including factors with no clear connection to hierarchical structure. For example, LSTMs and GRUs displayed qualitatively different inductive biases. However, the only factor that consistently contributed a hierarchical bias across tasks was the use of a tree-structured model rather than a model with sequential recurrence, suggesting that human-like syntactic generalization requires architectural syntactic structure.
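To make the ambiguity described in the abstract concrete, the following is a minimal illustrative sketch of question formation under the two candidate generalizations. It is not the authors' code or data: the toy vocabulary, the function names `move_first` and `move_main`, and the hand-supplied index of the main-clause auxiliary (standing in for a syntactic parse) are all assumptions made for illustration.

```python
# Toy auxiliary vocabulary (an assumption for this sketch).
AUXILIARIES = {"can", "does", "will"}


def move_first(tokens):
    """Linear-order rule: front the first auxiliary in the sentence."""
    i = next(k for k, tok in enumerate(tokens) if tok in AUXILIARIES)
    return [tokens[i]] + tokens[:i] + tokens[i + 1:]


def move_main(tokens, main_aux_index):
    """Hierarchical rule: front the main-clause auxiliary.

    The index is given by hand here; a real system would need
    structural information to find it.
    """
    i = main_aux_index
    return [tokens[i]] + tokens[:i] + tokens[i + 1:]


# Ambiguous example: the first auxiliary is also the main-clause auxiliary,
# so both rules produce the same question.
ambiguous = "the dog can see the cat that will run".split()
ambiguous_linear = " ".join(move_first(ambiguous))
ambiguous_hier = " ".join(move_main(ambiguous, main_aux_index=2))

# Disambiguating example: a relative clause on the subject puts a different
# auxiliary first, so the two rules diverge.
probe = "the dog that will run can see the cat".split()
probe_linear = " ".join(move_first(probe))
probe_hier = " ".join(move_main(probe, main_aux_index=5))

print(ambiguous_linear == ambiguous_hier)  # rules agree on the ambiguous case
print(probe_linear)
print(probe_hier)
```

A training set made up only of sentences like the first example cannot distinguish the two rules; sentences like the second reveal which generalization a learner has adopted.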

Similar Papers

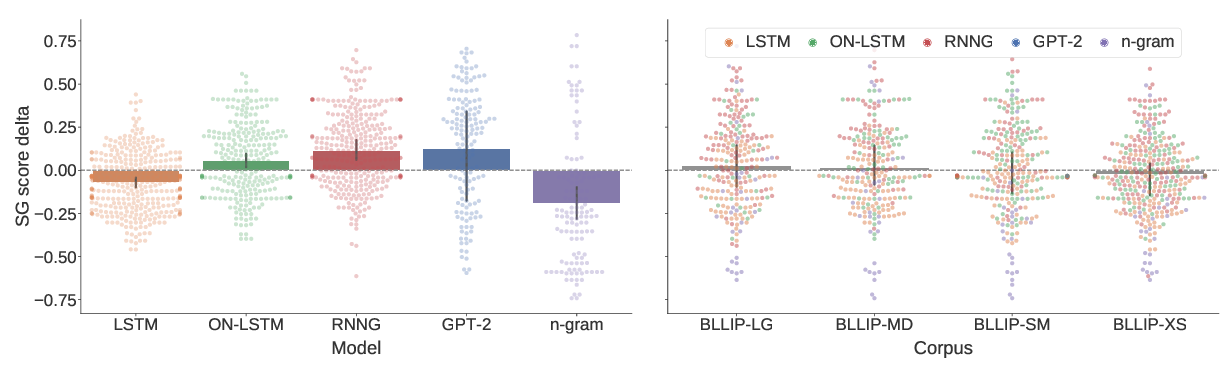

A Systematic Assessment of Syntactic Generalization in Neural Language Models

Jennifer Hu, Jon Gauthier, Peng Qian, Ethan Wilcox, Roger Levy

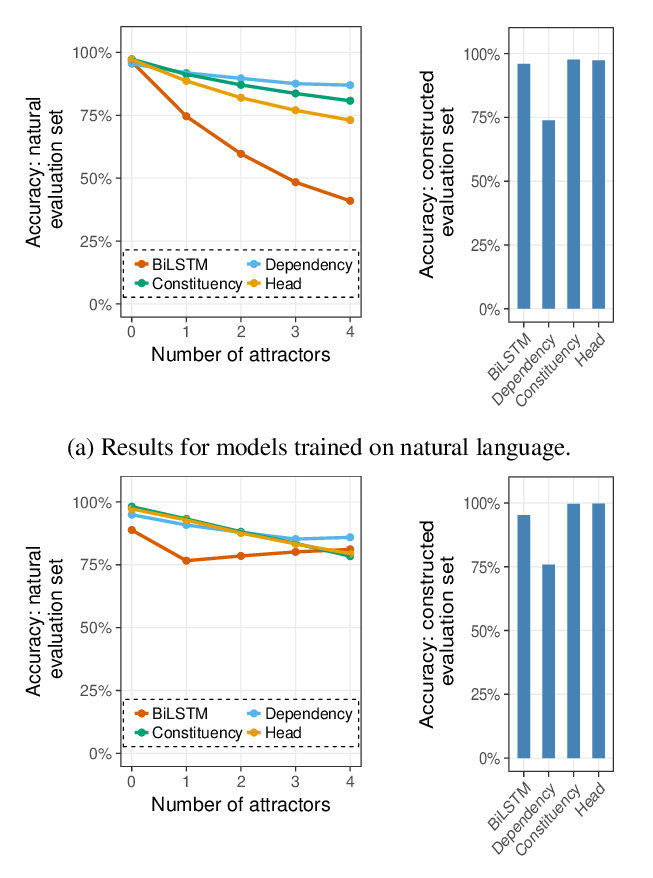

Representations of Syntax [MASK] Useful: Effects of Constituency and Dependency Structure in Recursive LSTMs

Michael Lepori, Tal Linzen, R. Thomas McCoy

Compositional Generalization by Factorizing Alignment and Translation

Jacob Russin, Jason Jo, Randall O'Reilly, Yoshua Bengio

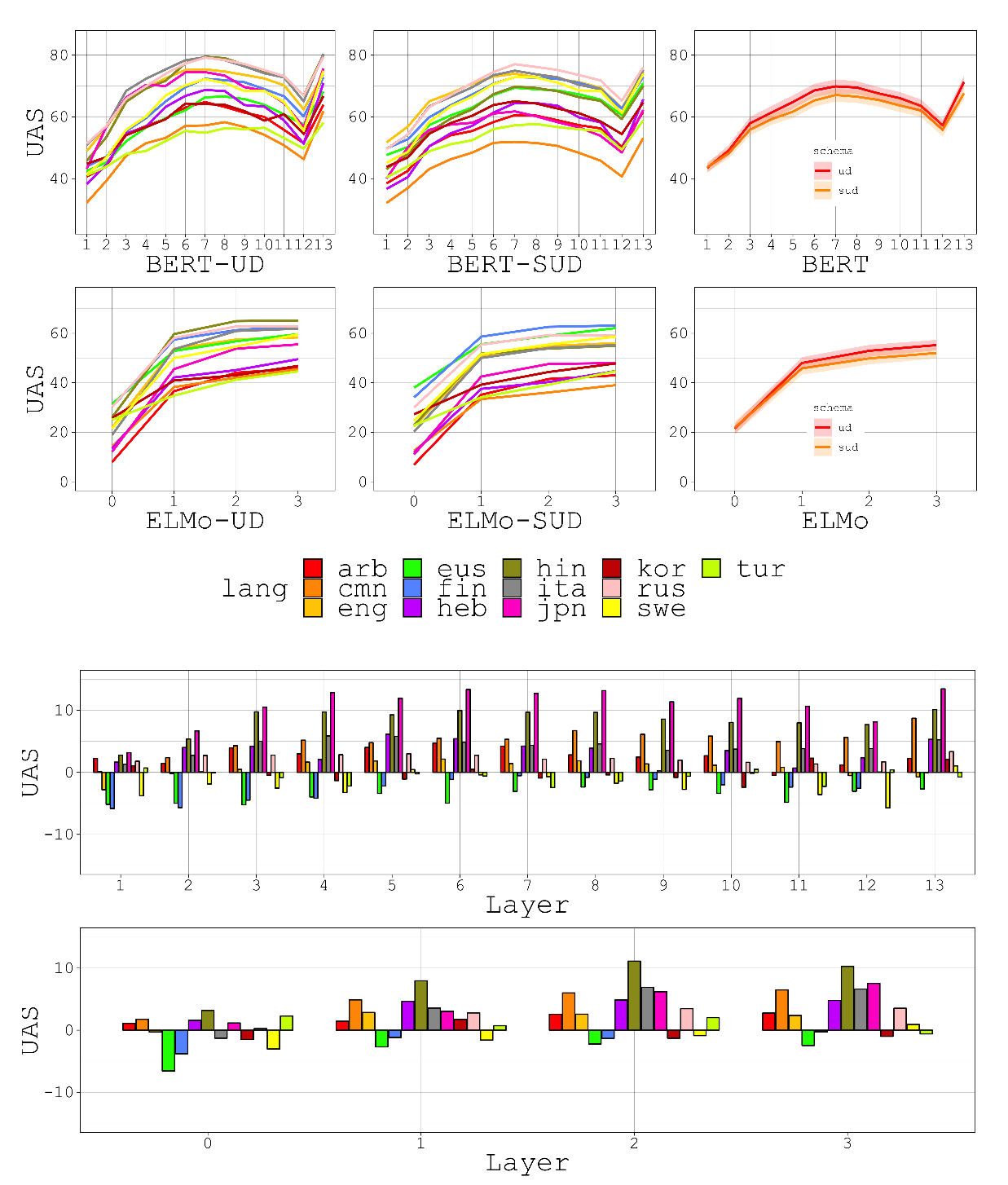

Do Neural Language Models Show Preferences for Syntactic Formalisms?

Artur Kulmizev, Vinit Ravishankar, Mostafa Abdou, Joakim Nivre