Finding Universal Grammatical Relations in Multilingual BERT

Ethan A. Chi, John Hewitt, Christopher D. Manning

Interpretability and Analysis of Models for NLP (Long Paper)

Session 9B: Jul 7 (18:00-19:00 GMT)

Session 10A: Jul 7 (20:00-21:00 GMT)

Abstract:

Recent work has found evidence that Multilingual BERT (mBERT), a transformer-based multilingual masked language model, is capable of zero-shot cross-lingual transfer, suggesting that some aspects of its representations are shared cross-lingually. To better understand this overlap, we extend recent work on finding syntactic trees in neural networks' internal representations to the multilingual setting. We show that subspaces of mBERT representations recover syntactic tree distances in languages other than English, and that these subspaces are approximately shared across languages. Motivated by these results, we present an unsupervised analysis method that provides evidence mBERT learns representations of syntactic dependency labels, in the form of clusters which largely agree with the Universal Dependencies taxonomy. This evidence suggests that even without explicit supervision, multilingual masked language models learn certain linguistic universals.
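The abstract's claim that a subspace "recovers syntactic tree distances" can be made concrete with a small sketch. It follows the structural-probe formulation from the earlier work that the abstract says it extends: a linear map B is applied to word representations, and the squared L2 distance between projected vectors is compared with the distance between the words in the parse tree. Everything below is an illustrative assumption rather than the authors' code: the function names, the toy 3-dimensional vectors, and the hand-picked matrix `B` stand in for real mBERT activations and a trained probe.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def project(B: Matrix, h: Vector) -> Vector:
    """Apply the linear map B (k x d) to a d-dimensional vector h."""
    return [sum(b * x for b, x in zip(row, h)) for row in B]


def probe_distances(B: Matrix, H: List[Vector]) -> Matrix:
    """Squared L2 distance between every pair of word vectors after projection by B."""
    P = [project(B, h) for h in H]
    n = len(P)
    return [
        [sum((a - b) ** 2 for a, b in zip(P[i], P[j])) for j in range(n)]
        for i in range(n)
    ]


# Toy setup (hypothetical values): B keeps only the first two dimensions,
# so the third dimension plays the role of non-syntactic information.
B = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0]]

# Three "word representations" for a sentence whose parse tree is the
# chain w0 - w1 - w2 (tree distances: 1, 1, and 2).
H = [[0.0, 0.0, 5.0],
     [1.0, 0.0, -3.0],
     [1.0, 1.0, 9.0]]

D = probe_distances(B, H)
for row in D:
    print(row)
```

In this toy case the projected squared distances equal the tree distances exactly, even though the full vectors differ widely in the discarded dimension. With real data, B would be learned by minimizing the gap between these two quantities over a treebank, and the match would only be approximate.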



Similar Papers

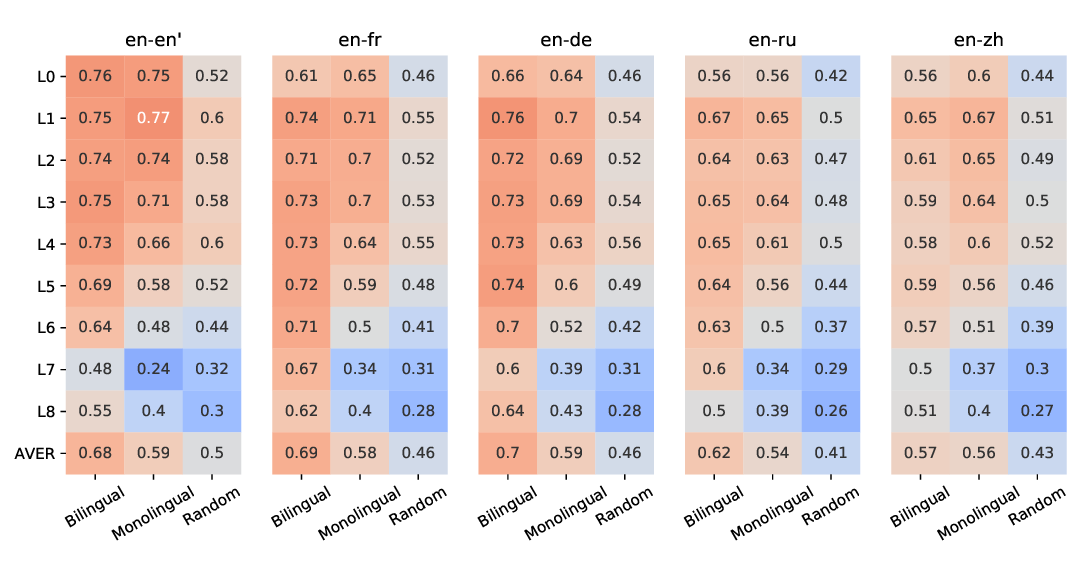

On the Cross-lingual Transferability of Monolingual Representations

Mikel Artetxe, Sebastian Ruder, Dani Yogatama,

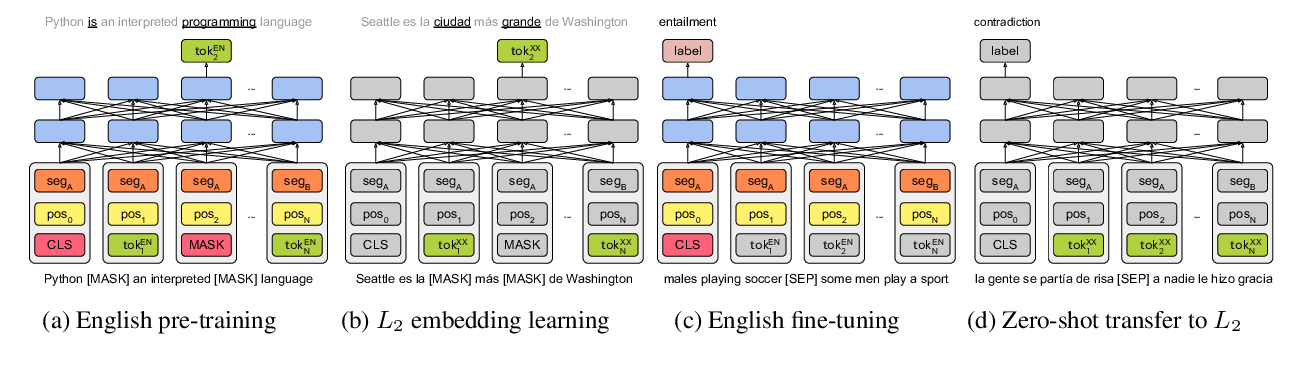

Emerging Cross-lingual Structure in Pretrained Language Models

Alexis Conneau, Shijie Wu, Haoran Li, Luke Zettlemoyer, Veselin Stoyanov,

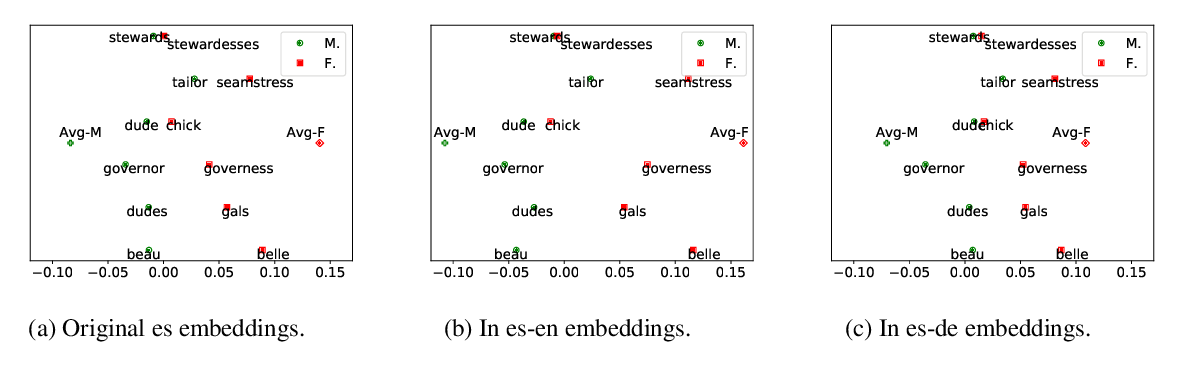

Gender Bias in Multilingual Embeddings and Cross-Lingual Transfer

Jieyu Zhao, Subhabrata Mukherjee, Saghar Hosseini, Kai-Wei Chang, Ahmed Hassan Awadallah,

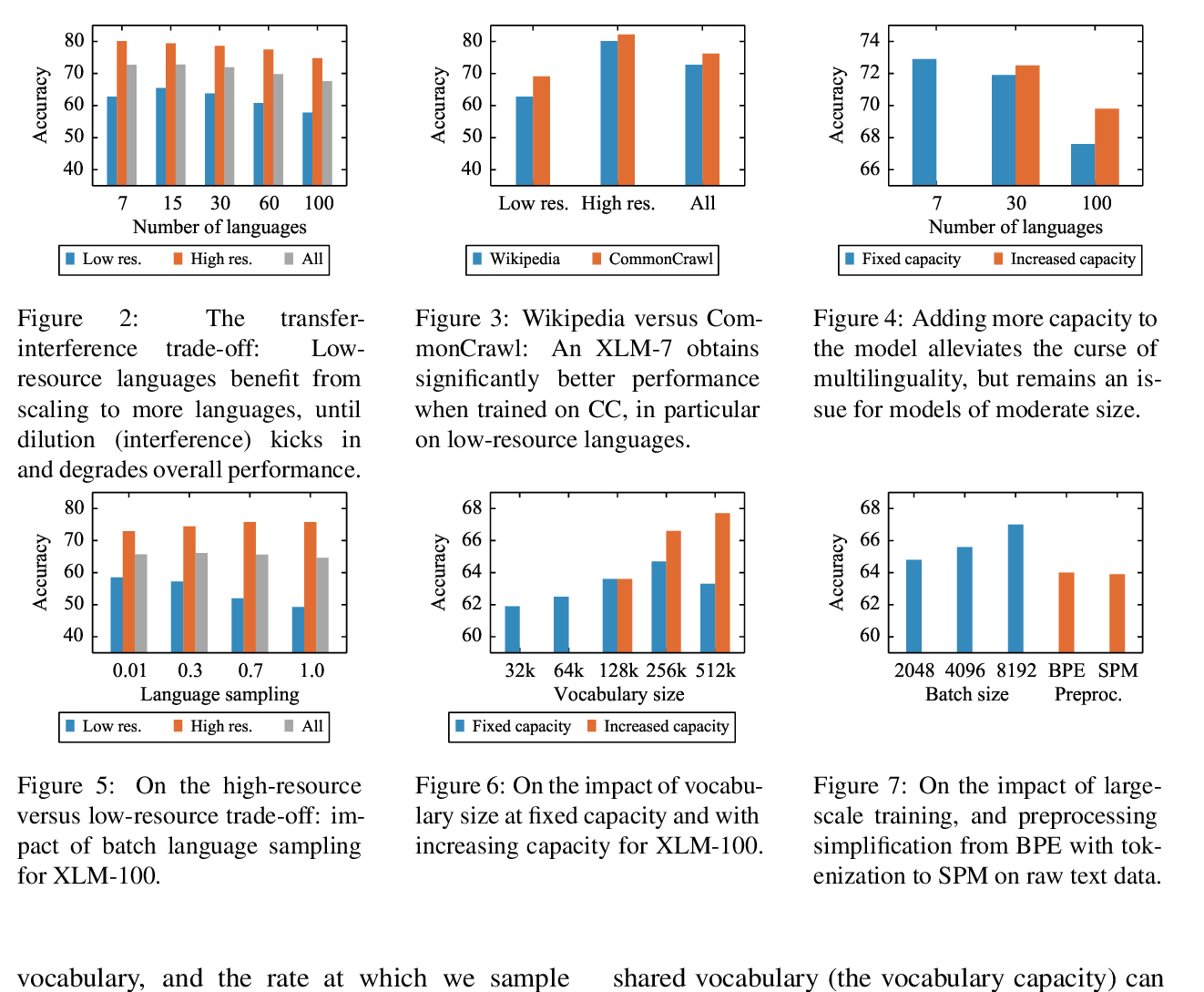

Unsupervised Cross-lingual Representation Learning at Scale

Alexis Conneau, Kartikay Khandelwal, Naman Goyal, Vishrav Chaudhary, Guillaume Wenzek, Francisco Guzmán, Edouard Grave, Myle Ott, Luke Zettlemoyer, Veselin Stoyanov,