To Test Machine Comprehension, Start by Defining Comprehension

Jesse Dunietz, Greg Burnham, Akash Bharadwaj, Owen Rambow, Jennifer Chu-Carroll, Dave Ferrucci

Theme Long Paper

Session 13B: Jul 8 (13:00-14:00 GMT)

Session 15B: Jul 8 (21:00-22:00 GMT)

Abstract:

Many tasks aim to measure machine reading comprehension (MRC), often focusing on question types presumed to be difficult. Rarely, however, do task designers start by considering what systems should in fact comprehend. In this paper we make two key contributions. First, we argue that existing approaches do not adequately define comprehension; they are too unsystematic about what content is tested. Second, we present a detailed definition of comprehension—a "Template of Understanding"—for a widely useful class of texts, namely short narratives. We then conduct an experiment that strongly suggests existing systems are not up to the task of narrative understanding as we define it.

Similar Papers

STARC: Structured Annotations for Reading Comprehension

Yevgeni Berzak, Jonathan Malmaud, Roger Levy

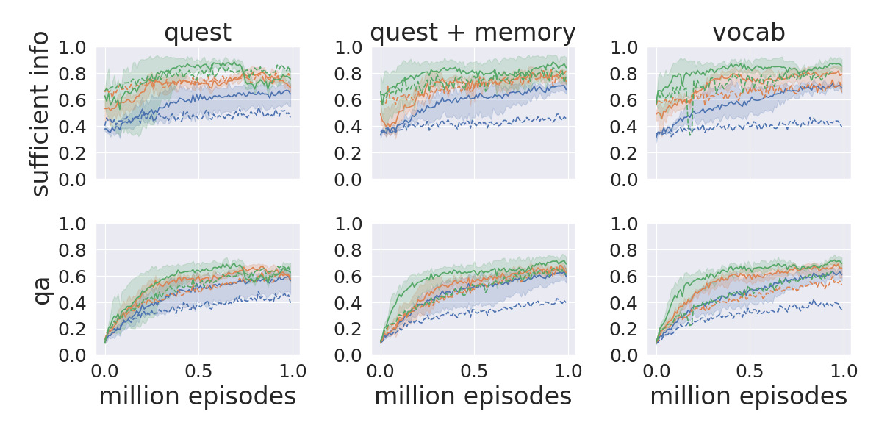

Interactive Machine Comprehension with Information Seeking Agents

Xingdi Yuan, Jie Fu, Marc-Alexandre Côté, Yi Tay, Chris Pal, Adam Trischler

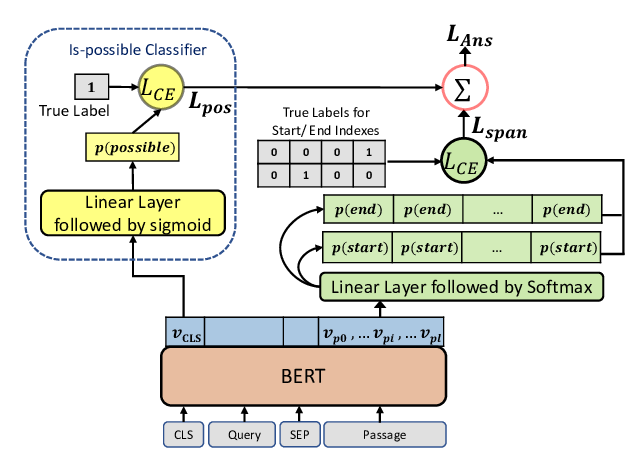

Span Selection Pre-training for Question Answering

Michael Glass, Alfio Gliozzo, Rishav Chakravarti, Anthony Ferritto, Lin Pan, G P Shrivatsa Bhargav, Dinesh Garg, Avi Sil

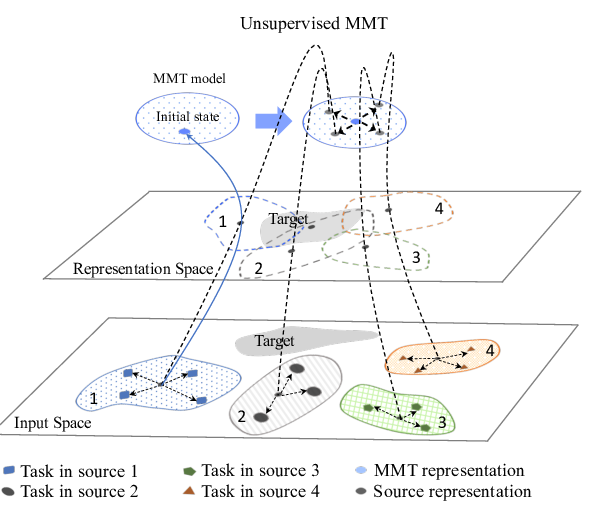

Multi-source Meta Transfer for Low Resource Multiple-Choice Question Answering

Ming Yan, Hao Zhang, Di Jin, Joey Tianyi Zhou