Checkpoint Reranking: An Approach to Select Better Hypothesis for Neural Machine Translation Systems

Vinay Pandramish, Dipti Misra Sharma

Student Research Workshop (SRW) Paper

Session 9A: Jul 7 (17:00-18:00 GMT)

Session 15A: Jul 8 (20:00-21:00 GMT)

Abstract:

In this paper, we propose a method for re-ranking the outputs of Neural Machine Translation (NMT) systems. After decoding, we take the outputs of the last few training iterations as the N-best list. After training an NMT baseline system, we observe that these iteration outputs have an oracle score up to 1.01 BLEU points higher than the output of the last iteration of the trained system. We devise a ranking mechanism that focuses solely on the decoder's ability to generate distinct tokens and uses no language model or additional data. With this method, we achieve a translation improvement of up to +0.16 BLEU points over the baseline. We also evaluate our approach when a coverage penalty is applied to the training process. With a moderate coverage penalty, the oracle scores are up to +0.99 BLEU points higher than the final iteration, and our algorithm gives an improvement of up to +0.17 BLEU points. With an excessive penalty, translation quality decreases compared to the baseline system; still, the oracle scores increase by up to +1.30 BLEU points, and the re-ranking algorithm gives an improvement of up to +0.15 BLEU points. The proposed re-ranking method is generic and can be extended to other language pairs as well.
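The idea of choosing among checkpoint outputs by how many distinct tokens each one contains can be illustrated with a minimal sketch. The abstract does not give the actual scoring function, so everything below is an assumption made for illustration: the score (ratio of distinct tokens to total tokens), the whitespace tokenization, the tie-breaking rule that favors the final checkpoint, and the names `distinct_token_ratio` and `rerank_checkpoints`. It is not the authors' algorithm.

```python
from typing import Sequence


def distinct_token_ratio(hypothesis: str) -> float:
    """Hypothetical score: fraction of tokens in the hypothesis that are distinct.

    Assumes whitespace tokenization; an empty hypothesis scores 0.0.
    """
    tokens = hypothesis.split()
    if not tokens:
        return 0.0
    return len(set(tokens)) / len(tokens)


def rerank_checkpoints(candidates: Sequence[str]) -> str:
    """Pick one translation from the outputs of the last few checkpoints.

    `candidates` holds the translations of the same source sentence,
    ordered from the oldest checkpoint to the final one. The final
    checkpoint's output is kept unless an earlier one scores strictly
    higher (an assumed tie-breaking rule).
    """
    if not candidates:
        raise ValueError("candidates must not be empty")

    best_index = len(candidates) - 1
    best_score = distinct_token_ratio(candidates[best_index])

    # Walk backwards through the earlier checkpoints.
    for index in range(len(candidates) - 2, -1, -1):
        score = distinct_token_ratio(candidates[index])
        if score > best_score:
            best_index, best_score = index, score

    return candidates[best_index]


if __name__ == "__main__":
    n_best = [
        "the cat cat sat sat",          # older checkpoint, repeated tokens
        "the cat sat on the the mat",   # later checkpoint, one repetition
        "the cat sat on a mat",         # final checkpoint, no repetition
    ]
    print(rerank_checkpoints(n_best))
```

A score of this kind needs no language model or extra data, which is the property the abstract emphasizes; whether the paper's mechanism normalizes by length or handles ties this way is not stated here.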


Similar Papers

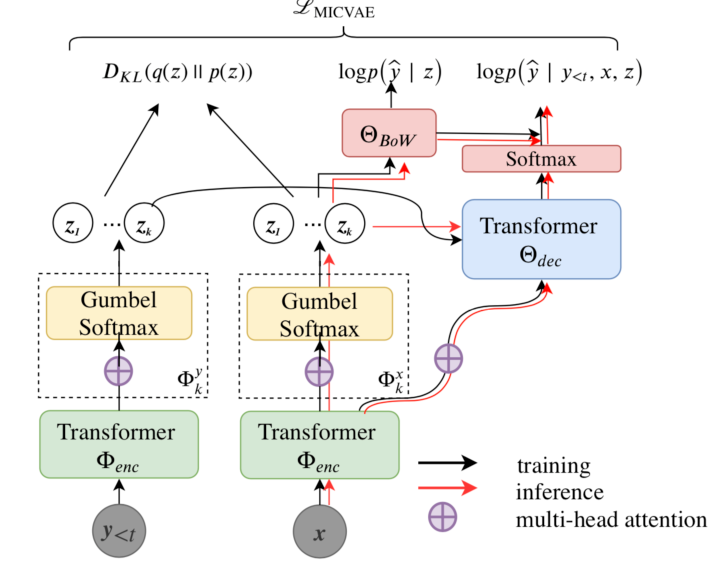

Addressing Posterior Collapse with Mutual Information for Improved Variational Neural Machine Translation

Arya D. McCarthy, Xian Li, Jiatao Gu, Ning Dong

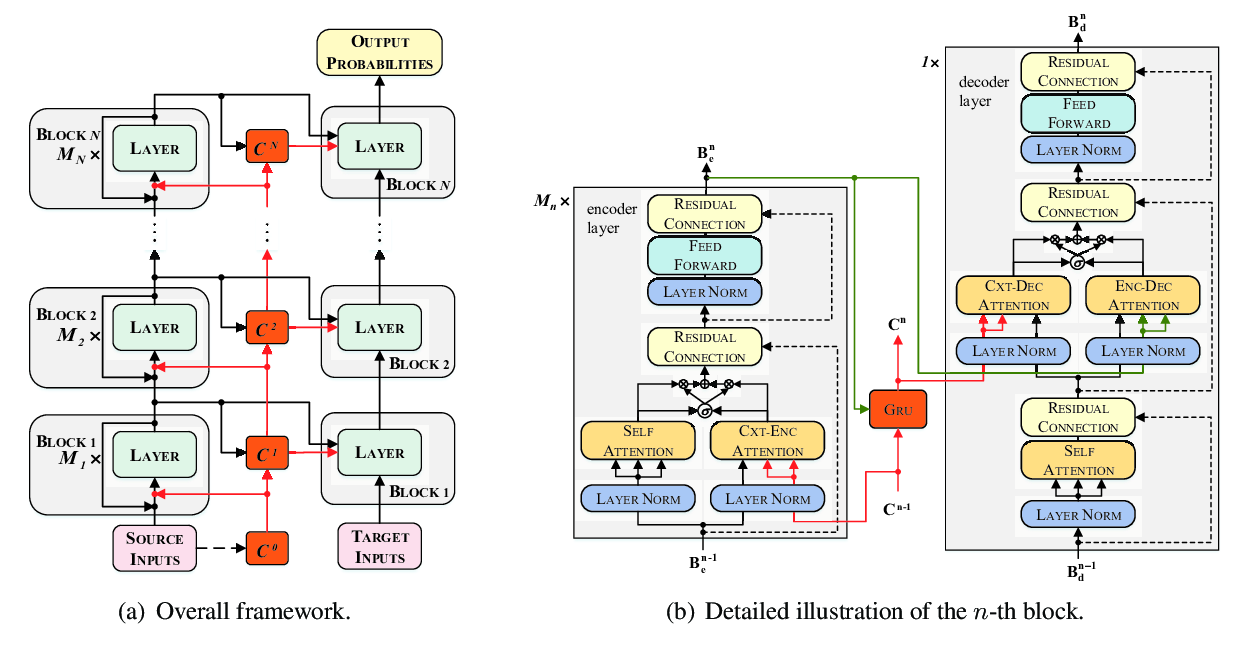

Multiscale Collaborative Deep Models for Neural Machine Translation

Xiangpeng Wei, Heng Yu, Yue Hu, Yue Zhang, Rongxiang Weng, Weihua Luo

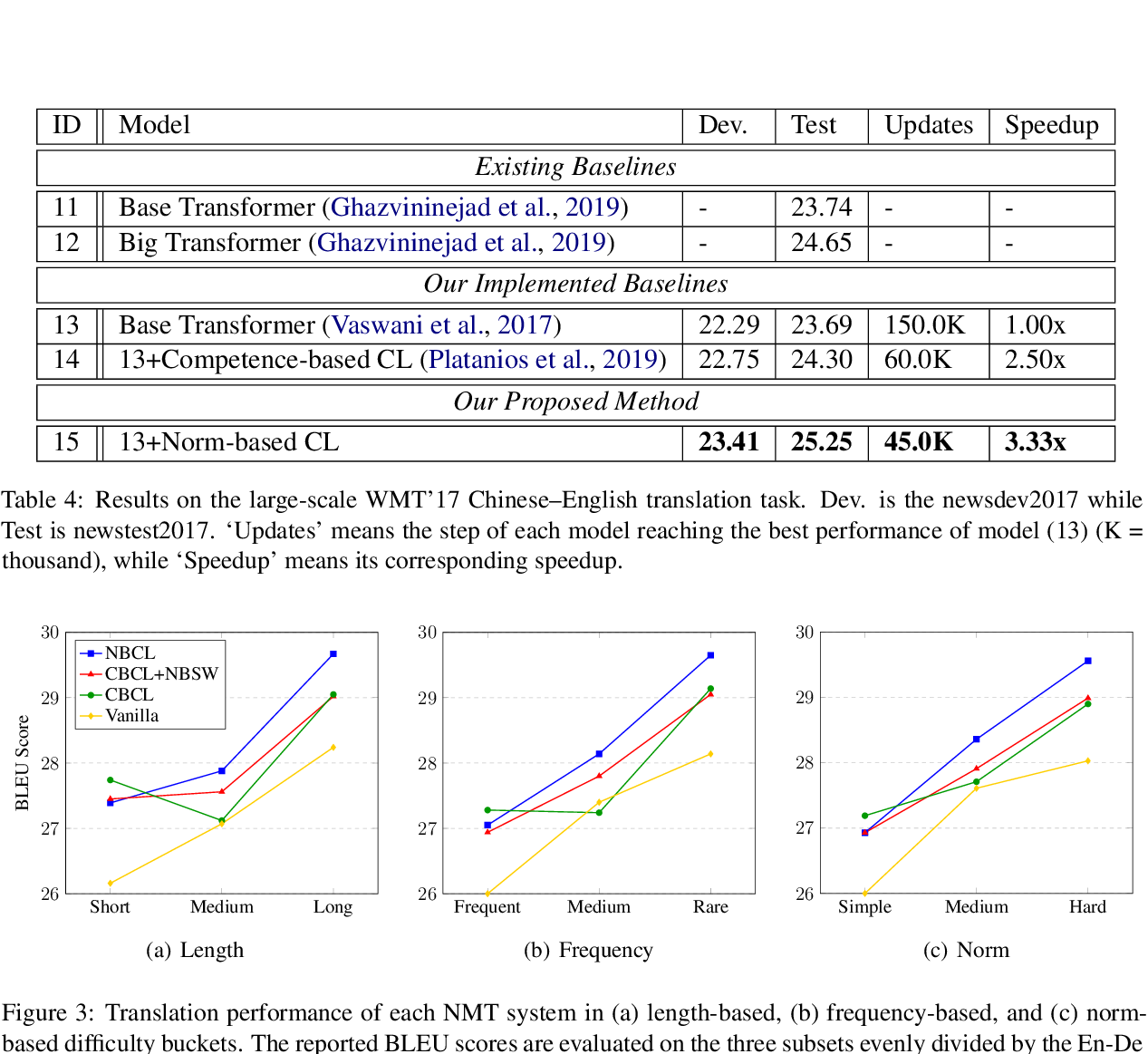

Norm-Based Curriculum Learning for Neural Machine Translation

Xuebo Liu, Houtim Lai, Derek F. Wong, Lidia S. Chao

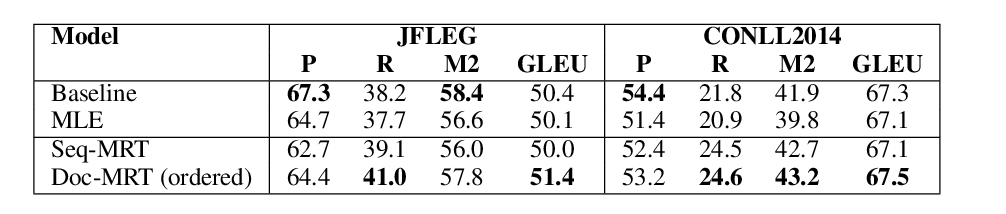

Using Context in Neural Machine Translation Training Objectives

Danielle Saunders, Felix Stahlberg, Bill Byrne