Machine Learning-Driven Language Assessment

Burr Settles, Masato Hagiwara, Geoffrey T. LaFlair

NLP Applications TACL Paper

Session 14B: Jul 8 (18:00-19:00 GMT)

Session 15B: Jul 8 (21:00-22:00 GMT)

Abstract:

We describe a method for rapidly creating language proficiency assessments, and provide experimental evidence that such tests can be valid, reliable, and secure. Our approach is the first to use machine learning and natural language processing to induce proficiency scales based on a given standard, and then use linguistic models to estimate item difficulty directly for computer-adaptive testing. This alleviates the need for expensive pilot testing with human subjects. We used these methods to develop an online proficiency exam called the Duolingo English Test, and demonstrate that its scores align significantly with other high-stakes English assessments. Furthermore, our approach produces test scores that are highly reliable, while generating item banks large enough to satisfy security requirements.
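To make the role of item difficulty in computer-adaptive testing concrete, here is a minimal sketch. It assumes a Rasch (one-parameter logistic) response model and a simple gradient-step ability update; neither is stated in the abstract. The function names (`p_correct`, `next_item`, `update_theta`), the step size, and the difficulty values are hypothetical and for illustration only. This is not the Duolingo English Test implementation.

```python
import math

def p_correct(theta, delta):
    """Rasch (1PL) probability that a test taker with ability theta
    answers an item of difficulty delta correctly (assumed model)."""
    return 1.0 / (1.0 + math.exp(-(theta - delta)))

def next_item(theta, difficulties, used):
    """Pick the unused item whose difficulty is closest to the current
    ability estimate; under the Rasch model this is the most informative item."""
    candidates = [i for i in range(len(difficulties)) if i not in used]
    return min(candidates, key=lambda i: abs(difficulties[i] - theta))

def update_theta(theta, delta, correct, step=0.5):
    """One gradient-ascent step on the Rasch log-likelihood for a single
    response; the step size is an arbitrary illustrative choice."""
    observed = 1.0 if correct else 0.0
    return theta + step * (observed - p_correct(theta, delta))

# Hypothetical item bank: difficulties that would come from a model, not pilot data.
difficulties = [-2.0, 0.3, 1.5]
theta = 0.0
item = next_item(theta, difficulties, used=set())
theta = update_theta(theta, difficulties[item], correct=True)
```

In this sketch the difficulties are simply given as numbers. In the setting the abstract describes, they would be estimated by linguistic models rather than calibrated through pilot testing with human subjects.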

Similar Papers

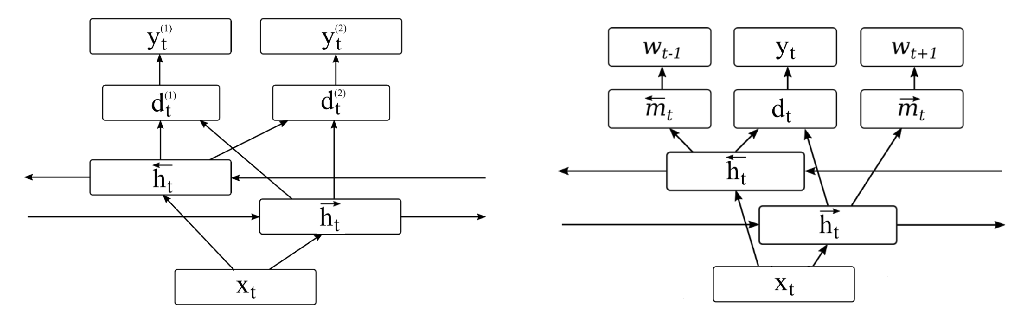

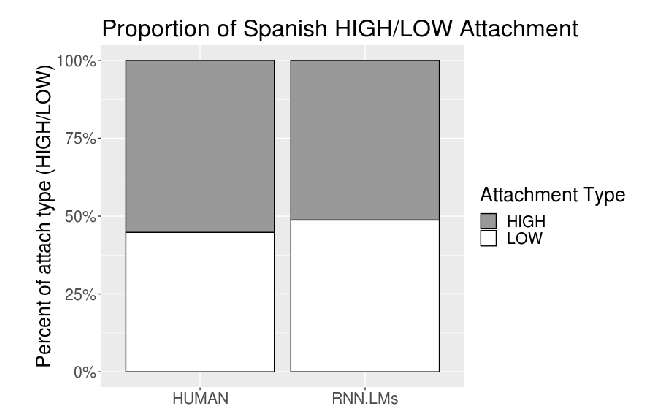

Recurrent Neural Network Language Models Always Learn English-Like Relative Clause Attachment

Forrest Davis, Marten van Schijndel

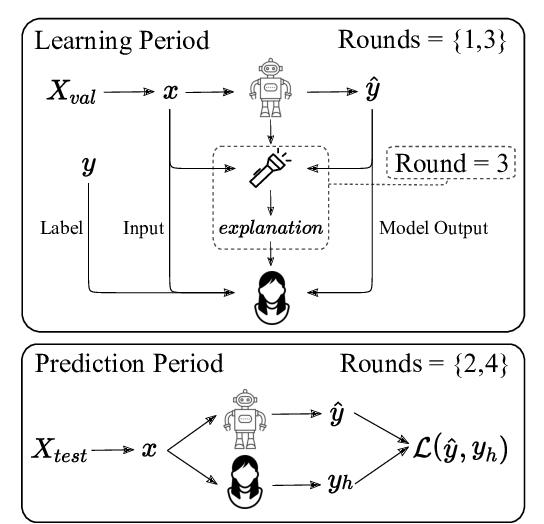

Evaluating Explainable AI: Which Algorithmic Explanations Help Users Predict Model Behavior?

Peter Hase, Mohit Bansal

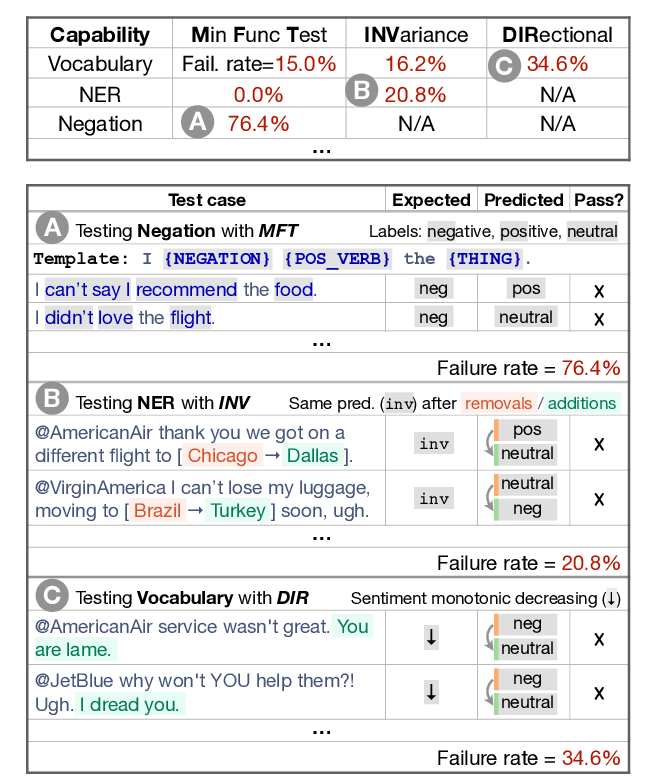

Beyond Accuracy: Behavioral Testing of NLP Models with CheckList

Marco Tulio Ribeiro, Tongshuang Wu, Carlos Guestrin, Sameer Singh

Investigating the effect of auxiliary objectives for the automated grading of learner English speech transcriptions

Hannah Craighead, Andrew Caines, Paula Buttery, Helen Yannakoudakis