jiant: A Software Toolkit for Research on General-Purpose Text Understanding Models

Yada Pruksachatkun, Phil Yeres, Haokun Liu, Jason Phang, Phu Mon Htut, Alex Wang, Ian Tenney, Samuel R. Bowman

System Demonstrations Demo Paper

Demo Session 4C-1: Jul 6

(18:30-19:30 GMT)

Demo Session 5C-1: Jul 6

(21:30-22:30 GMT)

Abstract:

We introduce jiant, an open source toolkit for conducting multitask and transfer learning experiments on English NLU tasks. jiant enables modular and configuration driven experimentation with state-of-the-art models and a broad set of tasks for probing, transfer learning, and multitask training experiments. jiant implements over 50 NLU tasks, including all GLUE and SuperGLUE benchmark tasks. We demonstrate that jiant reproduces published performance on a variety of tasks and models, e.g., RoBERTa and BERT.

You can open the

pre-recorded video

in a separate window.

NOTE: The SlidesLive video may display a random order of the authors.

The correct author list is shown at the top of this webpage.

Similar Papers

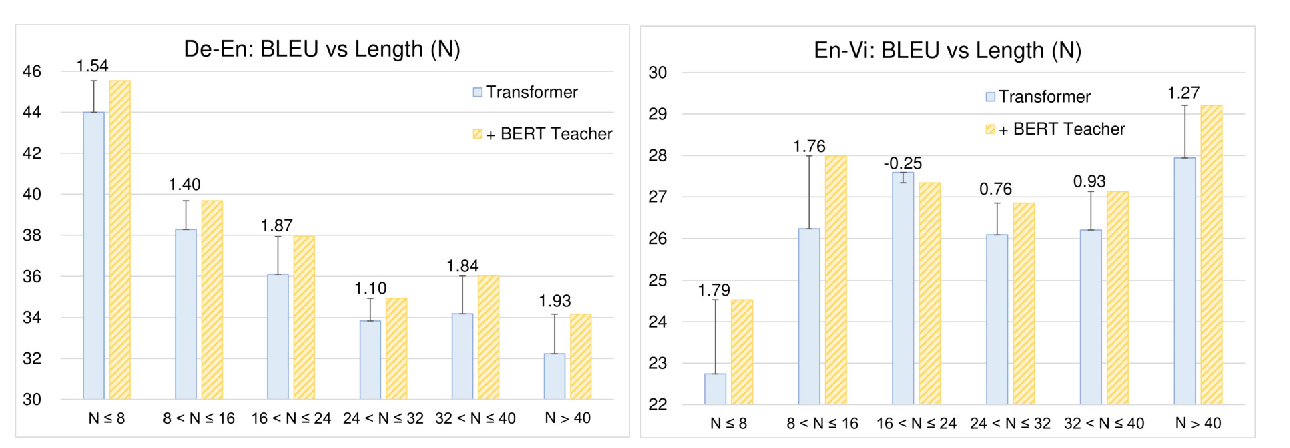

Distilling Knowledge Learned in BERT for Text Generation

Yen-Chun Chen, Zhe Gan, Yu Cheng, Jingzhou Liu, Jingjing Liu,

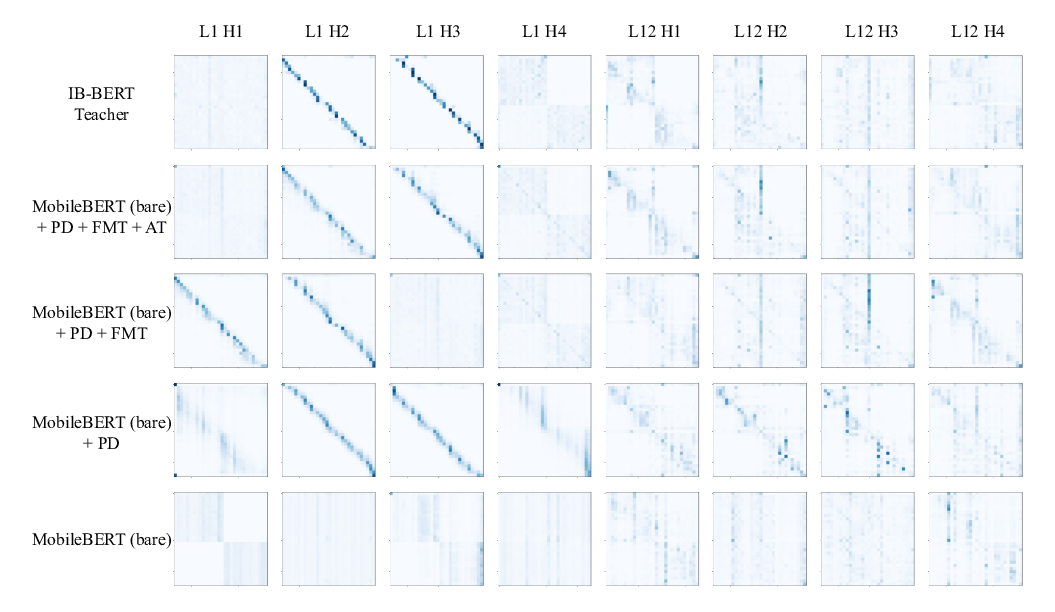

MobileBERT: a Compact Task-Agnostic BERT for Resource-Limited Devices

Zhiqing Sun, Hongkun Yu, Xiaodan Song, Renjie Liu, Yiming Yang, Denny Zhou,

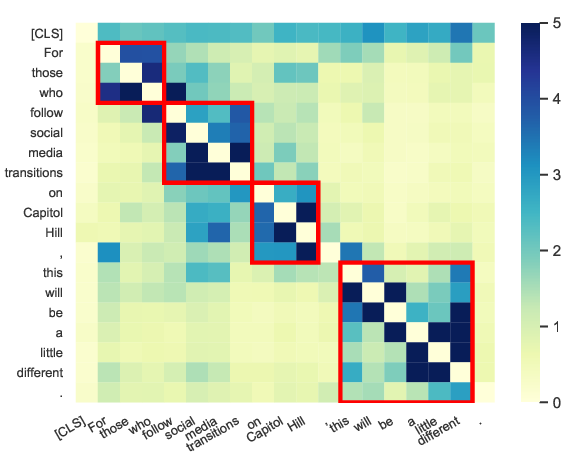

Perturbed Masking: Parameter-free Probing for Analyzing and Interpreting BERT

Zhiyong Wu, Yun Chen, Ben Kao, Qun Liu,

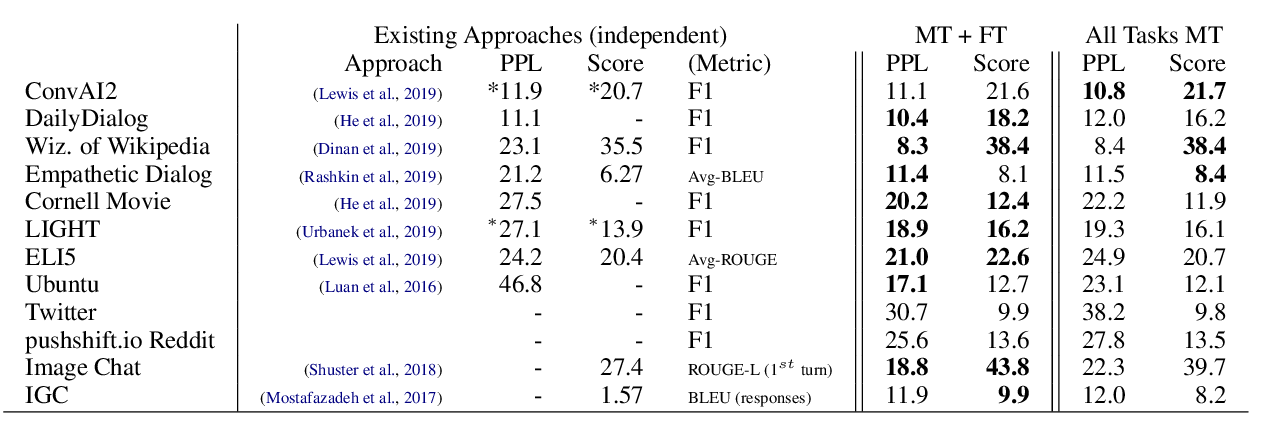

The Dialogue Dodecathlon: Open-Domain Knowledge and Image Grounded Conversational Agents

Kurt Shuster, Da JU, Stephen Roller, Emily Dinan, Y-Lan Boureau, Jason Weston,