The Dialogue Dodecathlon: Open-Domain Knowledge and Image Grounded Conversational Agents

Kurt Shuster, Da Ju, Stephen Roller, Emily Dinan, Y-Lan Boureau, Jason Weston

Dialogue and Interactive Systems Long Paper

Session 4B: Jul 6 (18:00-19:00 GMT)

Session 5B: Jul 6 (21:00-22:00 GMT)

Abstract:

We introduce dodecaDialogue: a set of 12 tasks that measures if a conversational agent can communicate engagingly with personality and empathy, ask questions, answer questions by utilizing knowledge resources, discuss topics and situations, and perceive and converse about images. By multi-tasking on such a broad large-scale set of data, we hope to both move towards and measure progress in producing a single unified agent that can perceive, reason and converse with humans in an open-domain setting. We show that such multi-tasking improves over a BERT pre-trained baseline, largely due to multi-tasking with very large dialogue datasets in a similar domain, and that the multi-tasking in general provides gains to both text and image-based tasks using several metrics in both the fine-tune and task transfer settings. We obtain state-of-the-art results on many of the tasks, providing a strong baseline for this challenge.


Similar Papers

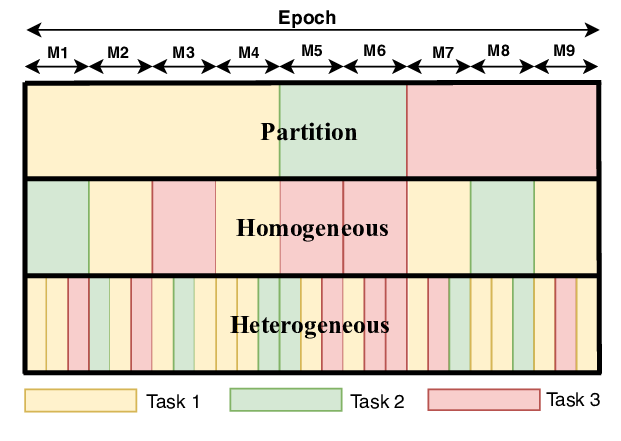

Dynamic Sampling Strategies for Multi-Task Reading Comprehension

Ananth Gottumukkala, Dheeru Dua, Sameer Singh, Matt Gardner

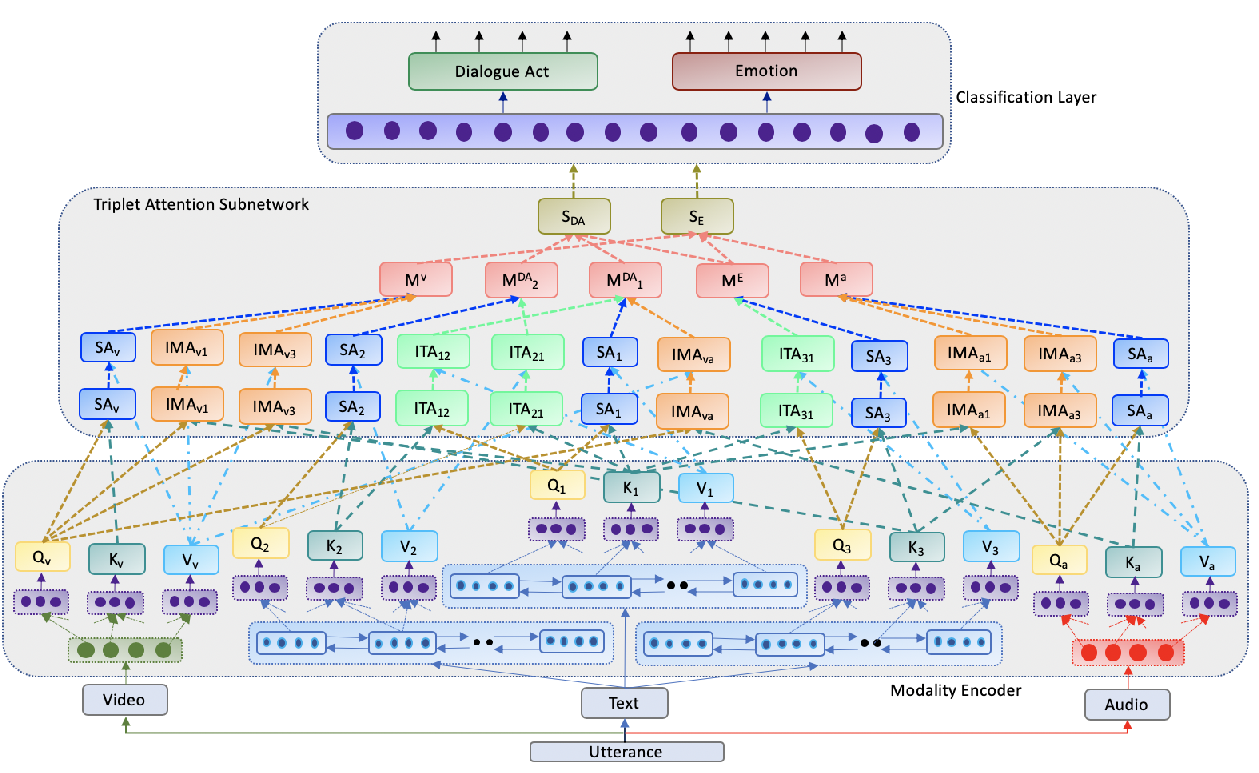

Towards Emotion-aided Multi-modal Dialogue Act Classification

Tulika Saha, Aditya Patra, Sriparna Saha, Pushpak Bhattacharyya

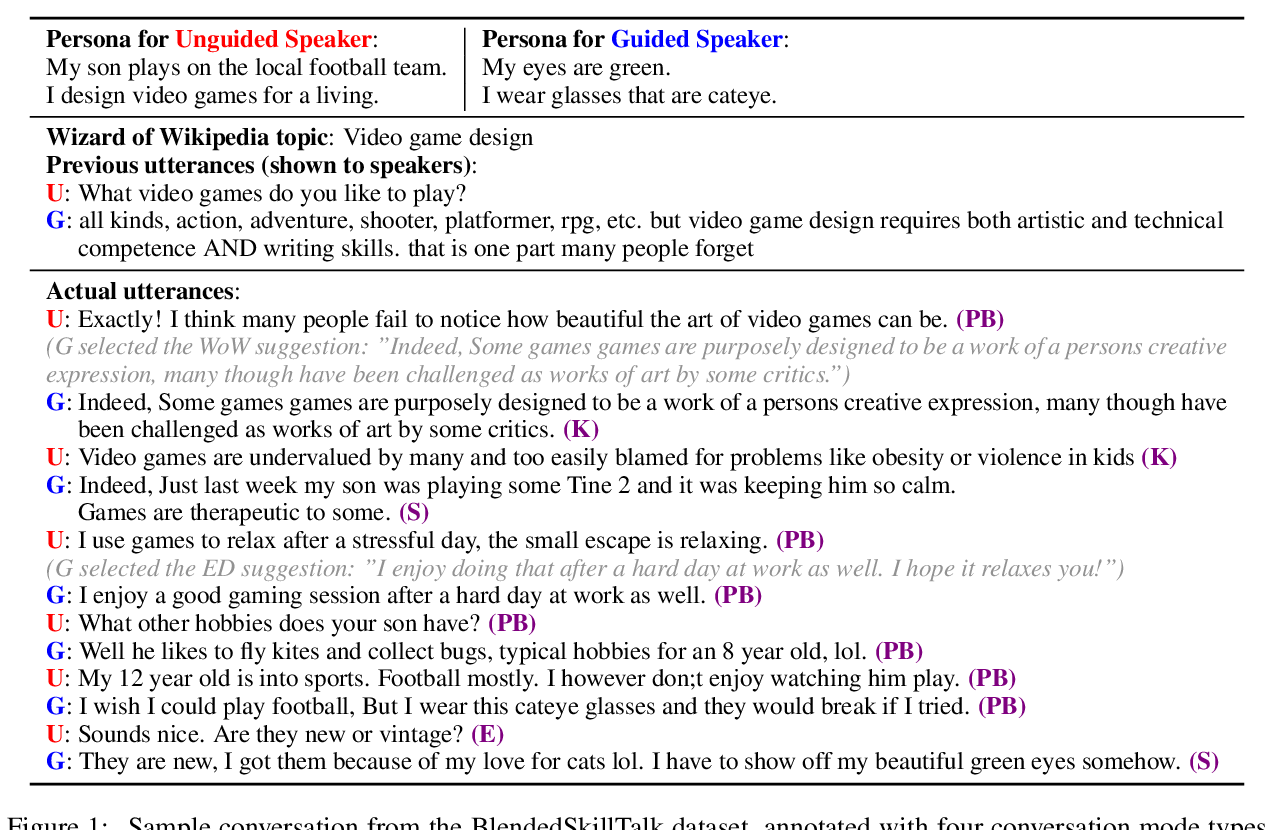

Can You Put it All Together: Evaluating Conversational Agents' Ability to Blend Skills

Eric Michael Smith, Mary Williamson, Kurt Shuster, Jason Weston, Y-Lan Boureau