Perturbed Masking: Parameter-free Probing for Analyzing and Interpreting BERT

Zhiyong Wu, Yun Chen, Ben Kao, Qun Liu

Interpretability and Analysis of Models for NLP Long Paper

Session 7B: Jul 7

(09:00-10:00 GMT)

Session 8B: Jul 7

(13:00-14:00 GMT)

Abstract:

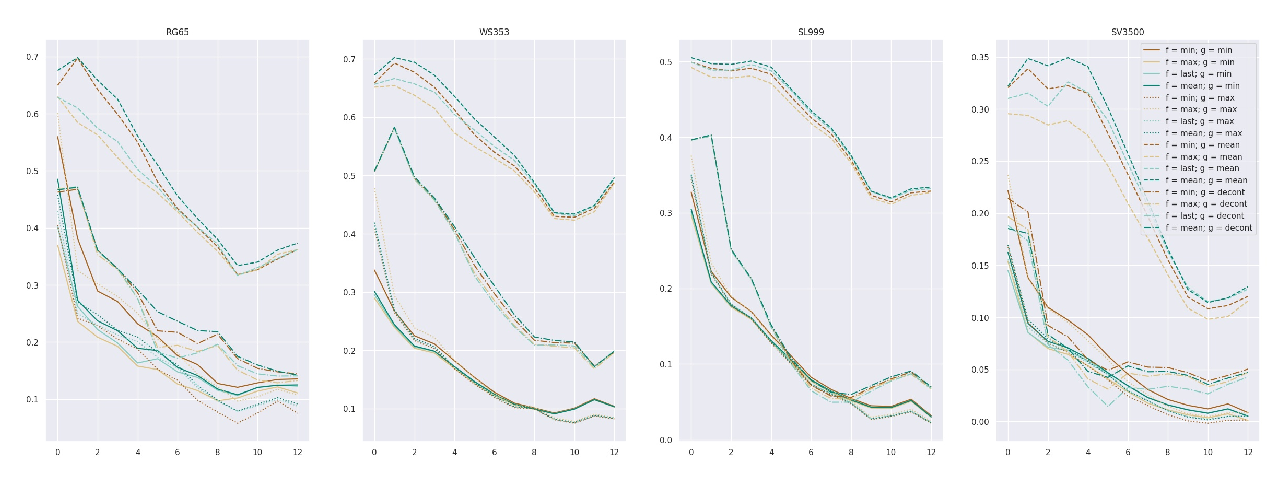

By introducing a small set of additional parameters, a probe learns to solve specific linguistic tasks (e.g., dependency parsing) in a supervised manner using feature representations (e.g., contextualized embeddings). The effectiveness of such probing tasks is taken as evidence that the pre-trained model encodes linguistic knowledge. However, this approach of evaluating a language model is undermined by the uncertainty of the amount of knowledge that is learned by the probe itself. Complementary to those works, we propose a parameter-free probing technique for analyzing pre-trained language models (e.g., BERT). Our method does not require direct supervision from the probing tasks, nor do we introduce additional parameters to the probing process. Our experiments on BERT show that syntactic trees recovered from BERT using our method are significantly better than linguistically-uninformed baselines. We further feed the empirically induced dependency structures into a downstream sentiment classification task and find its improvement compatible with or even superior to a human-designed dependency schema.

You can open the

pre-recorded video

in a separate window.

NOTE: The SlidesLive video may display a random order of the authors.

The correct author list is shown at the top of this webpage.

Similar Papers

Information-Theoretic Probing for Linguistic Structure

Tiago Pimentel, Josef Valvoda, Rowan Hall Maudslay, Ran Zmigrod, Adina Williams, Ryan Cotterell,

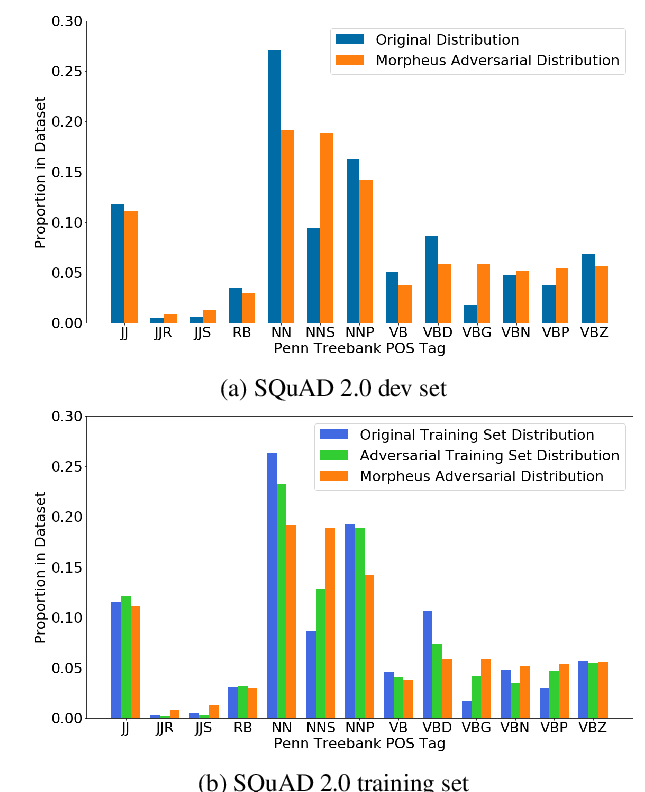

It’s Morphin’ Time! Combating Linguistic Discrimination with Inflectional Perturbations

Samson Tan, Shafiq Joty, Min-Yen Kan, Richard Socher,

Interpreting Pretrained Contextualized Representations via Reductions to Static Embeddings

Rishi Bommasani, Kelly Davis, Claire Cardie,

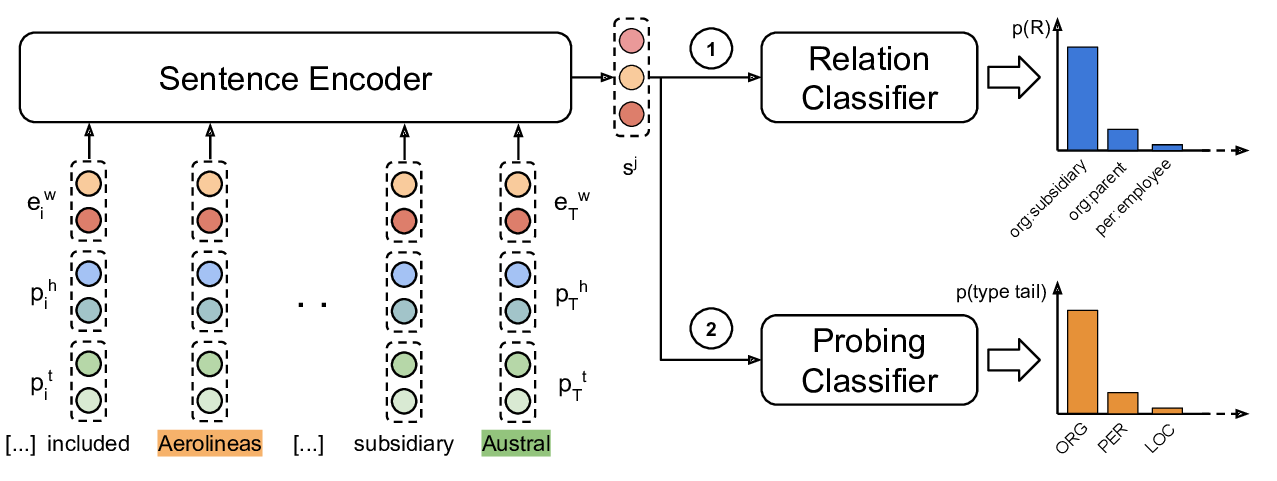

Probing Linguistic Features of Sentence-Level Representations in Relation Extraction

Christoph Alt, Aleksandra Gabryszak, Leonhard Hennig,