KLEJ: Comprehensive Benchmark for Polish Language Understanding

Piotr Rybak, Robert Mroczkowski, Janusz Tracz, Ireneusz Gawlik

Resources and Evaluation Long Paper

Session 2A: Jul 6

(08:00-09:00 GMT)

Session 3A: Jul 6

(12:00-13:00 GMT)

Abstract:

In recent years, a series of Transformer-based models unlocked major improvements in general natural language understanding (NLU) tasks. Such a fast pace of research would not be possible without general NLU benchmarks, which allow for a fair comparison of the proposed methods. However, such benchmarks are available only for a handful of languages. To alleviate this issue, we introduce a comprehensive multi-task benchmark for the Polish language understanding, accompanied by an online leaderboard. It consists of a diverse set of tasks, adopted from existing datasets for named entity recognition, question-answering, textual entailment, and others. We also introduce a new sentiment analysis task for the e-commerce domain, named Allegro Reviews (AR). To ensure a common evaluation scheme and promote models that generalize to different NLU tasks, the benchmark includes datasets from varying domains and applications. Additionally, we release HerBERT, a Transformer-based model trained specifically for the Polish language, which has the best average performance and obtains the best results for three out of nine tasks. Finally, we provide an extensive evaluation, including several standard baselines and recently proposed, multilingual Transformer-based models.

You can open the

pre-recorded video

in a separate window.

NOTE: The SlidesLive video may display a random order of the authors.

The correct author list is shown at the top of this webpage.

Similar Papers

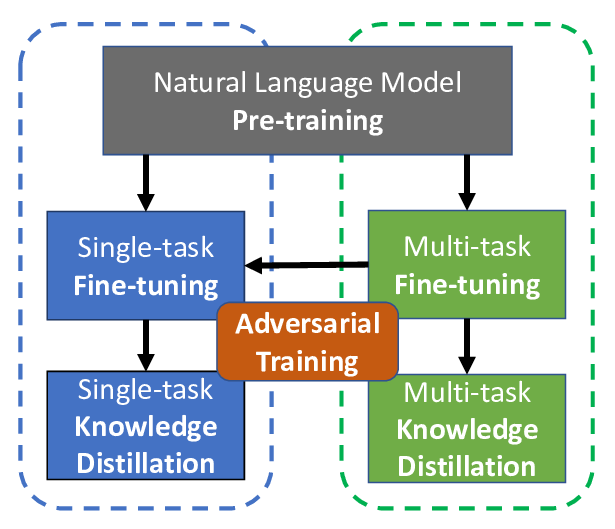

The Microsoft Toolkit of Multi-Task Deep Neural Networks for Natural Language Understanding

Xiaodong Liu, Yu Wang, Jianshu Ji, Hao Cheng, Xueyun Zhu, Emmanuel Awa, Pengcheng He, Weizhu Chen, Hoifung Poon, Guihong Cao, Jianfeng Gao,

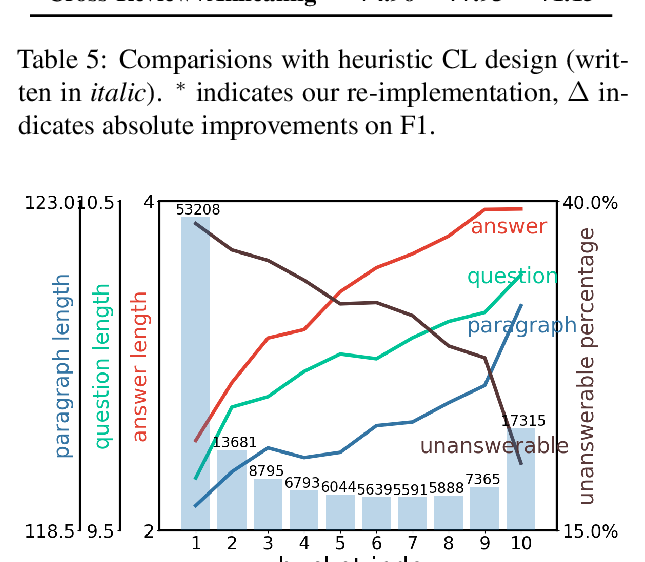

Curriculum Learning for Natural Language Understanding

Benfeng Xu, Licheng Zhang, Zhendong Mao, Quan Wang, Hongtao Xie, Yongdong Zhang,

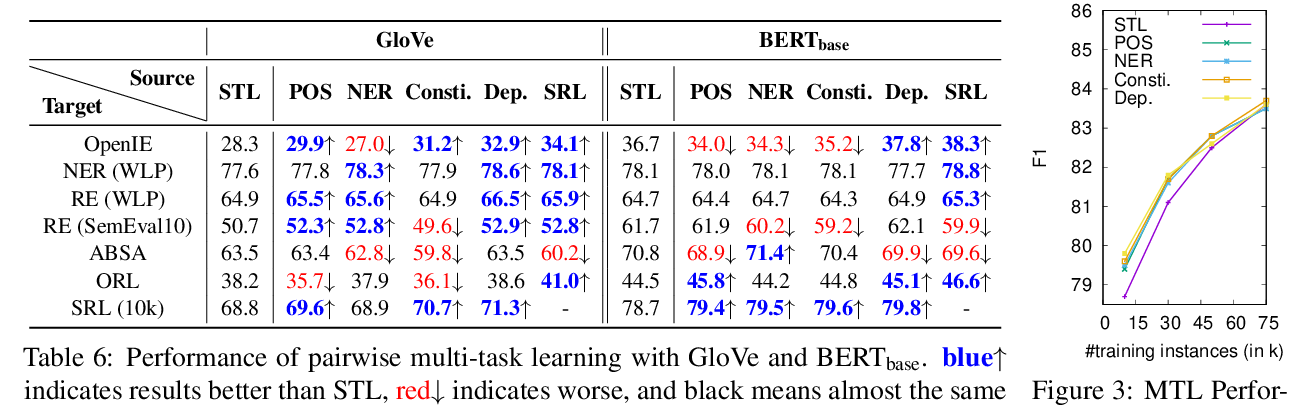

Generalizing Natural Language Analysis through Span-relation Representations

Zhengbao Jiang, Wei Xu, Jun Araki, Graham Neubig,

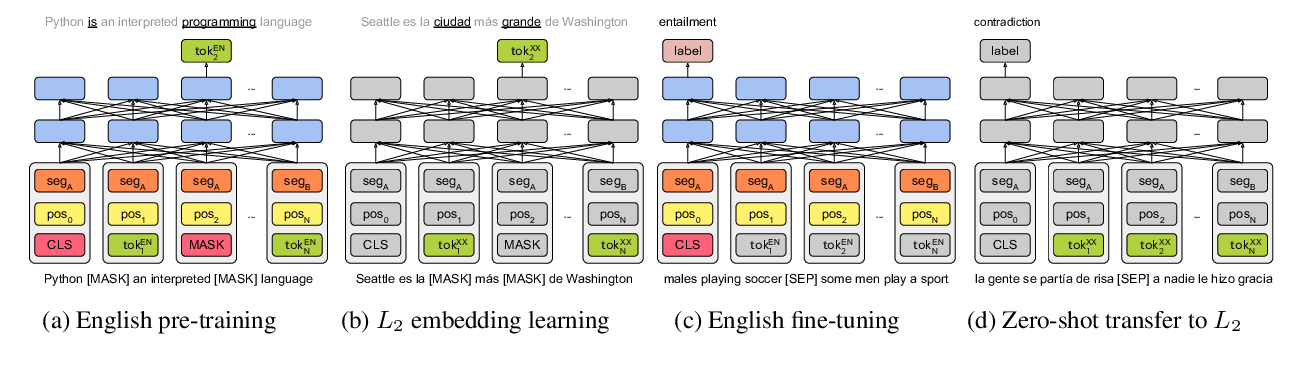

On the Cross-lingual Transferability of Monolingual Representations

Mikel Artetxe, Sebastian Ruder, Dani Yogatama,