Zero-Shot Transfer Learning with Synthesized Data for Multi-Domain Dialogue State Tracking

Giovanni Campagna, Agata Foryciarz, Mehrad Moradshahi, Monica Lam

Dialogue and Interactive Systems Long Paper

Session 1A: Jul 6 (05:00-06:00 GMT)

Session 4A: Jul 6 (17:00-18:00 GMT)

Abstract:

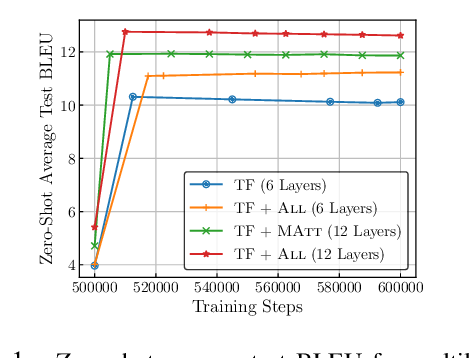

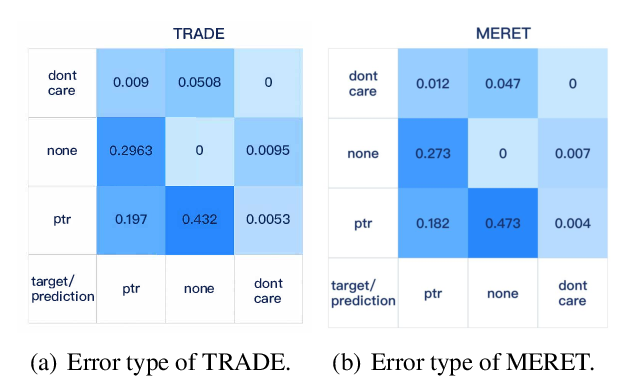

Zero-shot transfer learning for multi-domain dialogue state tracking can allow us to handle new domains without incurring the high cost of data acquisition. This paper proposes a new zero-shot transfer learning technique for dialogue state tracking in which the in-domain training data are all synthesized from an abstract dialogue model and the ontology of the domain. We show that data augmentation through synthesized data can improve the accuracy of zero-shot learning for both the TRADE model and the BERT-based SUMBT model on the MultiWOZ 2.1 dataset. We show that training with only synthesized in-domain data on the SUMBT model can reach about 2/3 of the accuracy obtained with the full training dataset. We improve the zero-shot learning state of the art on average across domains by 21%.
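To make the idea of synthesizing in-domain training data from an ontology more concrete, here is a minimal Python sketch. It is not the authors' method: the toy `ONTOLOGY`, the `TEMPLATES` table standing in for an abstract dialogue model, and the `synthesize_dialogue` function are all hypothetical names invented for illustration. It only shows how slot values drawn from an ontology might be turned into user utterances paired with cumulative dialogue states.

```python
import random
from typing import Dict, List, Tuple

# Hypothetical toy ontology: slot name -> possible values (illustrative only).
ONTOLOGY: Dict[str, List[str]] = {
    "restaurant-food": ["italian", "chinese"],
    "restaurant-area": ["north", "centre"],
}

# Hypothetical utterance templates, a crude stand-in for an abstract dialogue model.
TEMPLATES: Dict[str, List[str]] = {
    "restaurant-food": ["I would like {value} food.", "Do you have any {value} places?"],
    "restaurant-area": ["It should be in the {value}.", "Somewhere in the {value}, please."],
}

Turn = Tuple[str, Dict[str, str]]


def synthesize_dialogue(rng: random.Random, num_turns: int = 2) -> List[Turn]:
    """Return (user utterance, cumulative dialogue state) pairs for one dialogue."""
    state: Dict[str, str] = {}
    turns: List[Turn] = []
    # Pick distinct slots to mention, one per turn.
    slots = rng.sample(sorted(ONTOLOGY), k=min(num_turns, len(ONTOLOGY)))
    for slot in slots:
        value = rng.choice(ONTOLOGY[slot])
        utterance = rng.choice(TEMPLATES[slot]).format(value=value)
        state[slot] = value
        # Copy the state so each turn keeps its own snapshot as the label.
        turns.append((utterance, dict(state)))
    return turns


if __name__ == "__main__":
    for utterance, state in synthesize_dialogue(random.Random(0)):
        print(utterance, state)
```

Each synthesized turn carries its state annotation by construction, so no manual labeling is needed. A real system would need far richer templates and dialogue structure than this toy table provides.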

Similar Papers

Improving Massively Multilingual Neural Machine Translation and Zero-Shot Translation

Biao Zhang, Philip Williams, Ivan Titov, Rico Sennrich

Meta-Reinforced Multi-Domain State Generator for Dialogue Systems

Yi Huang, Junlan Feng, Min Hu, Xiaoting Wu, Xiaoyu Du, Shuo Ma

Language to Network: Conditional Parameter Adaptation with Natural Language Descriptions

Tian Jin, Zhun Liu, Shengjia Yan, Alexandre Eichenberger, Louis-Philippe Morency