Attend, Translate and Summarize: An Efficient Method for Neural Cross-Lingual Summarization

Junnan Zhu, Yu Zhou, Jiajun Zhang, Chengqing Zong

Summarization Long Paper

Session 2A: Jul 6 (08:00-09:00 GMT)

Session 3B: Jul 6 (13:00-14:00 GMT)

Abstract:

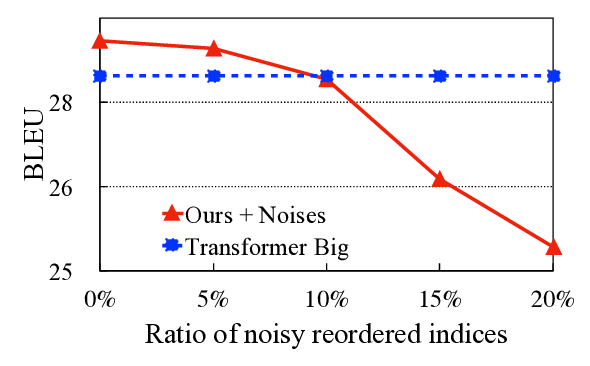

Cross-lingual summarization aims at summarizing a document in one language (e.g., Chinese) into another language (e.g., English). In this paper, we propose a novel method inspired by the translation pattern in the process of obtaining a cross-lingual summary. We first attend to some words in the source text, then translate them into the target language, and summarize to get the final summary. Specifically, we first employ the encoder-decoder attention distribution to attend to the source words. Second, we present three strategies to acquire the translation probability, which helps obtain the translation candidates for each source word. Finally, each summary word is generated either from the neural distribution or from the translation candidates of source words. Experimental results on Chinese-to-English and English-to-Chinese summarization tasks show that our proposed method significantly outperforms the baselines and achieves performance comparable to the state of the art.
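The final generation step described in the abstract can be illustrated with a small sketch. It assumes a pointer-generator-style mixture: a scalar gate weighs the neural vocabulary distribution against the translation candidates, and each candidate is weighted by the attention on its source word. The function name `mix_distributions`, the gate `p_trans`, and all toy values are hypothetical and chosen for illustration only; the abstract does not specify the exact formulation.

```python
from collections import defaultdict


def mix_distributions(neural_dist, attention, translation_probs, p_trans):
    """Combine a neural vocabulary distribution with translation candidates.

    neural_dist:       dict, target word -> probability (sums to 1)
    attention:         list of attention weights, one per source word (sums to 1)
    translation_probs: list of dicts, one per source word,
                       target word -> translation probability (each sums to 1)
    p_trans:           assumed scalar gate in [0, 1]; weight of the
                       translation candidates in the final distribution
    """
    final = defaultdict(float)

    # Share of probability mass that comes from the neural distribution.
    for word, prob in neural_dist.items():
        final[word] += (1.0 - p_trans) * prob

    # Share that comes from translating the attended source words.
    for weight, candidates in zip(attention, translation_probs):
        for word, prob in candidates.items():
            final[word] += p_trans * weight * prob

    return dict(final)


# Toy example with two source words (all values are made up).
neural_dist = {"the": 0.5, "cat": 0.3, "sat": 0.2}
attention = [0.75, 0.25]
translation_probs = [
    {"cat": 0.5, "kitten": 0.5},  # candidates for the first source word
    {"sat": 1.0},                 # candidates for the second source word
]

final = mix_distributions(neural_dist, attention, translation_probs, p_trans=0.5)
best_word = max(final, key=final.get)
```

In this sketch, "kitten" is absent from the neural distribution but still receives probability mass through the translation candidates, which is the behavior the abstract attributes to generating a summary word "from the translation candidates of source words".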


Similar Papers

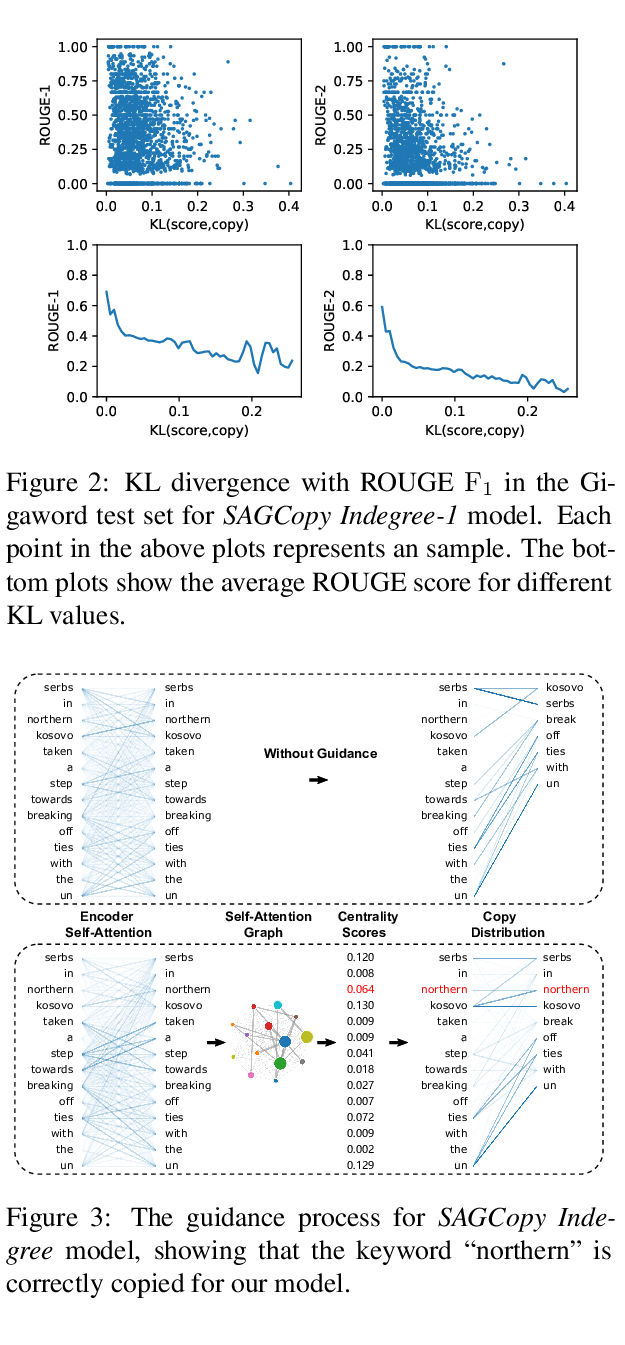

Self-Attention Guided Copy Mechanism for Abstractive Summarization

Song Xu, Haoran Li, Peng Yuan, Youzheng Wu, Xiaodong He, Bowen Zhou

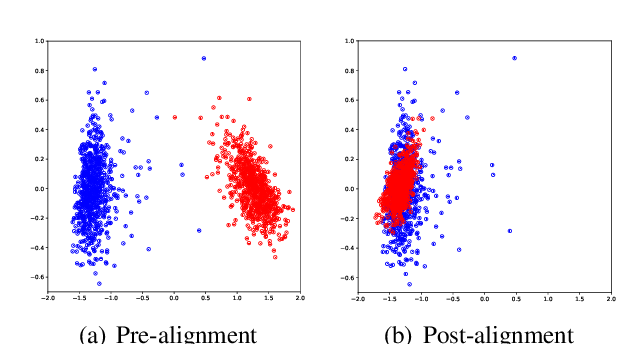

Jointly Learning to Align and Summarize for Neural Cross-Lingual Summarization

Yue Cao, Hui Liu, Xiaojun Wan

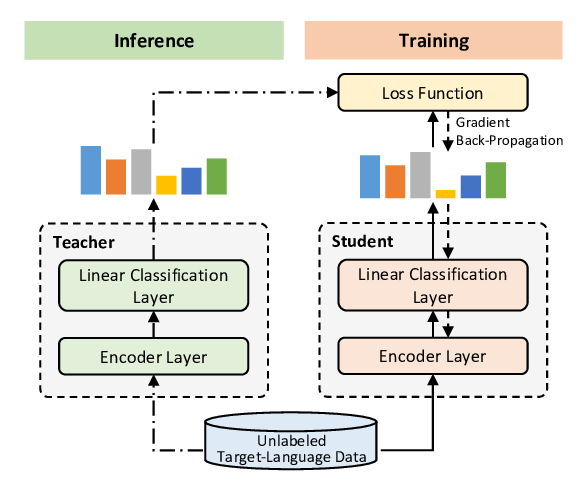

Single-/Multi-Source Cross-Lingual NER via Teacher-Student Learning on Unlabeled Data in Target Language

Qianhui Wu, Zijia Lin, Börje Karlsson, Jian-Guang Lou, Biqing Huang