Named Entity Recognition without Labelled Data: A Weak Supervision Approach

Pierre Lison, Jeremy Barnes, Aliaksandr Hubin, Samia Touileb

Information Extraction Long Paper

Session 2B: Jul 6 (09:00-10:00 GMT)

Session 3B: Jul 6 (13:00-14:00 GMT)

Abstract:

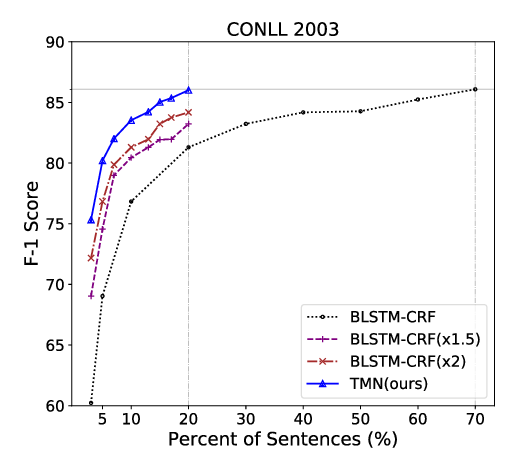

Named Entity Recognition (NER) performance often degrades rapidly when applied to target domains that differ from the texts observed during training. When in-domain labelled data is available, transfer learning techniques can be used to adapt existing NER models to the target domain. But what should one do when there is no hand-labelled data for the target domain? This paper presents a simple but powerful approach to learning NER models in the absence of labelled data through weak supervision. The approach relies on a broad spectrum of labelling functions to automatically annotate texts from the target domain. These annotations are then merged using a hidden Markov model, which captures the varying accuracies and confusions of the labelling functions. Finally, a sequence labelling model can be trained on this unified annotation. We evaluate the approach on two English datasets (CoNLL 2003 and news articles from Reuters and Bloomberg) and demonstrate an improvement of about 7 percentage points in entity-level F1 scores compared to an out-of-domain neural NER model.
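The pipeline described in the abstract (labelling functions, then HMM-based merging) can be illustrated with a minimal sketch. Everything below is an assumption made for illustration and is not taken from the paper: the three toy labelling functions, the reduced label set without BIO prefixes, and all probabilities, which are hand-set here whereas an HMM would normally have them estimated from the unlabelled data. The hidden states are the true labels, and each labelling function contributes one emission per token through its own confusion table.

```python
import math

LABELS = ["O", "PER", "ORG"]

# Hypothetical labelling functions: each returns one label per token.
def lf_gazetteer(tokens):
    known = {"Reuters": "ORG", "Bloomberg": "ORG"}
    return [known.get(t, "O") for t in tokens]

def lf_honorific(tokens):
    # The token following an honorific is guessed to be a person.
    out = ["O"] * len(tokens)
    for i in range(1, len(tokens)):
        if tokens[i - 1] in {"Mr.", "Ms.", "Dr."}:
            out[i] = "PER"
    return out

def lf_capitalised(tokens):
    # Noisy heuristic: every capitalised non-initial token is guessed to be ORG.
    return ["ORG" if i > 0 and t[:1].isupper() else "O"
            for i, t in enumerate(tokens)]

# P(observed label | true label); rows and columns follow LABELS order.
# Hand-set for illustration. Note that lf_capitalised confuses PER with ORG.
CONFUSION = {
    lf_gazetteer:   [[0.98, 0.01, 0.01], [0.98, 0.01, 0.01], [0.50, 0.01, 0.49]],
    lf_honorific:   [[0.98, 0.01, 0.01], [0.40, 0.59, 0.01], [0.98, 0.01, 0.01]],
    lf_capitalised: [[0.90, 0.01, 0.09], [0.20, 0.01, 0.79], [0.20, 0.01, 0.79]],
}
START = [0.8, 0.1, 0.1]                                       # P(first label)
TRANS = [[0.8, 0.1, 0.1], [0.5, 0.4, 0.1], [0.5, 0.1, 0.4]]   # P(next | previous)

def aggregate(tokens):
    """Viterbi decoding of the most likely true label sequence."""
    if not tokens:
        return []
    votes = {lf: lf(tokens) for lf in CONFUSION}

    def log_emit(i, y):
        # Labelling functions are treated as conditionally independent given y.
        return sum(math.log(CONFUSION[lf][y][LABELS.index(out[i])])
                   for lf, out in votes.items())

    k = len(LABELS)
    score = [[math.log(START[y]) + log_emit(0, y) for y in range(k)]]
    back = []
    for i in range(1, len(tokens)):
        row, ptr = [], []
        for y in range(k):
            prev = max(range(k),
                       key=lambda p: score[-1][p] + math.log(TRANS[p][y]))
            row.append(score[-1][prev] + math.log(TRANS[prev][y]) + log_emit(i, y))
            ptr.append(prev)
        score.append(row)
        back.append(ptr)
    # Follow the back-pointers from the best final state.
    y = max(range(k), key=lambda s: score[-1][s])
    path = [y]
    for ptr in reversed(back):
        y = ptr[y]
        path.append(y)
    return [LABELS[s] for s in reversed(path)]

print(aggregate(["Mr.", "Lison", "works", "at", "Reuters"]))
```

In this example `lf_capitalised` votes ORG for "Lison", but its confusion table says it often emits ORG for true persons, so the agreement with `lf_honorific` still yields PER. The merged labels would then serve as training targets for a sequence labelling model, a step this sketch leaves out.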

Similar Papers

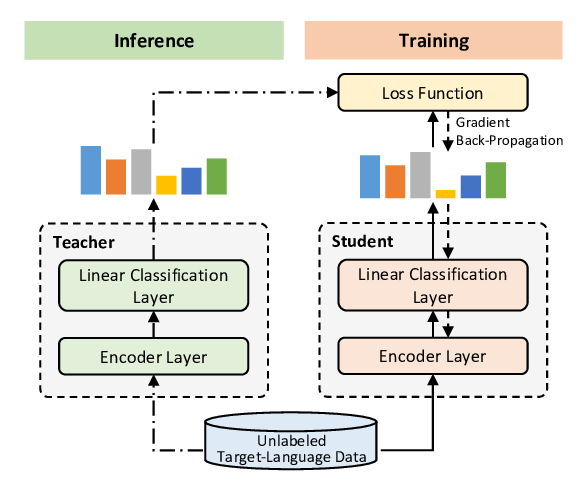

Single-/Multi-Source Cross-Lingual NER via Teacher-Student Learning on Unlabeled Data in Target Language

Qianhui Wu, Zijia Lin, Börje Karlsson, Jian-Guang Lou, Biqing Huang

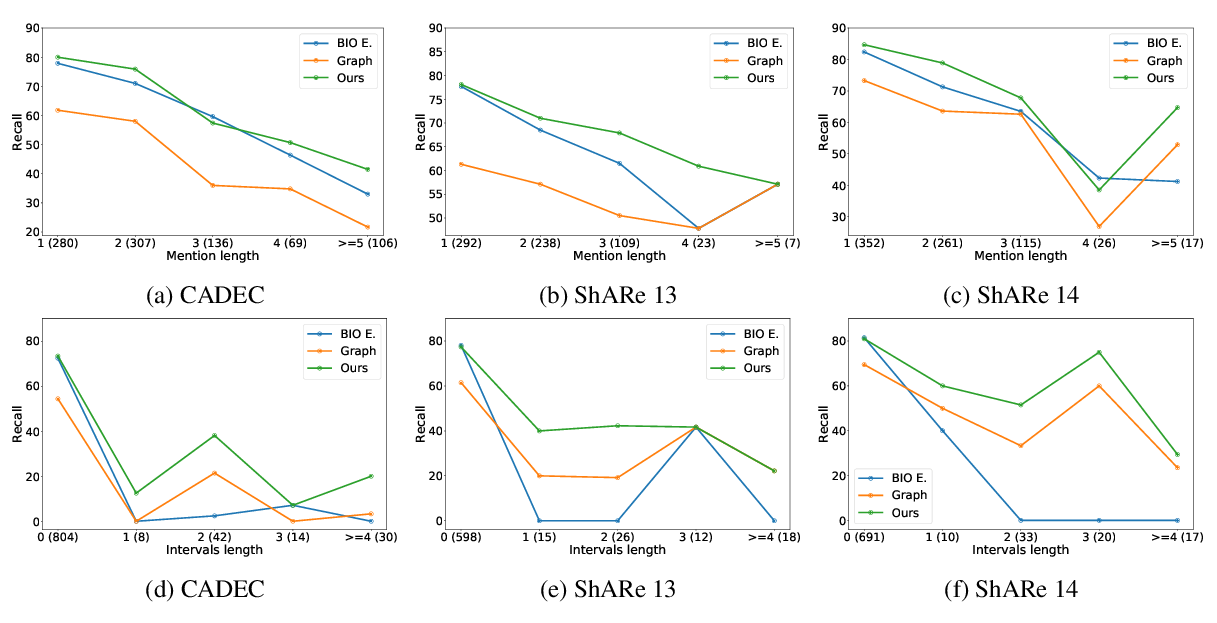

An Effective Transition-based Model for Discontinuous NER

Xiang Dai, Sarvnaz Karimi, Ben Hachey, Cecile Paris

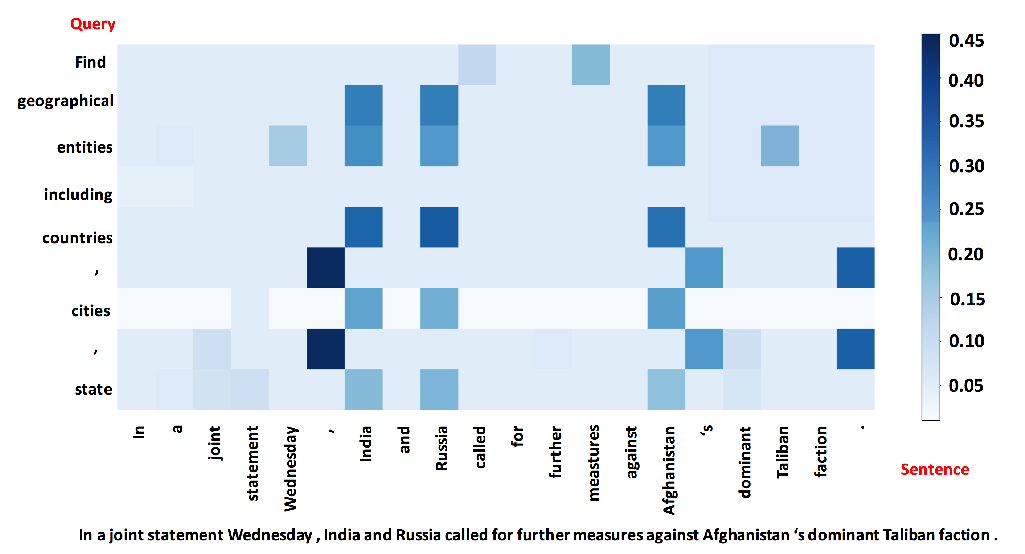

A Unified MRC Framework for Named Entity Recognition

Xiaoya Li, Jingrong Feng, Yuxian Meng, Qinghong Han, Fei Wu, Jiwei Li

TriggerNER: Learning with Entity Triggers as Explanations for Named Entity Recognition

Bill Yuchen Lin, Dong-Ho Lee, Ming Shen, Ryan Moreno, Xiao Huang, Prashant Shiralkar, Xiang Ren