Bilingual Dictionary Based Neural Machine Translation without Using Parallel Sentences

Xiangyu Duan, Baijun Ji, Hao Jia, Min Tan, Min Zhang, Boxing Chen, Weihua Luo, Yue Zhang

Machine Translation Long Paper

Session 2B: Jul 6

(09:00-10:00 GMT)

Session 3B: Jul 6

(13:00-14:00 GMT)

Abstract:

In this paper, we propose a new task of machine translation (MT), which is based on no parallel sentences but can refer to a ground-truth bilingual dictionary. Motivated by the ability of a monolingual speaker learning to translate via looking up the bilingual dictionary, we propose the task to see how much potential an MT system can attain using the bilingual dictionary and large scale monolingual corpora, while is independent on parallel sentences. We propose anchored training (AT) to tackle the task. AT uses the bilingual dictionary to establish anchoring points for closing the gap between source language and target language. Experiments on various language pairs show that our approaches are significantly better than various baselines, including dictionary-based word-by-word translation, dictionary-supervised cross-lingual word embedding transformation, and unsupervised MT. On distant language pairs that are hard for unsupervised MT to perform well, AT performs remarkably better, achieving performances comparable to supervised SMT trained on more than 4M parallel sentences.

You can open the

pre-recorded video

in a separate window.

NOTE: The SlidesLive video may display a random order of the authors.

The correct author list is shown at the top of this webpage.

Similar Papers

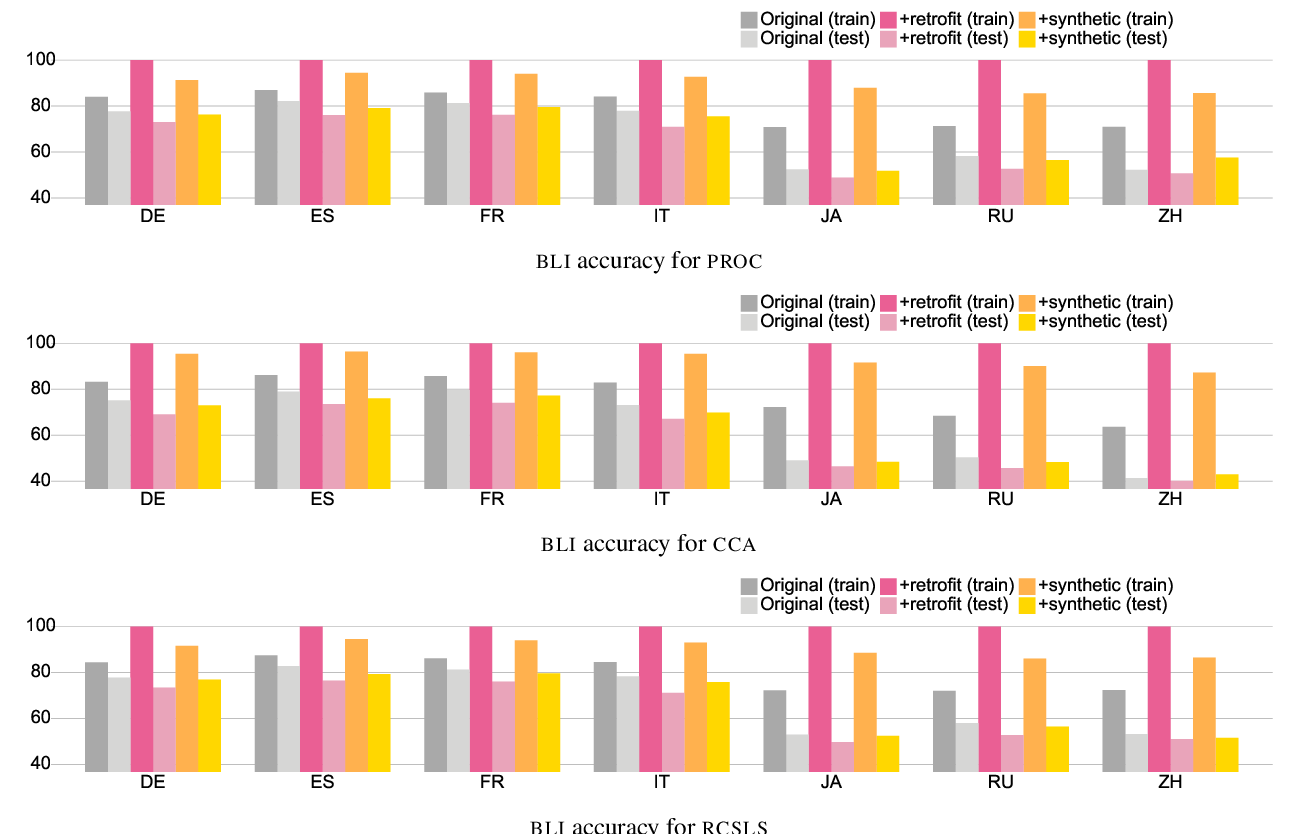

Why Overfitting Isn't Always Bad: Retrofitting Cross-Lingual Word Embeddings to Dictionaries

Mozhi Zhang, Yoshinari Fujinuma, Michael J. Paul, Jordan Boyd-Graber,

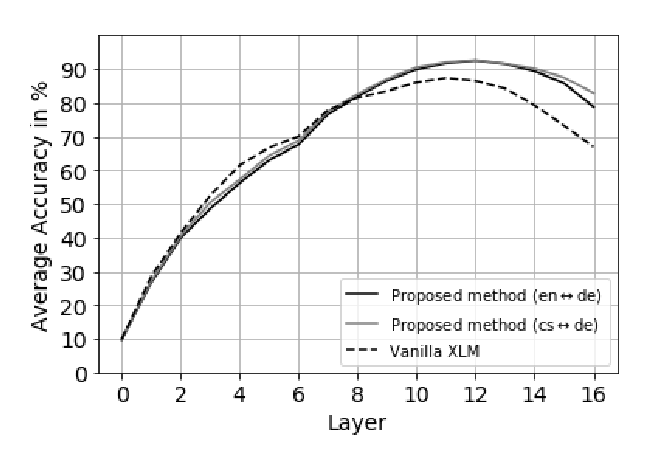

Unsupervised Multilingual Sentence Embeddings for Parallel Corpus Mining

Ivana Kvapilíková, Mikel Artetxe, Gorka Labaka, Eneko Agirre, Ondřej Bojar,

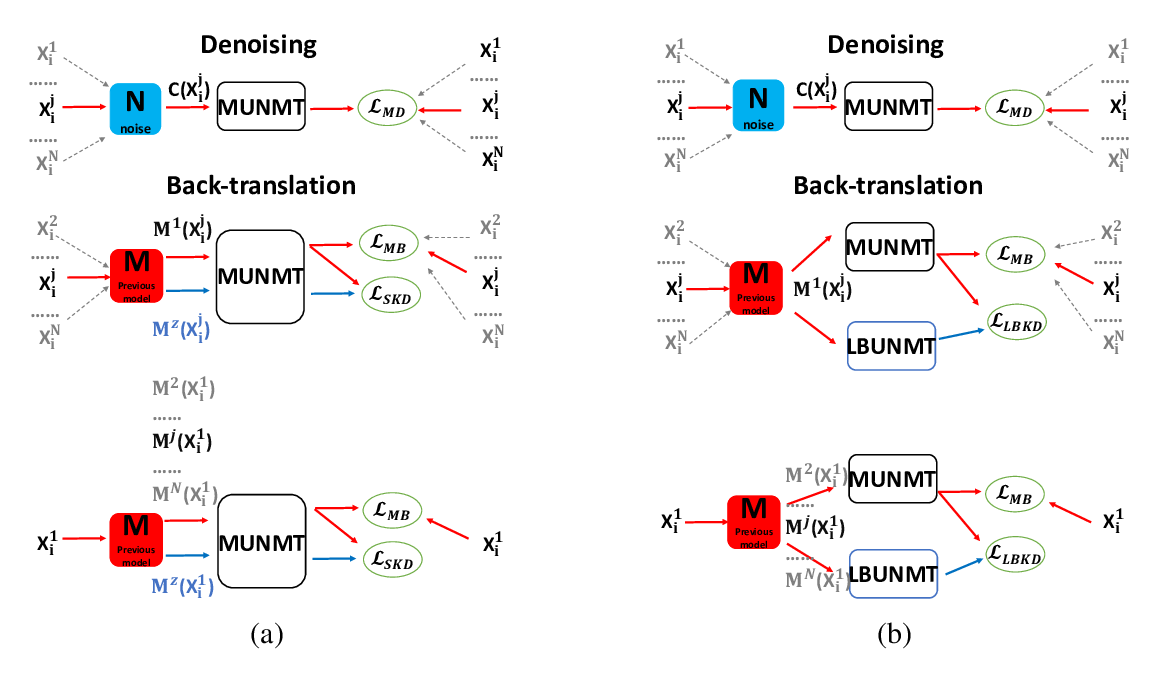

Knowledge Distillation for Multilingual Unsupervised Neural Machine Translation

Haipeng Sun, Rui Wang, Kehai Chen, Masao Utiyama, Eiichiro Sumita, Tiejun Zhao,

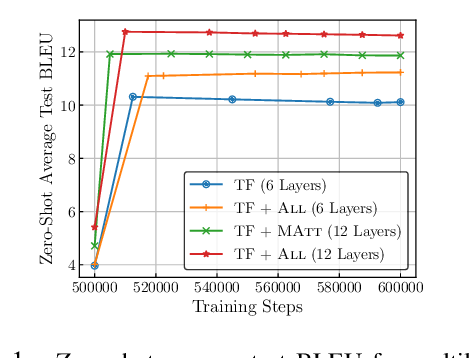

Improving Massively Multilingual Neural Machine Translation and Zero-Shot Translation

Biao Zhang, Philip Williams, Ivan Titov, Rico Sennrich,