How Does Selective Mechanism Improve Self-Attention Networks?

Xinwei Geng, Longyue Wang, Xing Wang, Bing Qin, Ting Liu, Zhaopeng Tu

Machine Learning for NLP Long Paper

Session 6A: Jul 7

(05:00-06:00 GMT)

Session 7A: Jul 7

(08:00-09:00 GMT)

Abstract:

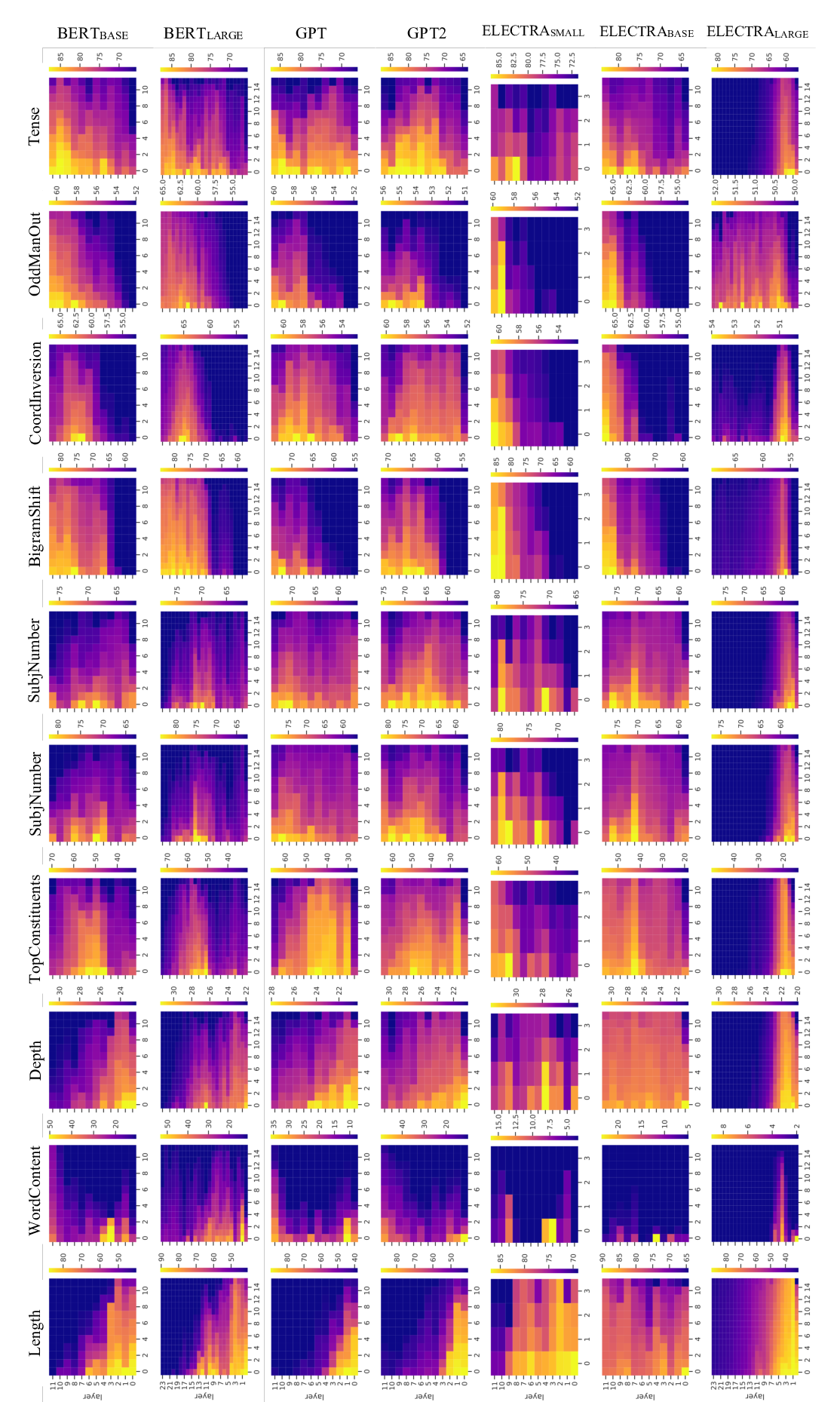

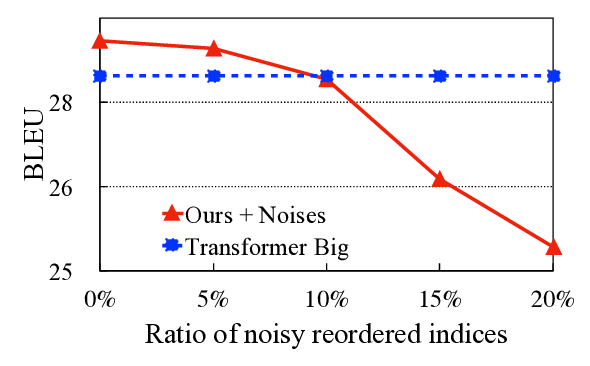

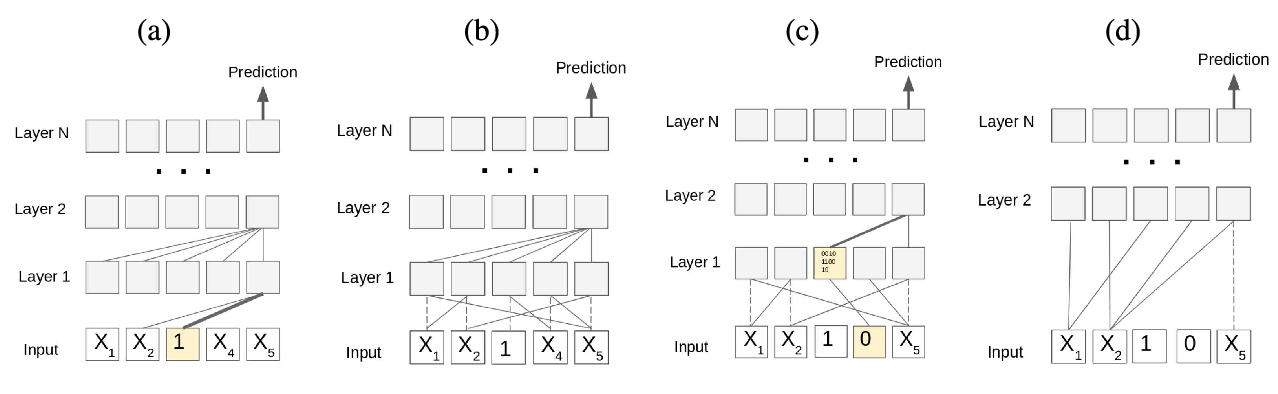

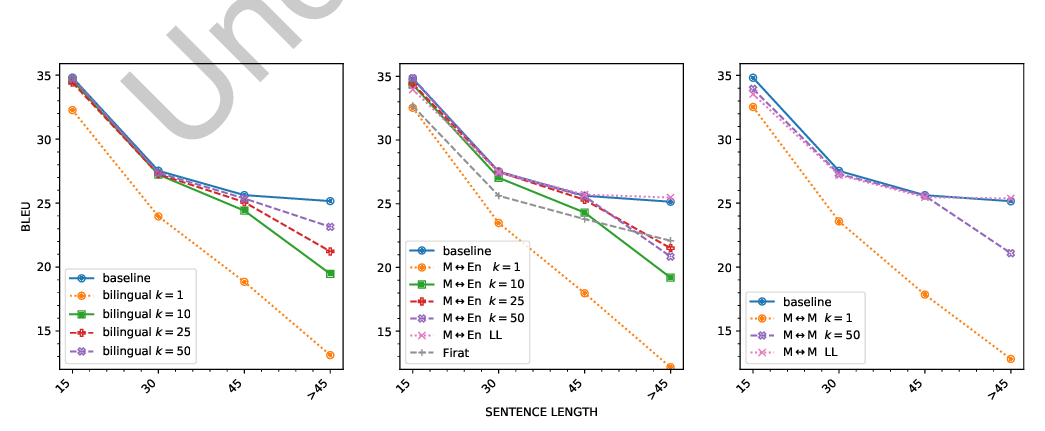

Self-attention networks (SANs) with selective mechanism has produced substantial improvements in various NLP tasks by concentrating on a subset of input words. However, the underlying reasons for their strong performance have not been well explained. In this paper, we bridge the gap by assessing the strengths of selective SANs (SSANs), which are implemented with a flexible and universal Gumbel-Softmax. Experimental results on several representative NLP tasks, including natural language inference, semantic role labelling, and machine translation, show that SSANs consistently outperform the standard SANs. Through well-designed probing experiments, we empirically validate that the improvement of SSANs can be attributed in part to mitigating two commonly-cited weaknesses of SANs: word order encoding and structure modeling. Specifically, the selective mechanism improves SANs by paying more attention to content words that contribute to the meaning of the sentence.

You can open the

pre-recorded video

in a separate window.

NOTE: The SlidesLive video may display a random order of the authors.

The correct author list is shown at the top of this webpage.

Similar Papers

A Systematic Study of Inner-Attention-Based Sentence Representations in Multilingual Neural Machine Translation

Raúl Vázquez, Alessandro Raganato, Mathias Creutz, Jörg Tiedemann,

Roles and Utilization of Attention Heads in Transformer-based Neural Language Models

Jae-young Jo, Sung-Hyon Myaeng,