On the Inference Calibration of Neural Machine Translation

Shuo Wang, Zhaopeng Tu, Shuming Shi, Yang Liu

Machine Translation Long Paper

Session 6A: Jul 7 (05:00-06:00 GMT)

Session 7A: Jul 7 (08:00-09:00 GMT)

Abstract:

Confidence calibration, which aims to make a model's predicted confidence match the true likelihood that its predictions are correct, is important for neural machine translation (NMT) because it can provide useful indicators of translation errors in the generated output. Prior studies have shown that NMT models trained with label smoothing are well calibrated on the ground-truth training data. However, we find that miscalibration remains a severe challenge for NMT during inference because of the discrepancy between training and inference. Through carefully designed experiments on three language pairs, our work provides in-depth analyses of the correlation between calibration and translation performance, as well as of the linguistic properties of miscalibration. It also reports a number of findings that may help researchers better analyze, understand and improve NMT models. Based on these observations, we further propose a new graduated label smoothing method that improves both inference calibration and translation performance.
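Calibration is commonly quantified with the expected calibration error (ECE). Predictions are grouped into confidence bins, and ECE is the size-weighted average gap between each bin's accuracy and its mean confidence. The following is a minimal sketch that assumes token-level confidences (for example, the probability the model assigned to each generated token) and binary correctness labels have already been obtained, for instance by matching output tokens against a reference. The function name `expected_calibration_error` and the 10 equal-width bins are illustrative assumptions. They are not necessarily the exact inference-time procedure used in the paper.

```python
from typing import Sequence


def expected_calibration_error(
    confidences: Sequence[float],
    correct: Sequence[bool],
    n_bins: int = 10,
) -> float:
    """Equal-width-bin ECE over token-level predictions (illustrative sketch).

    confidences: model probability for each predicted token, in [0, 1].
    correct: whether each predicted token was judged correct (assumed given).
    """
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    n = len(confidences)
    if n == 0:
        return 0.0

    # Assign each prediction to a bin; a confidence of exactly 1.0 goes to the last bin.
    bins: list[list[tuple[float, bool]]] = [[] for _ in range(n_bins)]
    for p, c in zip(confidences, correct):
        idx = min(int(p * n_bins), n_bins - 1)
        bins[idx].append((p, c))

    ece = 0.0
    for b in bins:
        if not b:
            continue
        avg_conf = sum(p for p, _ in b) / len(b)      # mean confidence in the bin
        acc = sum(1 for _, c in b if c) / len(b)      # fraction correct in the bin
        ece += (len(b) / n) * abs(acc - avg_conf)     # weight gap by bin size
    return ece


if __name__ == "__main__":
    # Hypothetical tokens: two at 0.95 confidence (one correct), two at 0.65 (both correct).
    print(expected_calibration_error([0.95, 0.95, 0.65, 0.65], [True, False, True, True]))
```

In this toy input, the 0.95 bin is over-confident (accuracy 0.5) and the 0.65 bin is under-confident (accuracy 1.0). Together they give an ECE of about 0.4. A perfectly calibrated model would score 0.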


Similar Papers

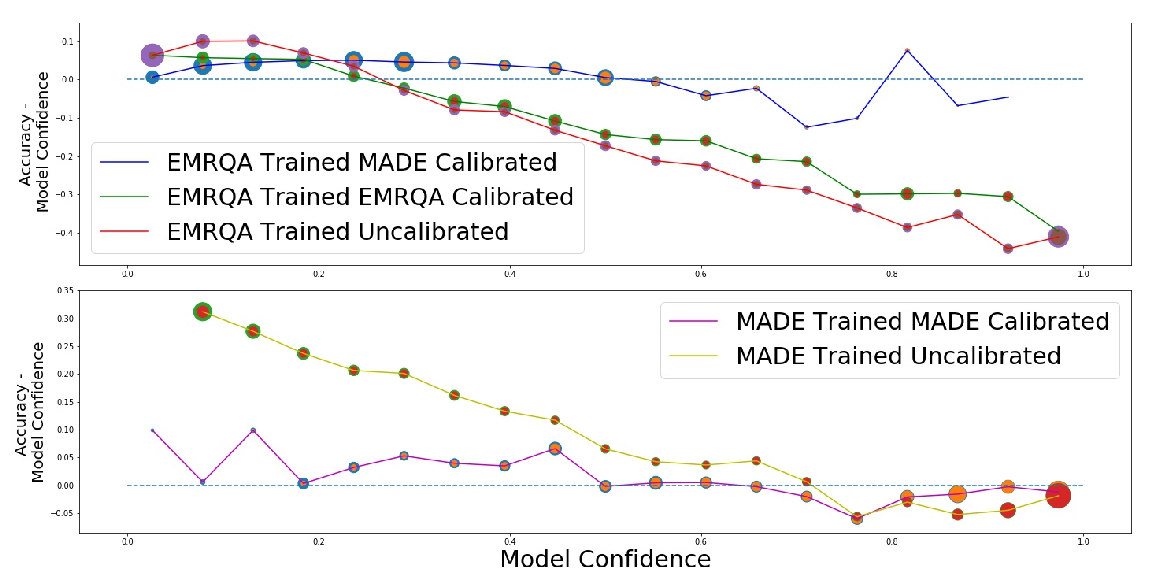

Calibrating Structured Output Predictors for Natural Language Processing

Abhyuday Jagannatha, Hong Yu

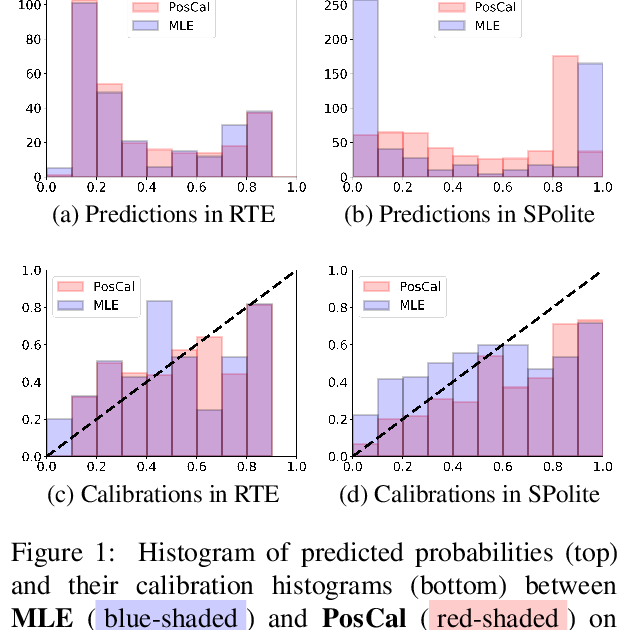

Posterior Calibrated Training on Sentence Classification Tasks

Taehee Jung, Dongyeop Kang, Hua Cheng, Lucas Mentch, Thomas Schaaf

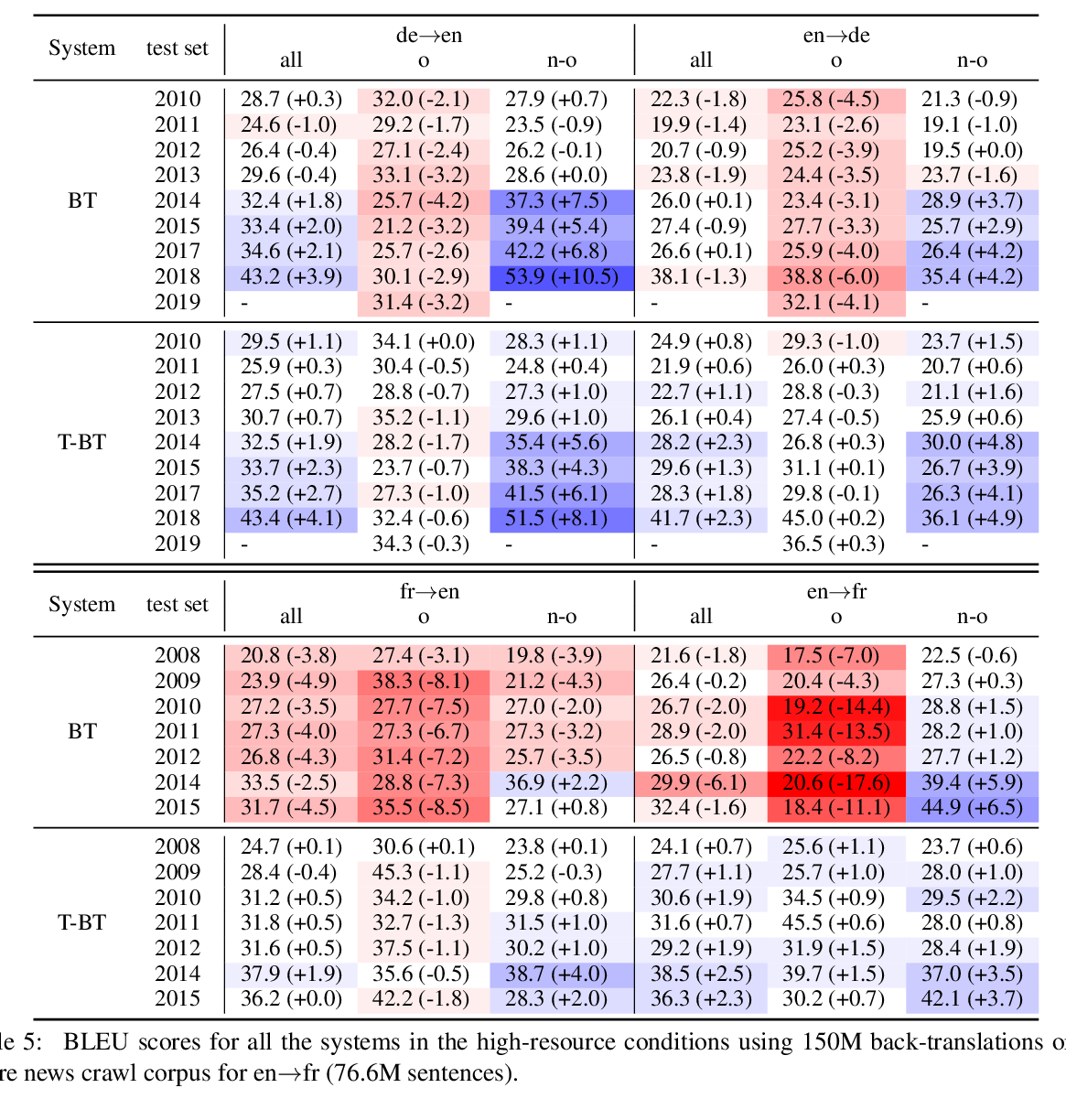

Tagged Back-translation Revisited: Why Does It Really Work?

Benjamin Marie, Raphael Rubino, Atsushi Fujita

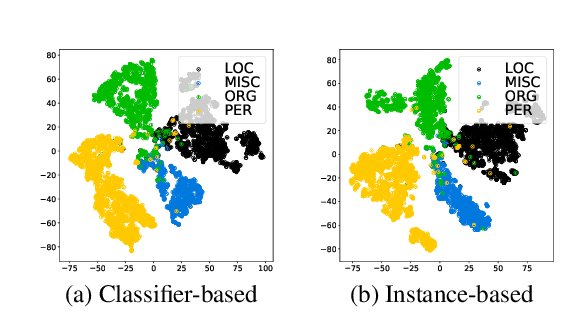

Instance-Based Learning of Span Representations: A Case Study through Named Entity Recognition

Hiroki Ouchi, Jun Suzuki, Sosuke Kobayashi, Sho Yokoi, Tatsuki Kuribayashi, Ryuto Konno, Kentaro Inui