On the Encoder-Decoder Incompatibility in Variational Text Modeling and Beyond

Chen Wu, Prince Zizhuang Wang, William Yang Wang

Machine Learning for NLP Long Paper

Session 6B: Jul 7 (06:00-07:00 GMT)

Session 8B: Jul 7 (13:00-14:00 GMT)

Abstract:

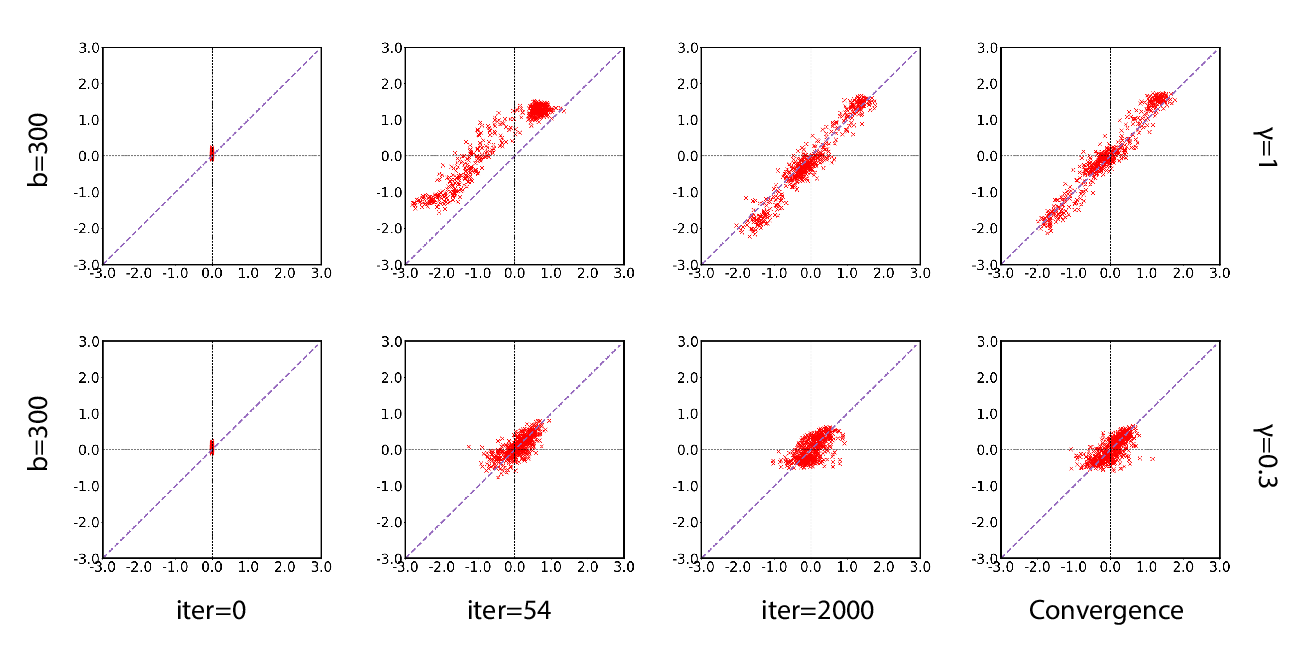

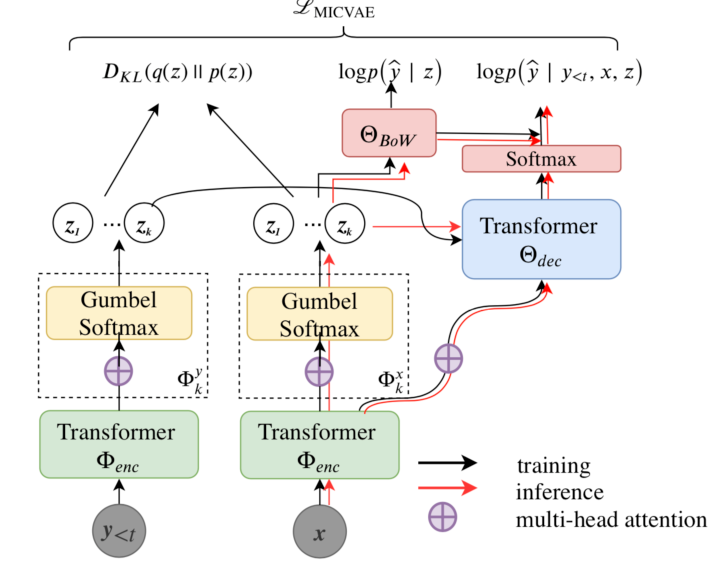

Variational autoencoders (VAEs) combine latent variables with amortized variational inference, whose optimization usually converges into a trivial local optimum termed posterior collapse, especially in text modeling. By tracking the optimization dynamics, we observe the encoder-decoder incompatibility that leads to poor parameterizations of the data manifold. We argue that the trivial local optimum may be avoided by improving the encoder and decoder parameterizations since the posterior network is part of a transition map between them. To this end, we propose Coupled-VAE, which couples a VAE model with a deterministic autoencoder with the same structure and improves the encoder and decoder parameterizations via encoder weight sharing and decoder signal matching. We apply the proposed Coupled-VAE approach to various VAE models with different regularization, posterior family, decoder structure, and optimization strategy. Experiments on benchmark datasets (i.e., PTB, Yelp, and Yahoo) show consistently improved results in terms of probability estimation and richness of the latent space. We also generalize our method to conditional language modeling and propose Coupled-CVAE, which largely improves the diversity of dialogue generation on the Switchboard dataset.
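
As a rough illustration of what posterior collapse means in practice, the sketch below computes the standard closed-form KL divergence between a diagonal Gaussian posterior and a standard normal prior. This is the textbook KL regularizer of a Gaussian VAE, not the Coupled-VAE objective itself; the function name and the list-based inputs are assumptions made for this example. When the encoder's output matches the prior for every input (zero mean, unit variance), the KL term is exactly zero and the latent code carries no information about the text.

```python
import math


def gaussian_kl_to_standard_normal(mu, logvar):
    """KL( N(mu, diag(exp(logvar))) || N(0, I) ) for one example.

    Hypothetical helper for illustration only: `mu` and `logvar` are
    equal-length lists of floats, as an encoder might produce.
    """
    if len(mu) != len(logvar):
        raise ValueError("mu and logvar must have the same length")
    # Closed form per dimension: 0.5 * (mu^2 + sigma^2 - 1 - log sigma^2)
    return 0.5 * sum(
        m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, logvar)
    )


# A collapsed posterior equals the prior, so the KL term vanishes.
collapsed = gaussian_kl_to_standard_normal([0.0, 0.0], [0.0, 0.0])

# A posterior that moves away from the prior has a strictly positive KL.
informative = gaussian_kl_to_standard_normal([1.0], [0.0])
```

Monitoring this quantity during training is one common way to detect collapse: a KL term that stays near zero suggests the decoder is ignoring the latent variable.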

Similar Papers

A Batch Normalized Inference Network Keeps the KL Vanishing Away

Qile Zhu, Wei Bi, Xiaojiang Liu, Xiyao Ma, Xiaolin Li, Dapeng Wu

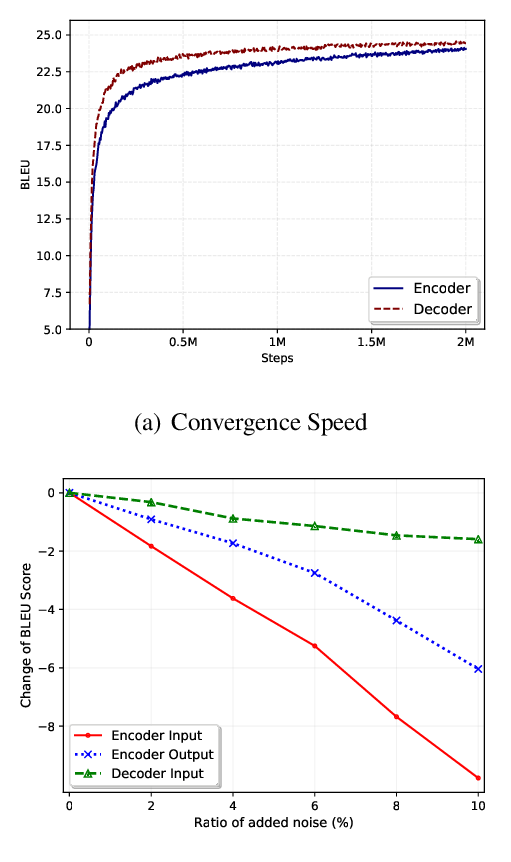

Addressing Posterior Collapse with Mutual Information for Improved Variational Neural Machine Translation

Arya D. McCarthy, Xian Li, Jiatao Gu, Ning Dong

Variational Neural Machine Translation with Normalizing Flows

Hendra Setiawan, Matthias Sperber, Udhyakumar Nallasamy, Matthias Paulik

Jointly Masked Sequence-to-Sequence Model for Non-Autoregressive Neural Machine Translation

Junliang Guo, Linli Xu, Enhong Chen