SAFER: A Structure-free Approach for Certified Robustness to Adversarial Word Substitutions

Mao Ye, Chengyue Gong, Qiang Liu

Machine Learning for NLP Short Paper

Session 6B: Jul 7 (06:00-07:00 GMT)

Session 10B: Jul 7 (21:00-22:00 GMT)

Abstract:

State-of-the-art NLP models can often be fooled by transformations that are imperceptible to humans, such as synonymous word substitution. For security reasons, it is critically important to develop models with certified robustness, which provably guarantee that the prediction cannot be altered by any possible synonymous word substitution. In this work, we propose a certified robust method based on a new randomized smoothing technique, which constructs a stochastic ensemble by applying random word substitutions to the input sentences and leverages the statistical properties of the ensemble to provably certify the robustness. Our method is simple and structure-free in that it requires only black-box queries of the model outputs, and hence can be applied to any pre-trained model (such as BERT) and any type of model (word-level or subword-level). Our method significantly outperforms recent state-of-the-art methods for certified robustness on both IMDB and Amazon text classification tasks. To the best of our knowledge, this is the first work to achieve certified robustness on large systems such as BERT with practically meaningful certified accuracy.
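
The stochastic ensemble described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the function names (`perturb`, `smoothed_predict`), the uniform sampling rule, the toy classifier, and the tiny synonym table are all assumptions made for illustration. The sketch covers only the black-box voting step; it does not compute the robustness certificate itself, which the paper derives from the statistical properties of the ensemble.

```python
import random
from collections import Counter
from typing import Callable, Dict, List, Tuple


def perturb(words: List[str], synonyms: Dict[str, List[str]],
            rng: random.Random) -> List[str]:
    """Replace each word by a uniform draw from its perturbation set.

    The perturbation set here is assumed to be the word itself plus
    its listed synonyms; words without an entry are left unchanged.
    """
    return [rng.choice([w] + synonyms.get(w, [])) for w in words]


def smoothed_predict(classifier: Callable[[str], str], sentence: str,
                     synonyms: Dict[str, List[str]],
                     n_samples: int = 200, seed: int = 0) -> Tuple[str, float]:
    """Majority vote of a black-box classifier over random substitutions.

    Returns the winning label and its empirical vote fraction. Only
    the classifier's outputs are queried, never its internals.
    """
    rng = random.Random(seed)
    words = sentence.split()
    votes = Counter(
        classifier(" ".join(perturb(words, synonyms, rng)))
        for _ in range(n_samples)
    )
    label, count = votes.most_common(1)[0]
    return label, count / n_samples


# Hypothetical stand-ins for a real model and a real synonym resource.
POSITIVE_WORDS = {"good", "great", "fine"}
SYNONYMS = {"good": ["great", "fine"], "movie": ["film"]}


def toy_classifier(text: str) -> str:
    """Label a sentence 'pos' if it contains any positive word."""
    return "pos" if POSITIVE_WORDS & set(text.split()) else "neg"


label, vote_fraction = smoothed_predict(toy_classifier, "a good movie", SYNONYMS)
```

Because `smoothed_predict` only calls `classifier` on strings, the same loop could in principle wrap any pre-trained model, which is the sense in which the approach is structure-free. In a real setting the vote fraction would be a noisy estimate, and a certificate would need a statistically sound bound on it rather than the raw value.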

Similar Papers

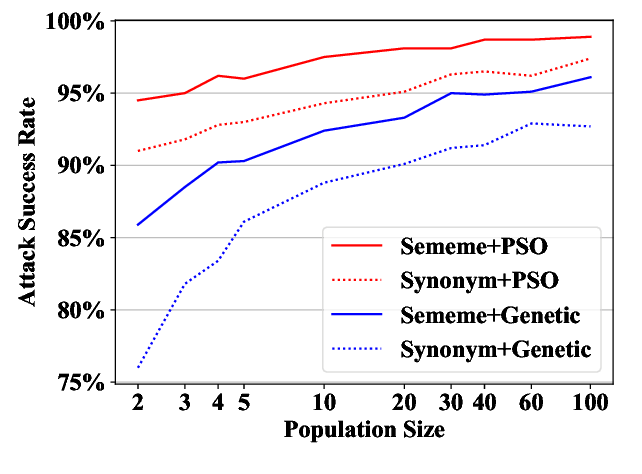

Word-level Textual Adversarial Attacking as Combinatorial Optimization

Yuan Zang, Fanchao Qi, Chenghao Yang, Zhiyuan Liu, Meng Zhang, Qun Liu, Maosong Sun

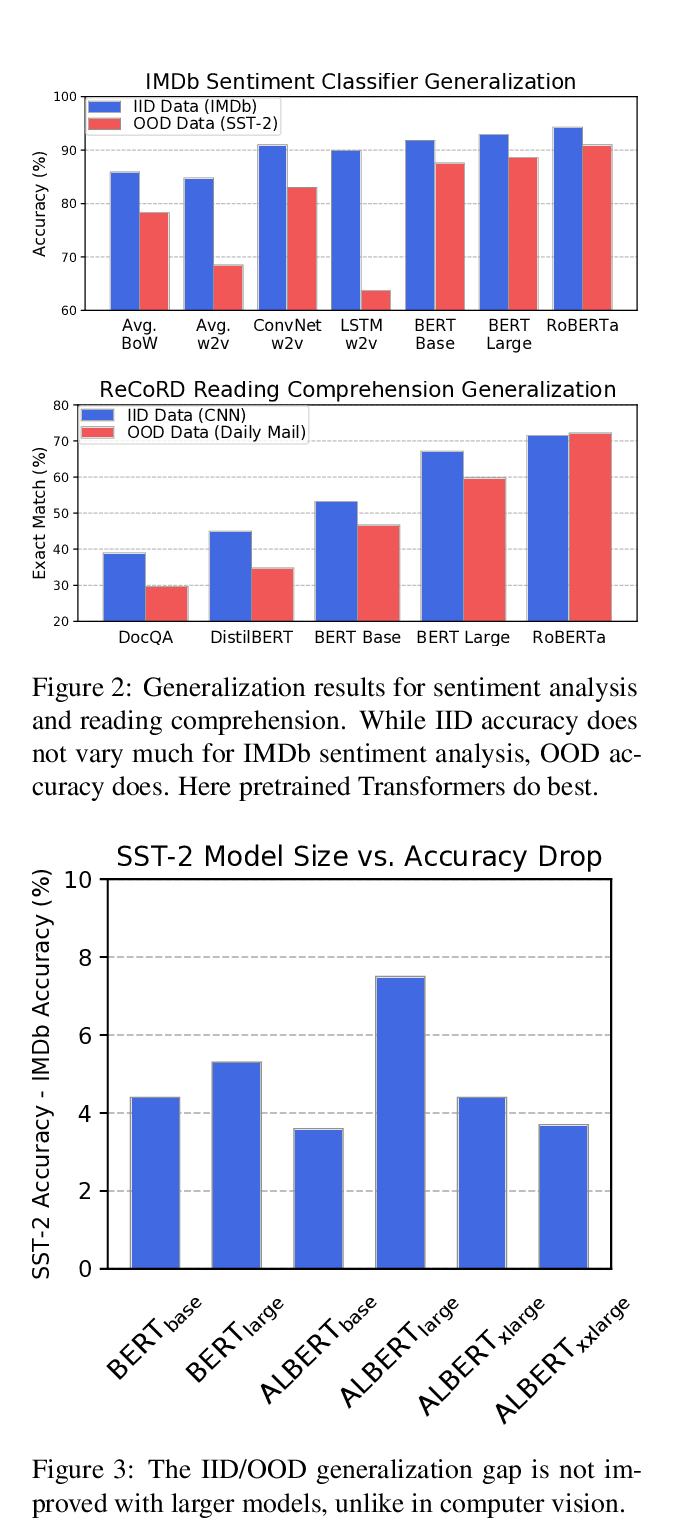

Pretrained Transformers Improve Out-of-Distribution Robustness

Dan Hendrycks, Xiaoyuan Liu, Eric Wallace, Adam Dziedzic, Rishabh Krishnan, Dawn Song

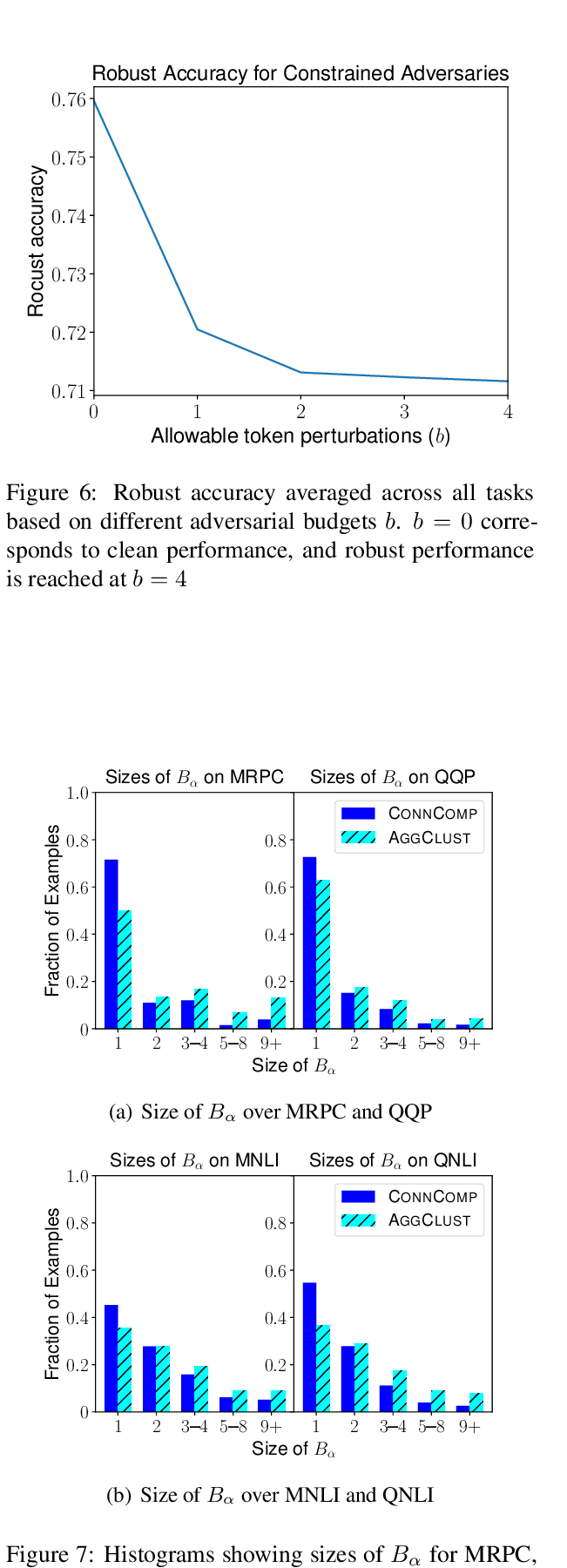

Robust Encodings: A Framework for Combating Adversarial Typos

Erik Jones, Robin Jia, Aditi Raghunathan, Percy Liang

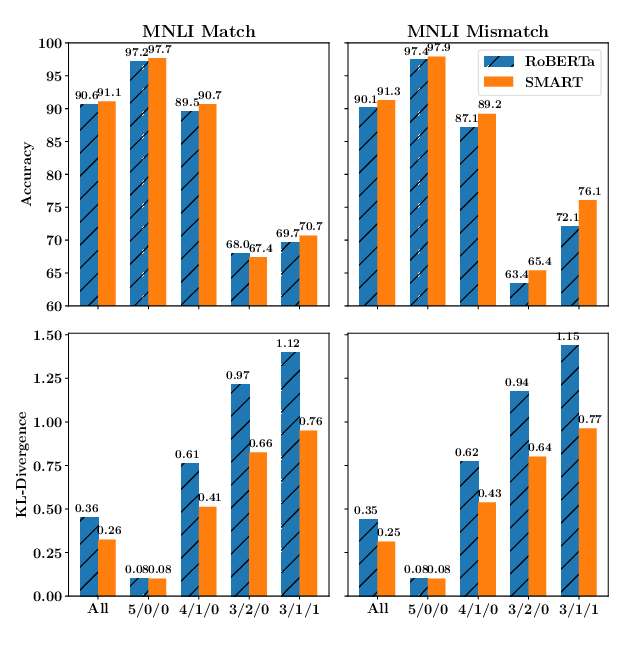

SMART: Robust and Efficient Fine-Tuning for Pre-trained Natural Language Models through Principled Regularized Optimization

Haoming Jiang, Pengcheng He, Weizhu Chen, Xiaodong Liu, Jianfeng Gao, Tuo Zhao