BiRRE: Learning Bidirectional Residual Relation Embeddings for Supervised Hypernymy Detection

Chengyu Wang, Xiaofeng He

Semantics: Lexical

Long Paper

Session 6B: Jul 7 (06:00-07:00 GMT)

Session 7A: Jul 7 (08:00-09:00 GMT)

Abstract:

The hypernymy detection task has been addressed under various frameworks. Previously, the design of unsupervised hypernymy scores has been extensively studied. In contrast, supervised classifiers, especially distributional models, leverage the global contexts of terms to make predictions, but are more likely to suffer from "lexical memorization". In this work, we revisit supervised distributional models for hypernymy detection. Rather than taking the embeddings of two terms as classification inputs, we introduce a representation learning framework named Bidirectional Residual Relation Embeddings (BiRRE). In this model, a term pair is represented by a BiRRE vector, which serves as the feature vector for hypernymy classification and models the possibility of one term being mapped to another in the embedding space by hypernymy relations. A Latent Projection Model with Negative Regularization (LPMNR) is proposed to simulate how hypernyms and hyponyms are generated by neural language models, and to generate BiRRE vectors based on bidirectional residuals of projections. Experiments verify that BiRRE outperforms strong baselines over various evaluation frameworks.
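As a rough illustration of what "bidirectional residuals of projections" could look like, the sketch below builds a pair feature from two projection residuals. It is not the authors' LPMNR: the plain linear projections, the matrices `to_hyper` and `to_hypo`, and the function names are all assumptions made for this example, and the abstract does not specify how the projections are learned or how negative regularization enters.

```python
from typing import List, Sequence

Vector = Sequence[float]
Matrix = Sequence[Sequence[float]]


def project(matrix: Matrix, vec: Vector) -> List[float]:
    """Apply a linear projection (matrix-vector product)."""
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]


def bidirectional_residuals(
    hypo: Vector,      # embedding of the candidate hyponym
    hyper: Vector,     # embedding of the candidate hypernym
    to_hyper: Matrix,  # hypothetical hyponym -> hypernym projection
    to_hypo: Matrix,   # hypothetical hypernym -> hyponym projection
) -> List[float]:
    """Concatenate the residuals of projecting in both directions.

    Assumption: each residual is the target embedding minus the projected
    source embedding. Small residuals mean the projection maps one term
    close to the other.
    """
    forward = [t - p for t, p in zip(hyper, project(to_hyper, hypo))]
    backward = [t - p for t, p in zip(hypo, project(to_hypo, hyper))]
    return forward + backward
```

Under these assumptions, the concatenated vector, rather than the two raw term embeddings, would be passed to a downstream classifier as the pair's features.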

Similar Papers

Learning Lexical Subspaces in a Distributional Vector Space

Kushal Arora, Aishik Chakraborty, Jackie Chi Kit Cheung

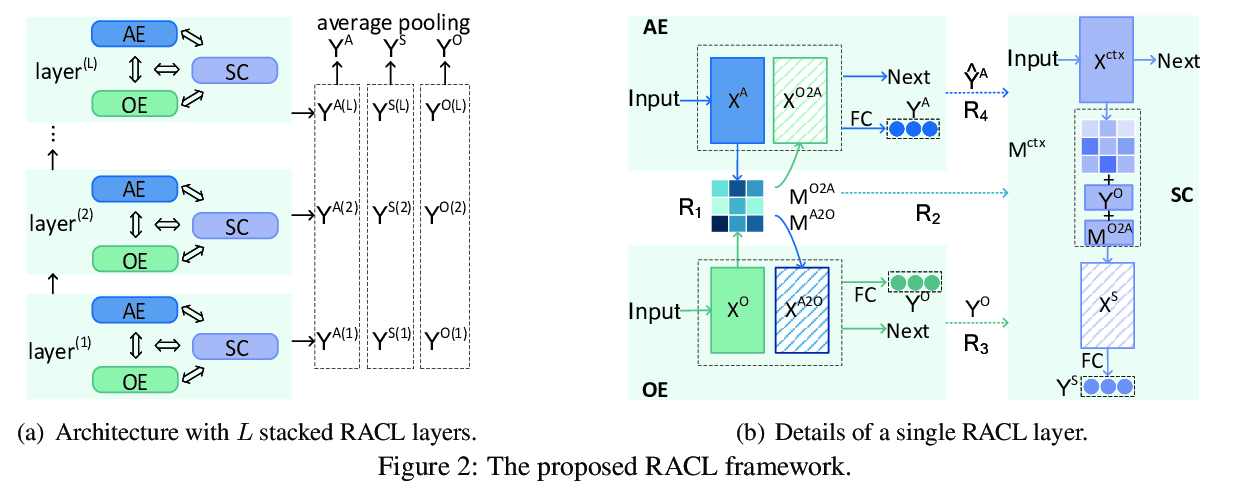

Relation-Aware Collaborative Learning for Unified Aspect-Based Sentiment Analysis

Zhuang Chen, Tieyun Qian

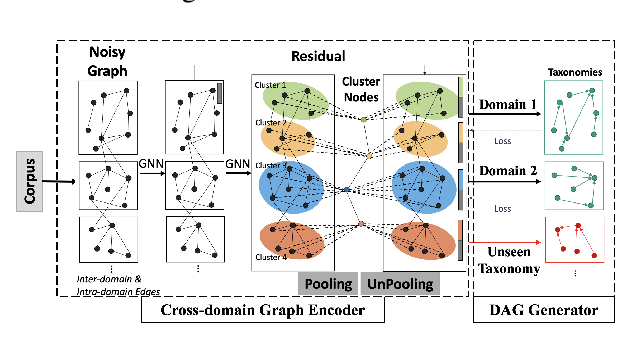

Taxonomy Construction of Unseen Domains via Graph-based Cross-Domain Knowledge Transfer

Chao Shang, Sarthak Dash, Md. Faisal Mahbub Chowdhury, Nandana Mihindukulasooriya, Alfio Gliozzo

Hypernymy Detection for Low-Resource Languages via Meta Learning

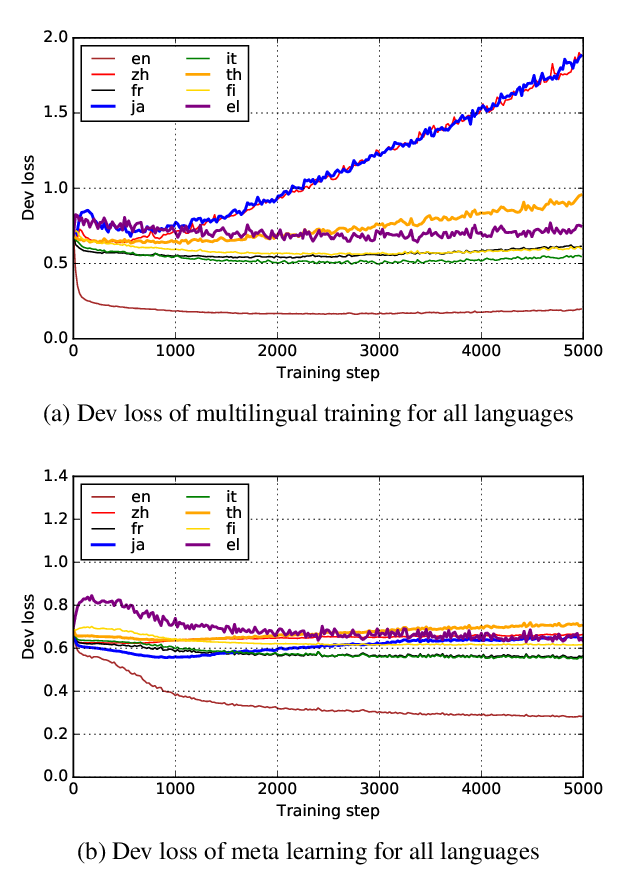

Changlong Yu, Jialong Han, Haisong Zhang, Wilfred Ng