Bayesian Hierarchical Words Representation Learning

Oren Barkan, Idan Rejwan, Avi Caciularu, Noam Koenigstein

Machine Learning for NLP Short Paper

Session 7A: Jul 7 (08:00-09:00 GMT)

Session 8B: Jul 7 (13:00-14:00 GMT)

Abstract:

This paper presents the Bayesian Hierarchical Words Representation (BHWR) learning algorithm. BHWR combines Variational Bayes word representation learning with semantic taxonomy modeling via hierarchical priors. By propagating relevant information between related words, BHWR uses the taxonomy to improve the quality of the learned representations. Evaluation on several linguistic datasets demonstrates the advantages of BHWR over suitable alternatives that perform Bayesian modeling with or without semantic priors. Finally, we show that BHWR produces better representations for rare words.
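
To make the idea of a hierarchical prior more concrete, here is a minimal sketch that is not the BHWR algorithm itself. It assumes a simple conjugate Gaussian model: a word vector is drawn around its parent's vector in the taxonomy, and each hypothetical observation is a noisy copy of the word vector. The function name `posterior_mean` and the parameters `prior_precision` and `noise_precision` are illustrative assumptions. BHWR itself uses Variational Bayes rather than this closed-form update.

```python
import numpy as np


def posterior_mean(parent_mean, observations, prior_precision=1.0, noise_precision=1.0):
    """Posterior mean of a word vector u_w under an assumed (illustrative) model:

        u_w ~ N(parent_mean, prior_precision^-1 * I)   # hierarchical prior from the taxonomy
        x_i ~ N(u_w, noise_precision^-1 * I)           # hypothetical noisy observations

    This is the standard conjugate Gaussian update, not BHWR's variational inference.
    """
    parent_mean = np.asarray(parent_mean, dtype=float)
    # Shape (n, d); an empty list becomes shape (0, d), i.e. a word with no observations.
    obs = np.asarray(observations, dtype=float).reshape(-1, parent_mean.shape[0])
    n = obs.shape[0]
    total_precision = prior_precision + n * noise_precision
    # Precision-weighted average of the parent's vector and the observed evidence.
    return (prior_precision * parent_mean + noise_precision * obs.sum(axis=0)) / total_precision


if __name__ == "__main__":
    parent = [1.0, 0.0]  # hypothetical vector of a parent concept, e.g. "animal"
    print(posterior_mean(parent, []))                 # unseen word: falls back to the parent
    print(posterior_mean(parent, [[3.0, 0.0]]))       # rare word: halfway between parent and data
    print(posterior_mean(parent, [[3.0, 0.0]] * 9))   # frequent word: dominated by its data
```

Under these assumptions, a word with a single observation ends up halfway between its parent and its data, while nine observations pull it much closer to the data. This shrinkage toward the parent is one simple way a taxonomy can stabilize representations of rare words.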

Similar Papers

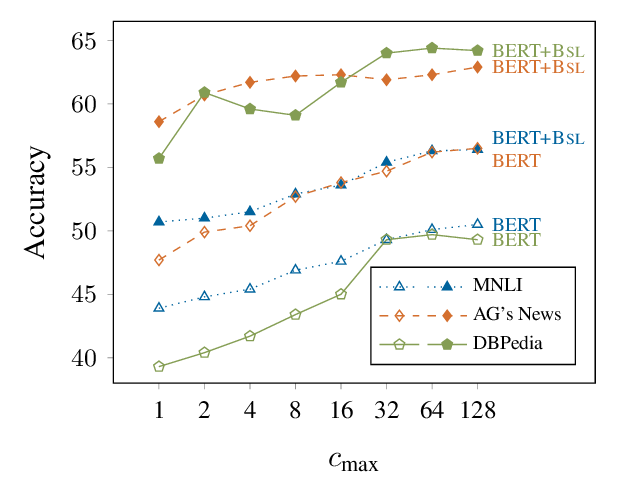

BERTRAM: Improved Word Embeddings Have Big Impact on Contextualized Model Performance

Timo Schick, Hinrich Schütze

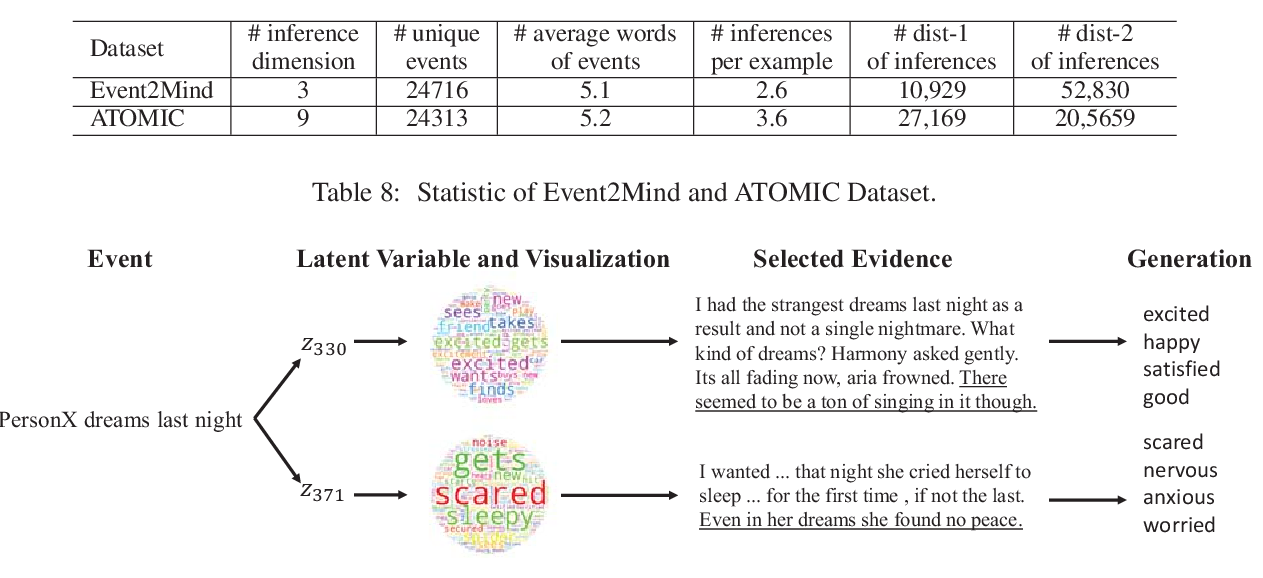

Evidence-Aware Inferential Text Generation with Vector Quantised Variational AutoEncoder

Daya Guo, Duyu Tang, Nan Duan, Jian Yin, Daxin Jiang, Ming Zhou

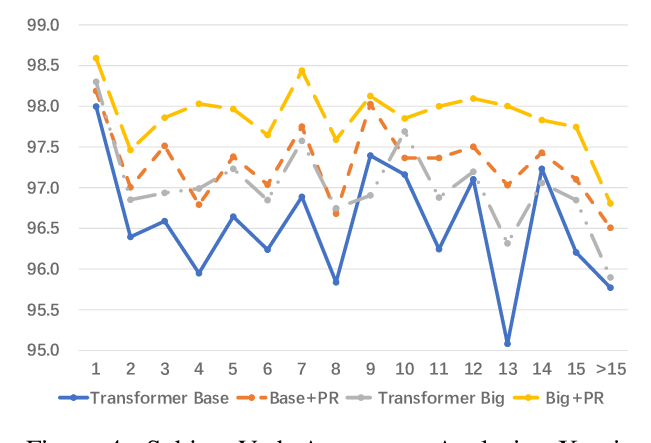

Learning Source Phrase Representations for Neural Machine Translation

Hongfei Xu, Josef van Genabith, Deyi Xiong, Qiuhui Liu, Jingyi Zhang

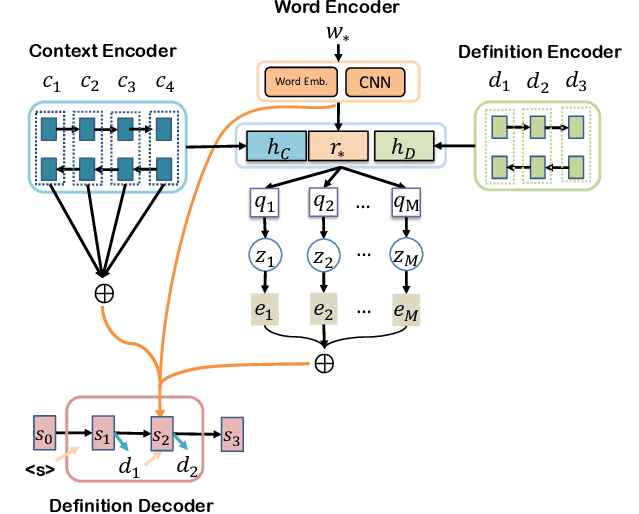

Explicit Semantic Decomposition for Definition Generation

Jiahuan Li, Yu Bao, Shujian Huang, Xinyu Dai, Jiajun Chen