When do Word Embeddings Accurately Reflect Surveys on our Beliefs About People?

Kenneth Joseph, Jonathan Morgan

Computational Social Science and Social Media Long Paper

Session 8A: Jul 7

(12:00-13:00 GMT)

Session 10A: Jul 7

(20:00-21:00 GMT)

Abstract:

Social biases are encoded in word embeddings. This presents a unique opportunity to study society historically and at scale, and a unique danger when embeddings are used in downstream applications. Here, we investigate the extent to which publicly-available word embeddings accurately reflect beliefs about certain kinds of people as measured via traditional survey methods. We find that biases found in word embeddings do, on average, closely mirror survey data across seventeen dimensions of social meaning. However, we also find that biases in embeddings are much more reflective of survey data for some dimensions of meaning (e.g. gender) than others (e.g. race), and that we can be highly confident that embedding-based measures reflect survey data only for the most salient biases.

You can open the

pre-recorded video

in a separate window.

NOTE: The SlidesLive video may display a random order of the authors.

The correct author list is shown at the top of this webpage.

Similar Papers

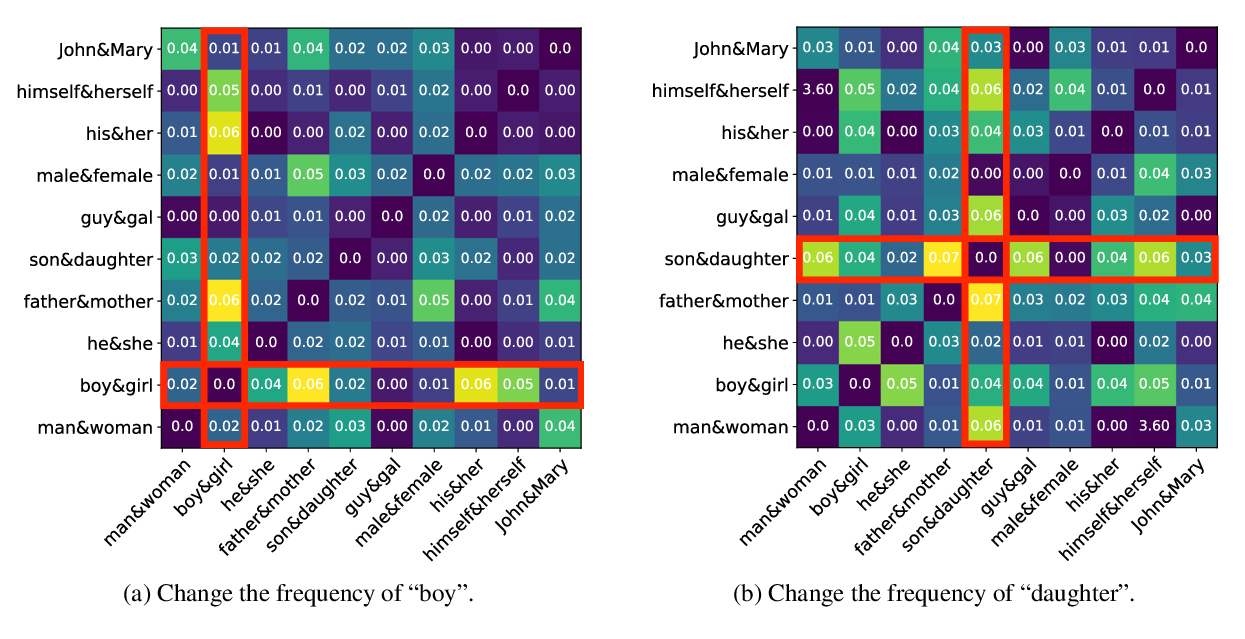

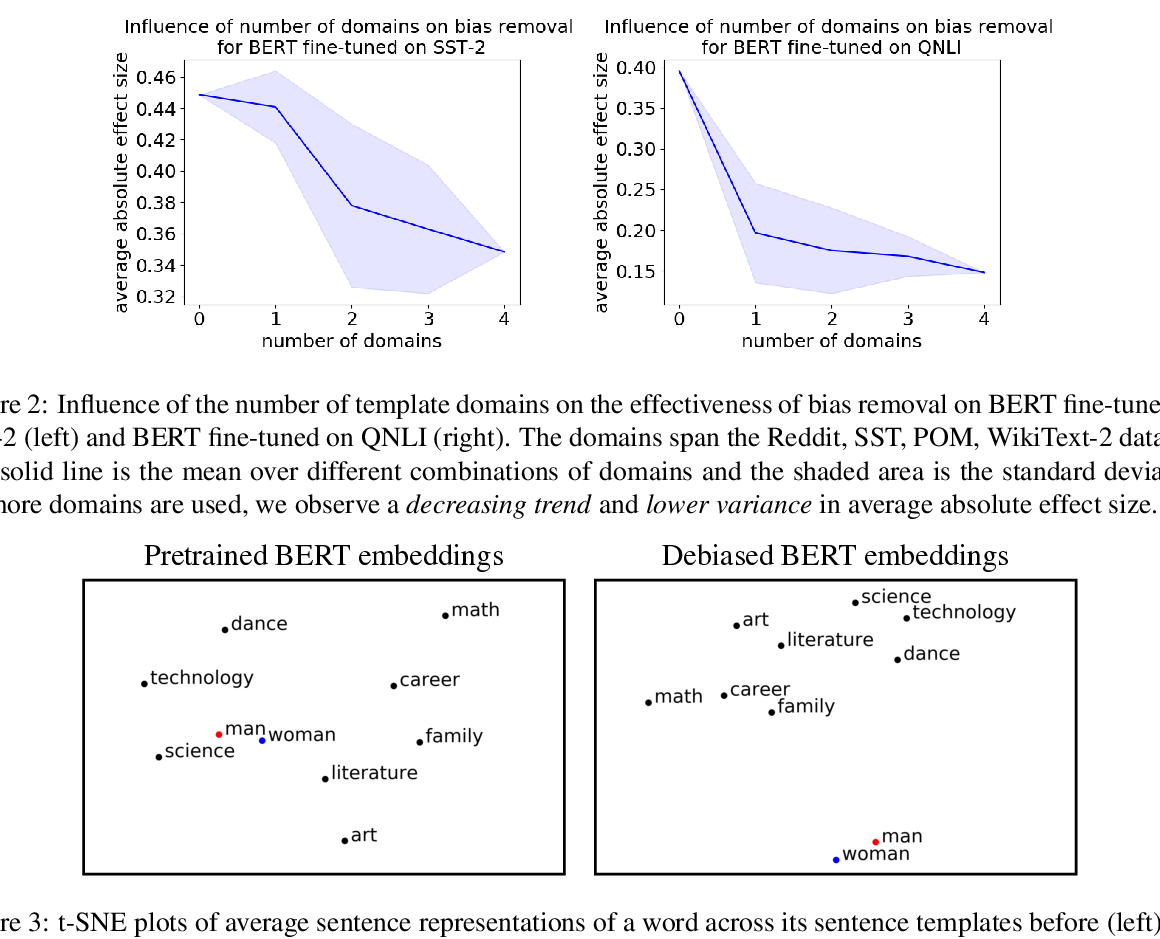

Towards Debiasing Sentence Representations

Paul Pu Liang, Irene Mengze Li, Emily Zheng, Yao Chong Lim, Ruslan Salakhutdinov, Louis-Philippe Morency,

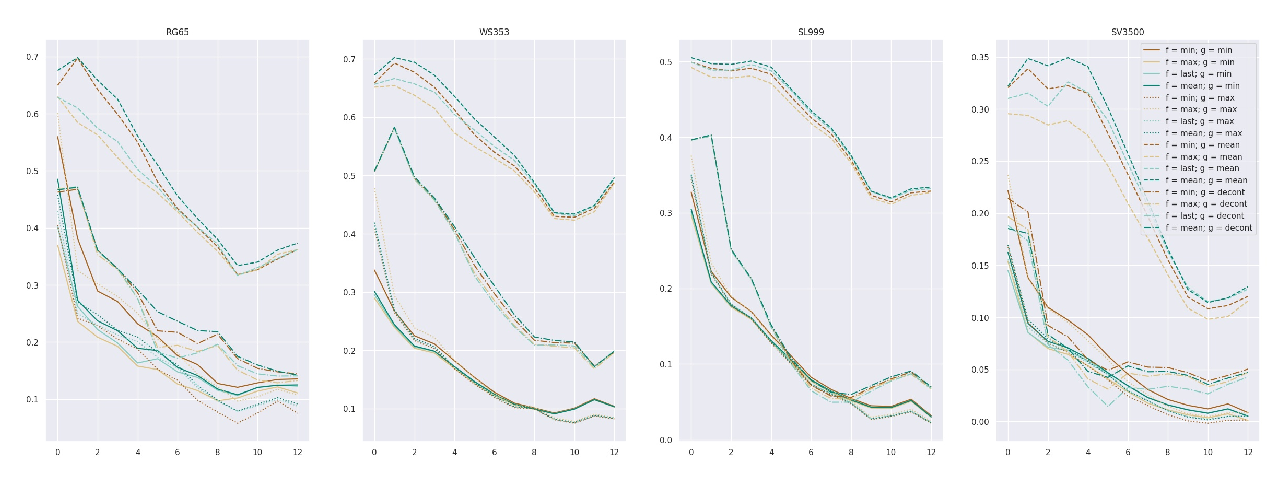

Interpreting Pretrained Contextualized Representations via Reductions to Static Embeddings

Rishi Bommasani, Kelly Davis, Claire Cardie,

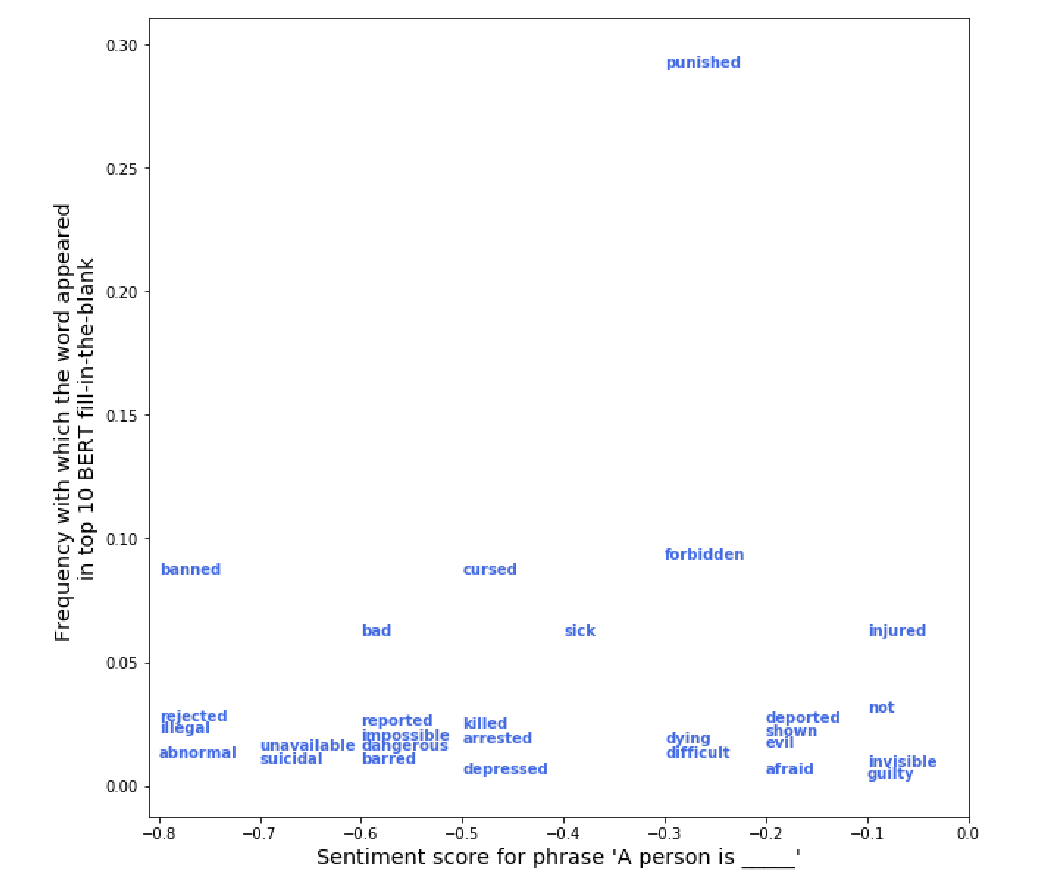

Social Biases in NLP Models as Barriers for Persons with Disabilities

Ben Hutchinson, Vinodkumar Prabhakaran, Emily Denton, Kellie Webster, Yu Zhong, Stephen Denuyl,

Double-Hard Debias: Tailoring Word Embeddings for Gender Bias Mitigation

Tianlu Wang, Xi Victoria Lin, Nazneen Fatema Rajani, Bryan McCann, Vicente Ordonez, Caiming Xiong,