Dialogue-Based Relation Extraction

Dian Yu, Kai Sun, Claire Cardie, Dong Yu

Resources and Evaluation Long Paper

Session 9A: Jul 7 (17:00-18:00 GMT)

Session 10B: Jul 7 (21:00-22:00 GMT)

Abstract:

We present the first human-annotated dialogue-based relation extraction (RE) dataset, DialogRE, aiming to support the prediction of relation(s) between two arguments that appear in a dialogue. We further offer DialogRE as a platform for studying cross-sentence RE, as most facts span multiple sentences. We argue that speaker-related information plays a critical role in the proposed task, based on an analysis of similarities and differences between dialogue-based and traditional RE tasks. Considering the timeliness of communication in a dialogue, we design a new metric to evaluate the performance of RE methods in a conversational setting and investigate the performance of several representative RE methods on DialogRE. Experimental results demonstrate that a speaker-aware extension of the best-performing model leads to gains in both the standard and conversational evaluation settings. DialogRE is available at https://dataset.org/dialogre/.

Similar Papers

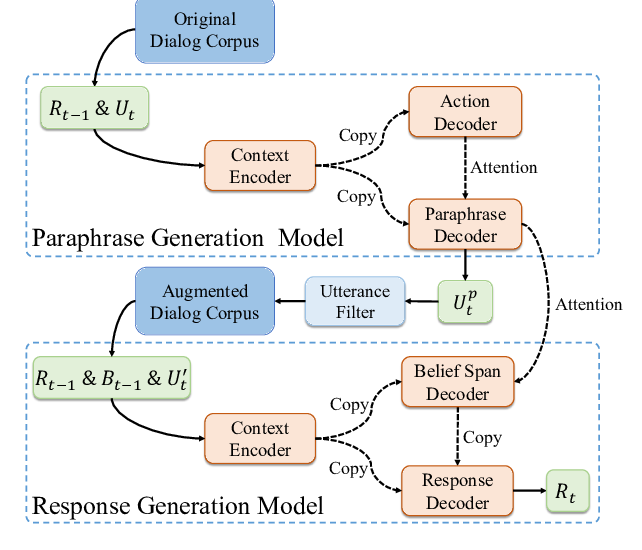

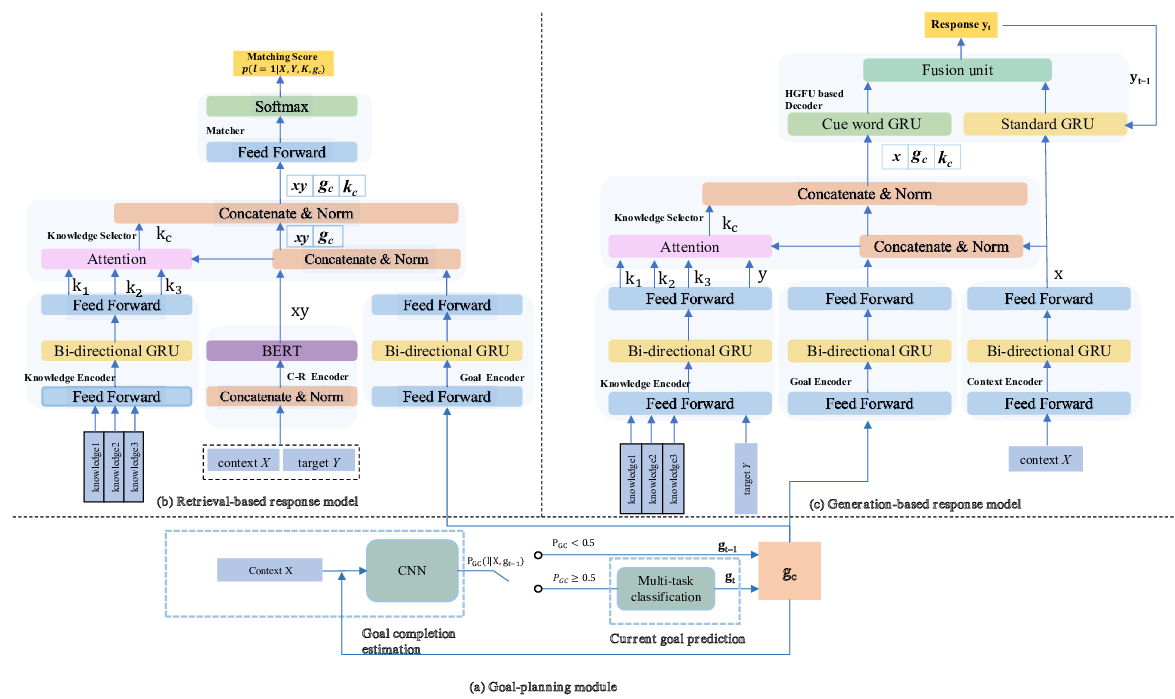

Towards Conversational Recommendation over Multi-Type Dialogs

Zeming Liu, Haifeng Wang, Zheng-Yu Niu, Hua Wu, Wanxiang Che, Ting Liu

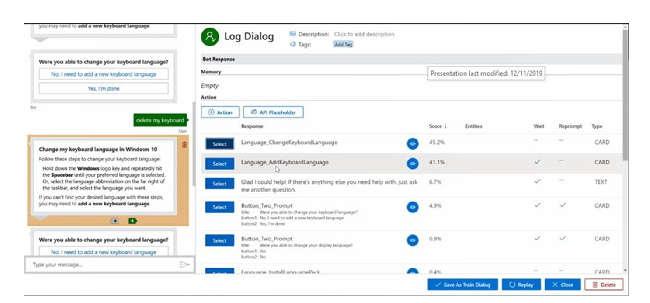

Conversation Learner - A Machine Teaching Tool for Building Dialog Managers for Task-Oriented Dialog Systems

Swadheen Shukla, Lars Liden, Shahin Shayandeh, Eslam Kamal, Jinchao Li, Matt Mazzola, Thomas Park, Baolin Peng, Jianfeng Gao

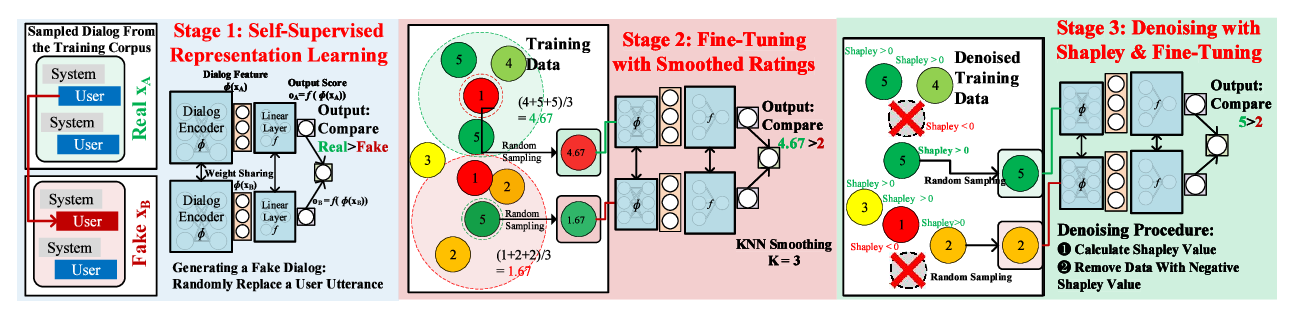

Beyond User Self-Reported Likert Scale Ratings: A Comparison Model for Automatic Dialog Evaluation

Weixin Liang, James Zou, Zhou Yu