What Does BERT with Vision Look At?

Liunian Harold Li, Mark Yatskar, Da Yin, Cho-Jui Hsieh, Kai-Wei Chang

Theme Short Paper

Session 9A: Jul 7

(17:00-18:00 GMT)

Session 10A: Jul 7

(20:00-21:00 GMT)

Abstract:

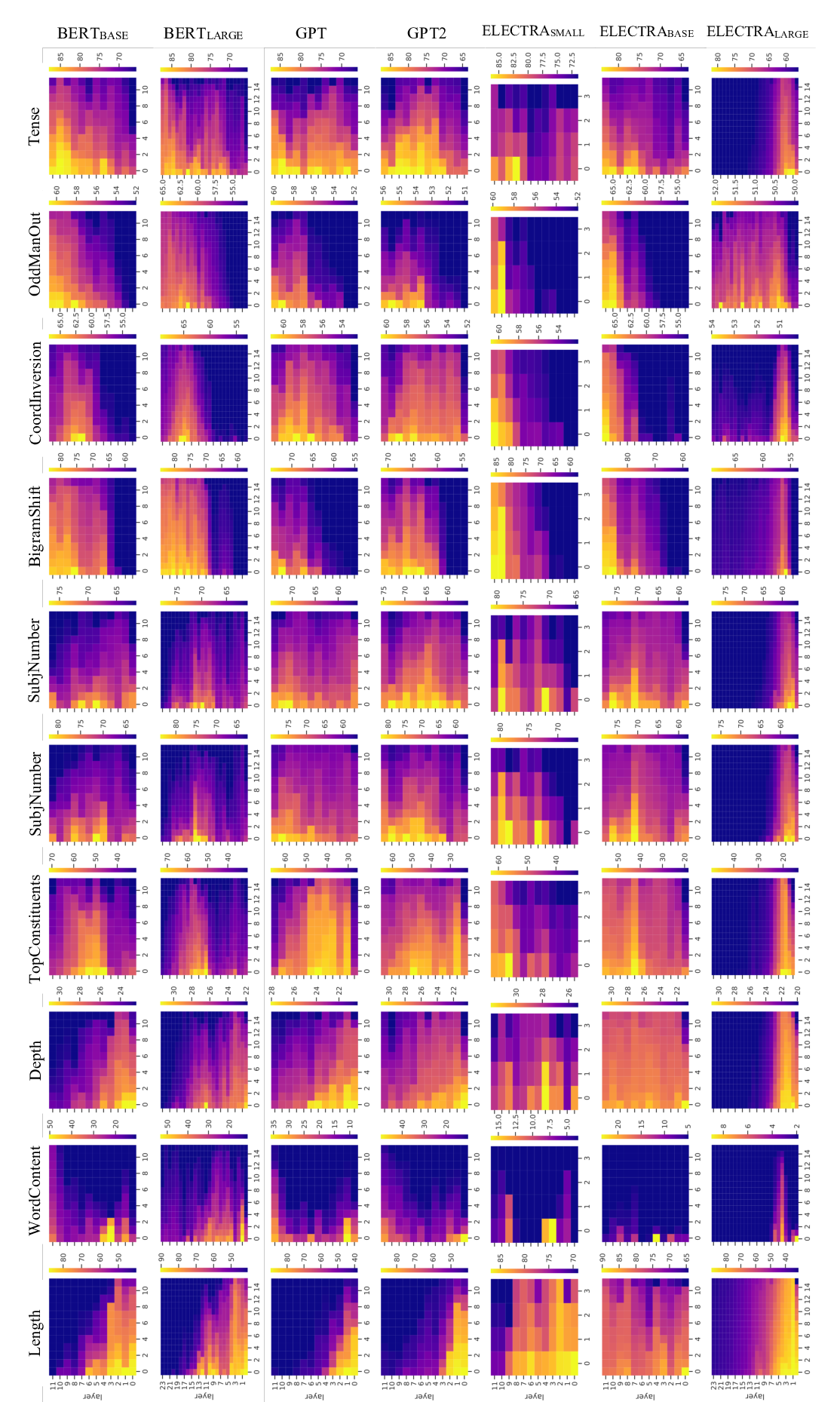

Pre-trained visually grounded language models such as ViLBERT, LXMERT, and UNITER have achieved significant performance improvement on vision-and-language tasks but what they learn during pre-training remains unclear. In this work, we demonstrate that certain attention heads of a visually grounded language model actively ground elements of language to image regions. Specifically, some heads can map entities to image regions, performing the task known as entity grounding. Some heads can even detect the syntactic relations between non-entity words and image regions, tracking, for example, associations between verbs and regions corresponding to their arguments. We denote this ability as syntactic grounding. We verify grounding both quantitatively and qualitatively, using Flickr30K Entities as a testbed.

You can open the

pre-recorded video

in a separate window.

NOTE: The SlidesLive video may display a random order of the authors.

The correct author list is shown at the top of this webpage.

Similar Papers

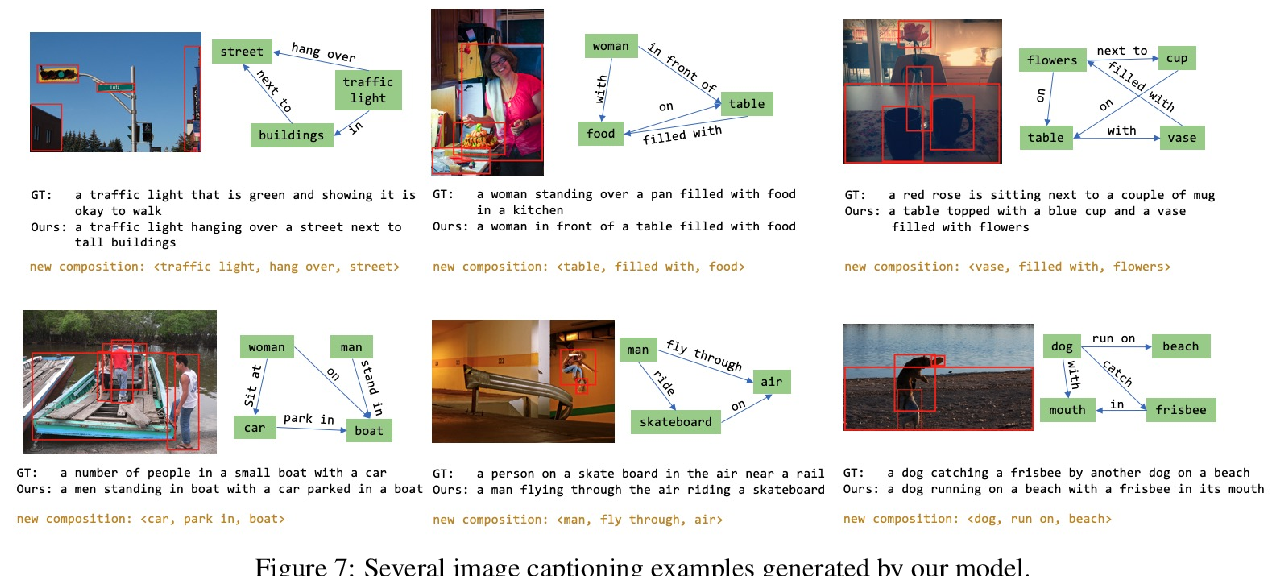

Roles and Utilization of Attention Heads in Transformer-based Neural Language Models

Jae-young Jo, Sung-Hyon Myaeng,

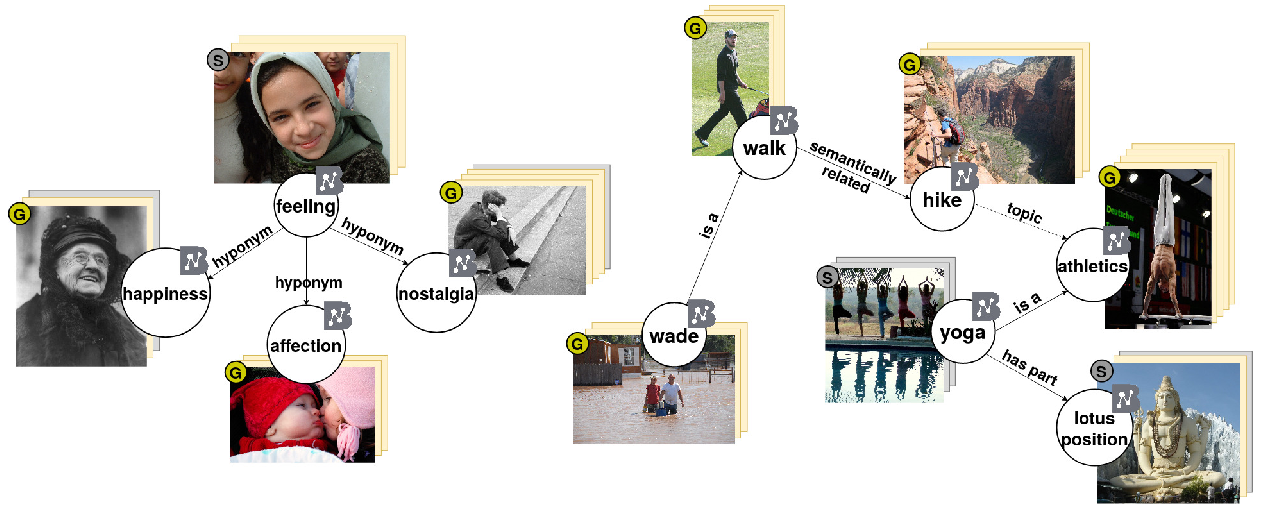

Fatality Killed the Cat or: BabelPic, a Multimodal Dataset for Non-Concrete Concepts

Agostina Calabrese, Michele Bevilacqua, Roberto Navigli,

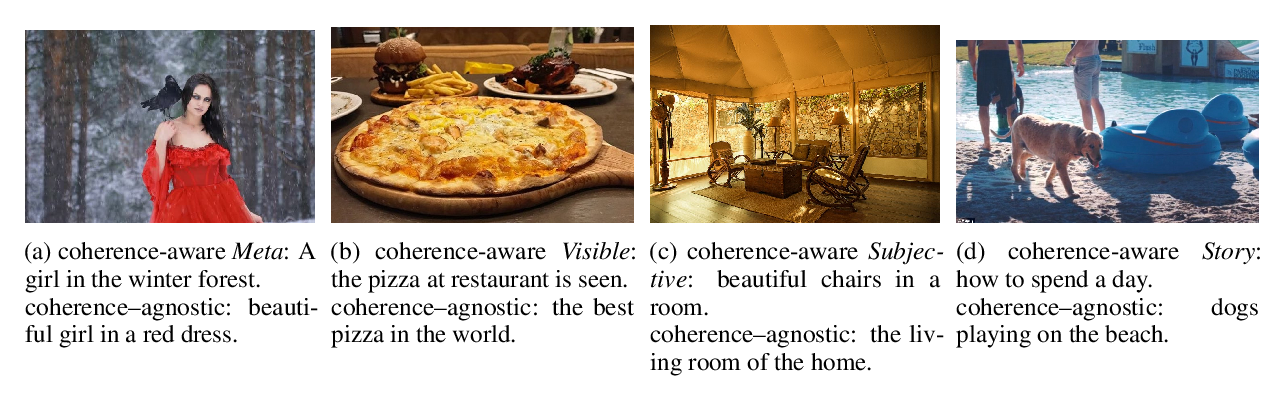

Cross-modal Coherence Modeling for Caption Generation

Malihe Alikhani, Piyush Sharma, Shengjie Li, Radu Soricut, Matthew Stone,