Harnessing the linguistic signal to predict scalar inferences

Sebastian Schuster, Yuxing Chen, Judith Degen

Discourse and Pragmatics Long Paper

Session 9B: Jul 7 (18:00-19:00 GMT)

Session 10B: Jul 7 (21:00-22:00 GMT)

Abstract:

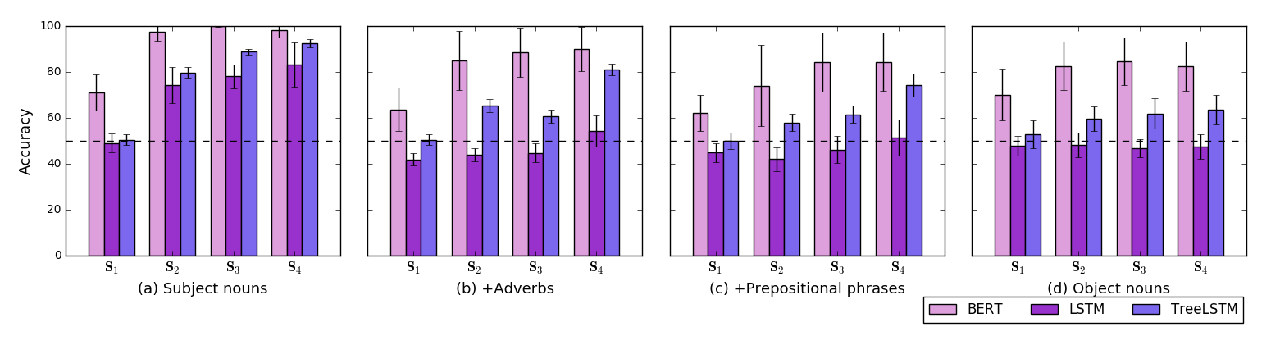

Pragmatic inferences often subtly depend on the presence or absence of linguistic features. For example, the presence of a partitive construction (of the) increases the strength of a so-called scalar inference: listeners perceive the inference that Chris did not eat all of the cookies to be stronger after hearing "Chris ate some of the cookies" than after hearing the same utterance without a partitive, "Chris ate some cookies". In this work, we explore to what extent neural network sentence encoders can learn to predict the strength of scalar inferences. We first show that an LSTM-based sentence encoder trained on an English dataset of human inference strength ratings is able to predict ratings with high accuracy (r = 0.78). We then probe the model's behavior using manually constructed minimal sentence pairs and corpus data. We find that the model inferred previously established associations between linguistic features and inference strength, suggesting that the model learns to use linguistic features to predict pragmatic inferences.
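For readers unfamiliar with the reported metric, the following is a minimal sketch of how agreement between model predictions and human ratings could be scored, assuming that r denotes a Pearson correlation coefficient. The function name `pearson_r` and the toy values are hypothetical illustrations and are not taken from the paper or its dataset.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length numeric sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of co-deviations and sums of squared deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical toy values for illustration only (NOT data from the paper):
# one mean human inference-strength rating and one model prediction per sentence.
human_ratings = [0.9, 0.4, 0.7, 0.2, 0.6]
model_predictions = [0.8, 0.5, 0.6, 0.3, 0.7]

r = pearson_r(human_ratings, model_predictions)
print(f"r = {r:.2f}")
```

A value near 1 would indicate that sentences rated as giving rise to stronger inferences by humans also receive higher predicted ratings from the model.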

Similar Papers

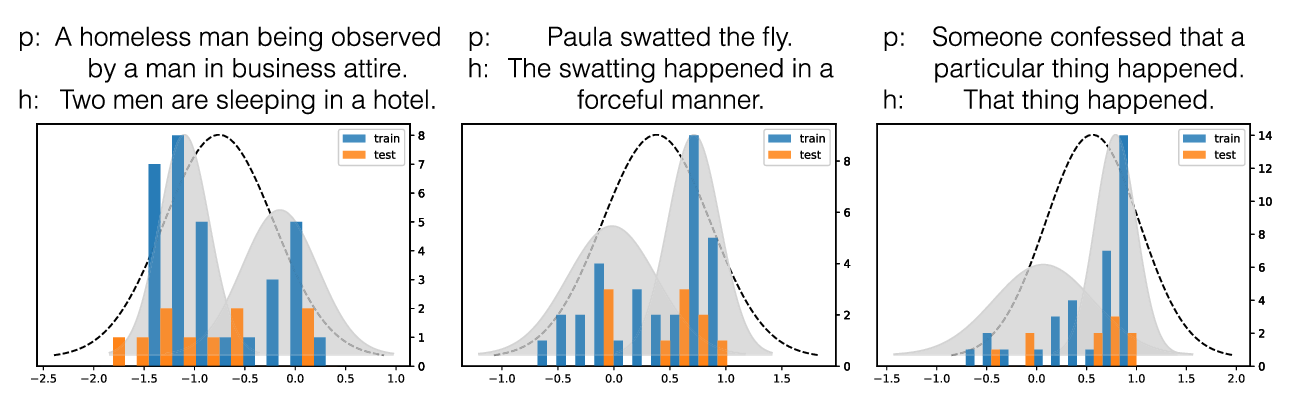

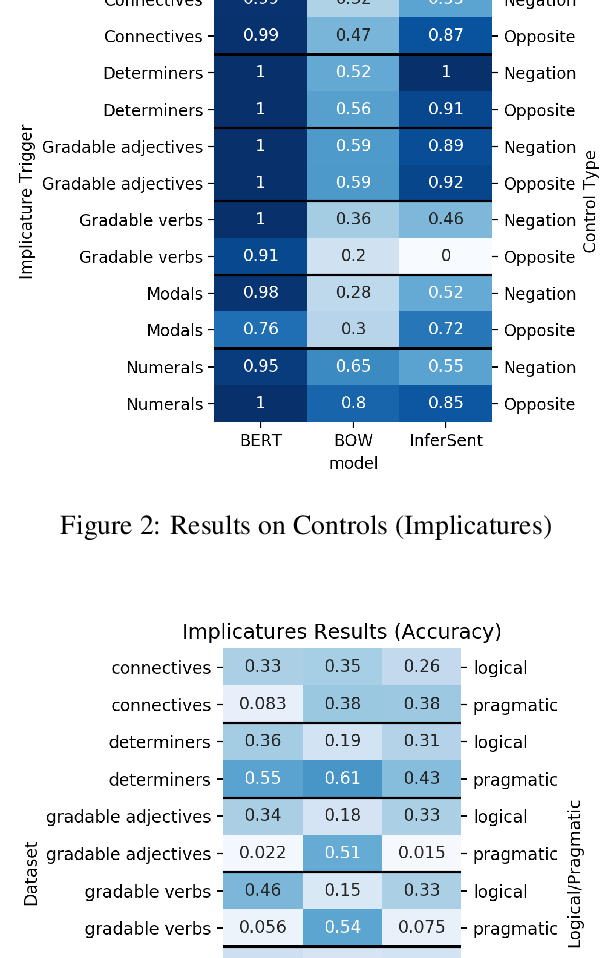

Are Natural Language Inference Models IMPPRESsive? Learning IMPlicature and PRESupposition

Paloma Jeretic, Alex Warstadt, Suvrat Bhooshan, Adina Williams

Do Neural Models Learn Systematicity of Monotonicity Inference in Natural Language?

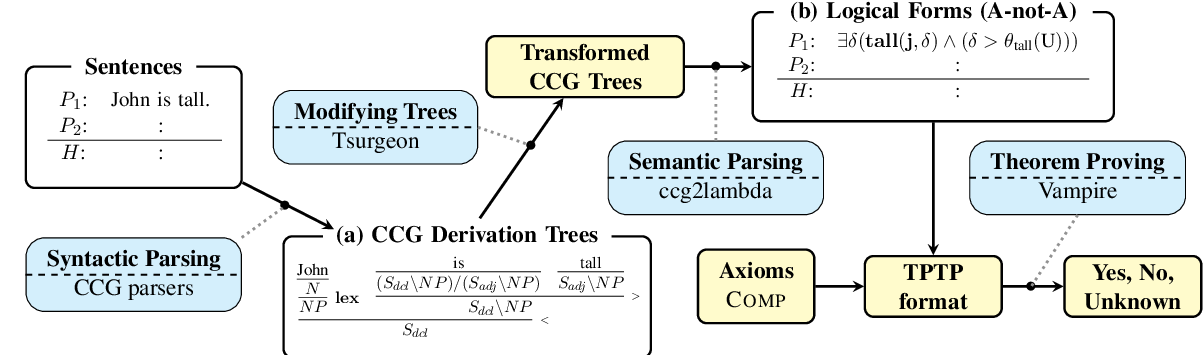

Hitomi Yanaka, Koji Mineshima, Daisuke Bekki, Kentaro Inui

Logical Inferences with Comparatives and Generalized Quantifiers

Izumi Haruta, Koji Mineshima, Daisuke Bekki