Do Neural Models Learn Systematicity of Monotonicity Inference in Natural Language?

Hitomi Yanaka, Koji Mineshima, Daisuke Bekki, Kentaro Inui

Semantics: Textual Inference and Other Areas of Semantics (Long Paper)

Session 11A: Jul 8 (05:00-06:00 GMT)

Session 12B: Jul 8 (09:00-10:00 GMT)

Abstract:

Despite the success of language models using neural networks, it remains unclear to what extent neural models have the generalization ability to perform inferences. In this paper, we introduce a method for evaluating whether neural models can learn systematicity of monotonicity inference in natural language, namely, the regularity for performing arbitrary inferences with generalization on composition. We consider four aspects of monotonicity inferences and test whether the models can systematically interpret lexical and logical phenomena on different training/test splits. A series of experiments show that three neural models systematically draw inferences on unseen combinations of lexical and logical phenomena when the syntactic structures of the sentences are similar between the training and test sets. However, the performance of the models significantly decreases when the structures are slightly changed in the test set while retaining all vocabularies and constituents already appearing in the training set. This indicates that the generalization ability of neural models is limited to cases where the syntactic structures are nearly the same as those in the training set.

Similar Papers

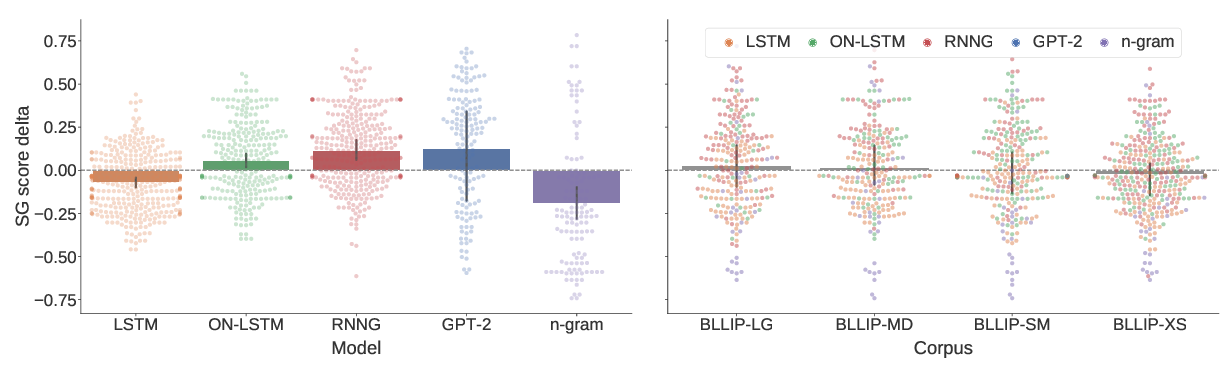

A Systematic Assessment of Syntactic Generalization in Neural Language Models

Jennifer Hu, Jon Gauthier, Peng Qian, Ethan Wilcox, Roger Levy

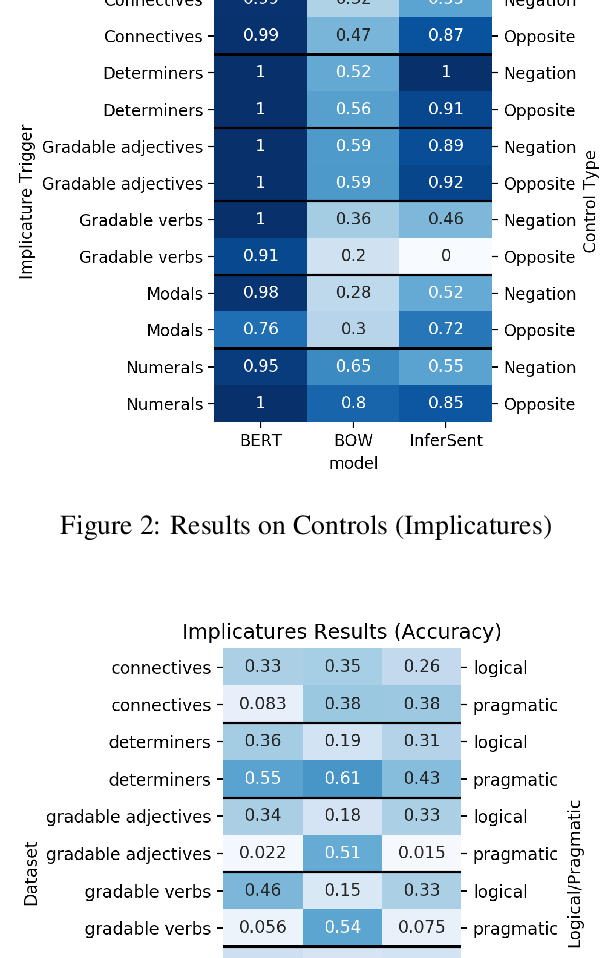

Are Natural Language Inference Models IMPPRESsive? Learning IMPlicature and PRESupposition

Paloma Jeretic, Alex Warstadt, Suvrat Bhooshan, Adina Williams

Harnessing the linguistic signal to predict scalar inferences

Sebastian Schuster, Yuxing Chen, Judith Degen