Obtaining Faithful Interpretations from Compositional Neural Networks

Sanjay Subramanian, Ben Bogin, Nitish Gupta, Tomer Wolfson, Sameer Singh, Jonathan Berant, Matt Gardner

Interpretability and Analysis of Models for NLP Long Paper

Session 9B: Jul 7

(18:00-19:00 GMT)

Session 10A: Jul 7

(20:00-21:00 GMT)

Abstract:

Neural module networks (NMNs) are a popular approach for modeling compositionality: they achieve high accuracy when applied to problems in language and vision, while reflecting the compositional structure of the problem in the network architecture. However, prior work implicitly assumed that the structure of the network modules, describing the abstract reasoning process, provides a faithful explanation of the model's reasoning; that is, that all modules perform their intended behaviour. In this work, we propose and conduct a systematic evaluation of the intermediate outputs of NMNs on NLVR2 and DROP, two datasets which require composing multiple reasoning steps. We find that the intermediate outputs differ from the expected output, illustrating that the network structure does not provide a faithful explanation of model behaviour. To remedy that, we train the model with auxiliary supervision and propose particular choices for module architecture that yield much better faithfulness, at a minimal cost to accuracy.

You can open the

pre-recorded video

in a separate window.

NOTE: The SlidesLive video may display a random order of the authors.

The correct author list is shown at the top of this webpage.

Similar Papers

Benefits of Intermediate Annotations in Reading Comprehension

Dheeru Dua, Sameer Singh, Matt Gardner,

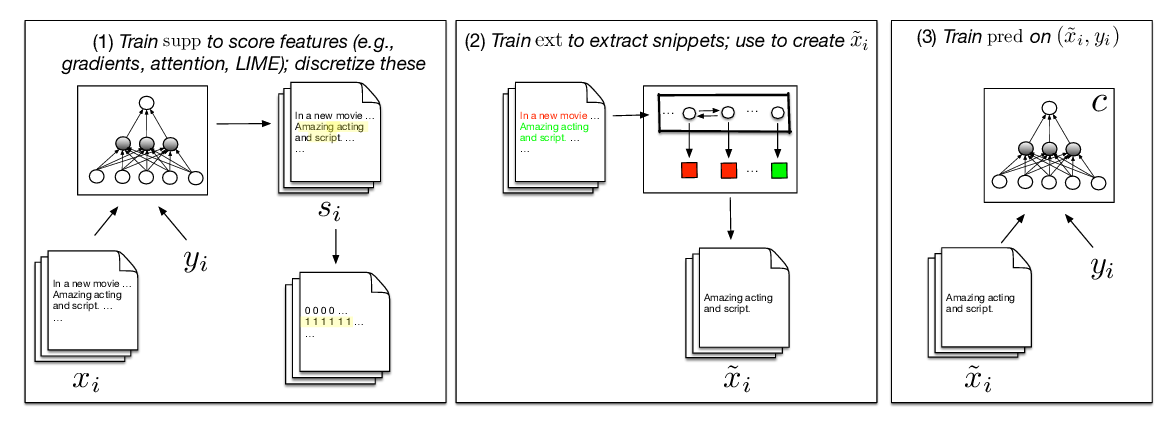

Learning to Faithfully Rationalize by Construction

Sarthak Jain, Sarah Wiegreffe, Yuval Pinter, Byron C. Wallace,

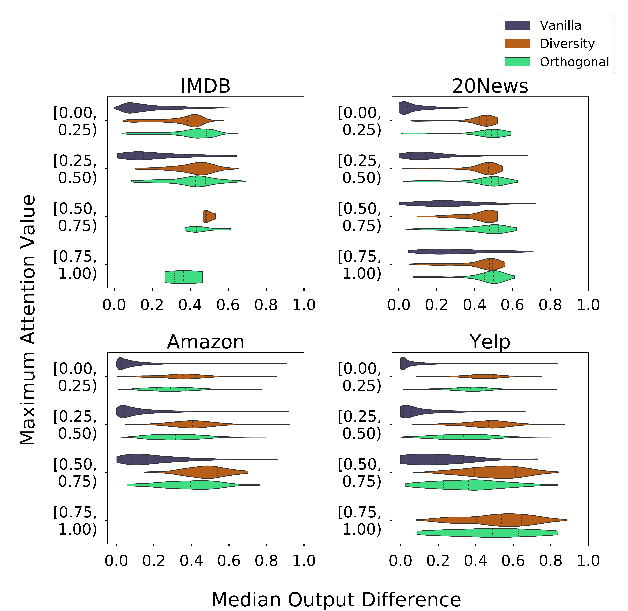

Towards Transparent and Explainable Attention Models

Akash Kumar Mohankumar, Preksha Nema, Sharan Narasimhan, Mitesh M. Khapra, Balaji Vasan Srinivasan, Balaraman Ravindran,

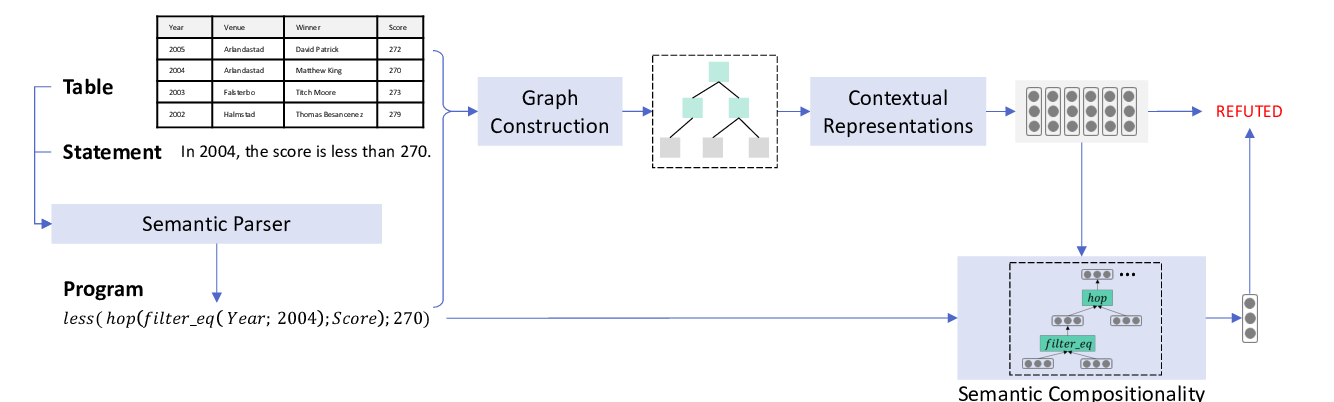

LogicalFactChecker: Leveraging Logical Operations for Fact Checking with Graph Module Network

Wanjun Zhong, Duyu Tang, Zhangyin Feng, Nan Duan, Ming Zhou, Ming Gong, Linjun Shou, Daxin Jiang, Jiahai Wang, Jian Yin,