Relation Extraction with Explanation

Hamed Shahbazi, Xiaoli Fern, Reza Ghaeini, Prasad Tadepalli

Information Extraction Short Paper

Session 11B: Jul 8

(06:00-07:00 GMT)

Session 13A: Jul 8

(12:00-13:00 GMT)

Abstract:

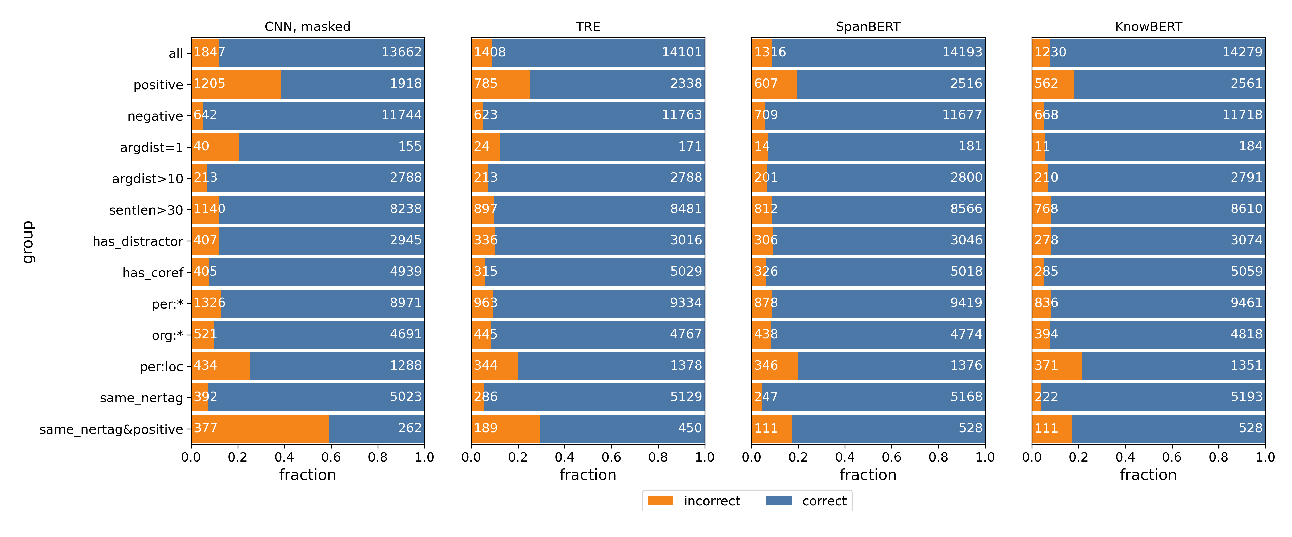

Recent neural models for relation extraction with distant supervision alleviate the impact of irrelevant sentences in a bag by learning importance weights for the sentences. Efforts thus far have focused on improving extraction accuracy, but little is known about their explainability. In this work, we annotate a test set with ground-truth sentence-level explanations to evaluate the quality of explanations afforded by the relation extraction models. We demonstrate that replacing the entity mentions in the sentences with their fine-grained entity types not only enhances extraction accuracy but also improves explanation. We also propose to automatically generate "distractor" sentences to augment the bags and train the model to ignore the distractors. Evaluations on the widely used FB-NYT dataset show that our methods achieve new state-of-the-art accuracy while improving model explainability.
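The abstract mentions two mechanisms: replacing entity mentions with fine-grained entity types, and learning per-sentence importance weights within a bag, where the highest-weighted sentences can be read as the model's explanation. The sketch below is a minimal, hypothetical illustration of both ideas, assuming dot-product selective attention between pre-computed sentence vectors and a relation query vector. The paper's actual encoder, scoring function, entity-type inventory and distractor-generation procedure are not described here, so the function names (`replace_mentions`, `bag_attention`, `explanation_index`), the type labels such as `/person`, and the toy vectors are all illustrative assumptions.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical sketch for illustration only; NOT the authors' implementation.


def replace_mentions(
    tokens: Sequence[str],
    head_span: Tuple[int, int],
    tail_span: Tuple[int, int],
    head_type: str,
    tail_type: str,
) -> List[str]:
    """Replace each entity mention span [start, end) with a single type token.

    Assumes the two spans do not overlap; the type labels are illustrative.
    """
    spans = sorted([(head_span, head_type), (tail_span, tail_type)],
                   key=lambda item: item[0][0])
    out: List[str] = []
    pos = 0
    for (start, end), label in spans:
        out.extend(tokens[pos:start])  # copy the text before the mention
        out.append(label)              # the whole mention becomes one type token
        pos = end
    out.extend(tokens[pos:])           # copy the text after the last mention
    return out


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def bag_attention(sentence_vecs: Sequence[Sequence[float]],
                  relation_query: Sequence[float]):
    """Dot-product attention over the sentences of one bag (assumed scoring).

    Returns the per-sentence importance weights and the weighted bag vector.
    """
    scores = [sum(a * b for a, b in zip(vec, relation_query))
              for vec in sentence_vecs]
    weights = softmax(scores)
    dim = len(relation_query)
    bag = [sum(w * vec[d] for w, vec in zip(weights, sentence_vecs))
           for d in range(dim)]
    return weights, bag


def explanation_index(weights: Sequence[float]) -> int:
    """Index of the highest-weighted sentence, read as the model's explanation."""
    return max(range(len(weights)), key=weights.__getitem__)


if __name__ == "__main__":
    tokens = "Barack Obama was born in Honolulu".split()
    print(replace_mentions(tokens, (0, 2), (5, 6), "/person", "/location/city"))
    # prints ['/person', 'was', 'born', 'in', '/location/city']

    # Toy 2-d sentence vectors for a bag of three sentences.
    weights, bag = bag_attention([[1.0, 0.0], [0.0, 1.0], [2.0, 0.0]], [1.0, 0.0])
    print(explanation_index(weights))  # prints 2
```

Taking the arg-max weight as the explanation is one simple way to compare a model's importance weights against sentence-level ground-truth annotations; under this reading, training the model to ignore distractors would amount to pushing the weights of added distractor sentences toward zero. The exact evaluation metric used in the paper is not stated in the abstract.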

Similar Papers

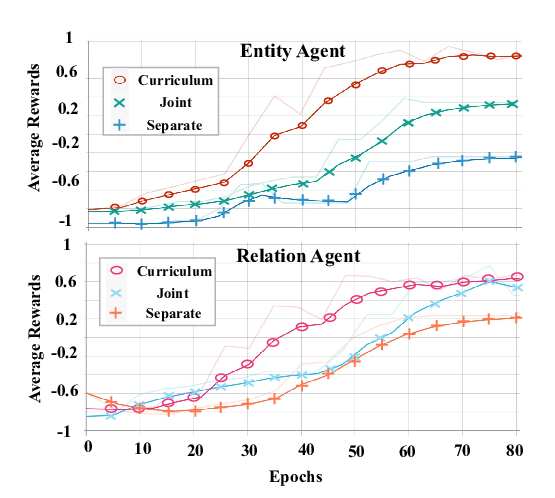

Relabel the Noise: Joint Extraction of Entities and Relations via Cooperative Multiagents

Daoyuan Chen, Yaliang Li, Kai Lei, Ying Shen

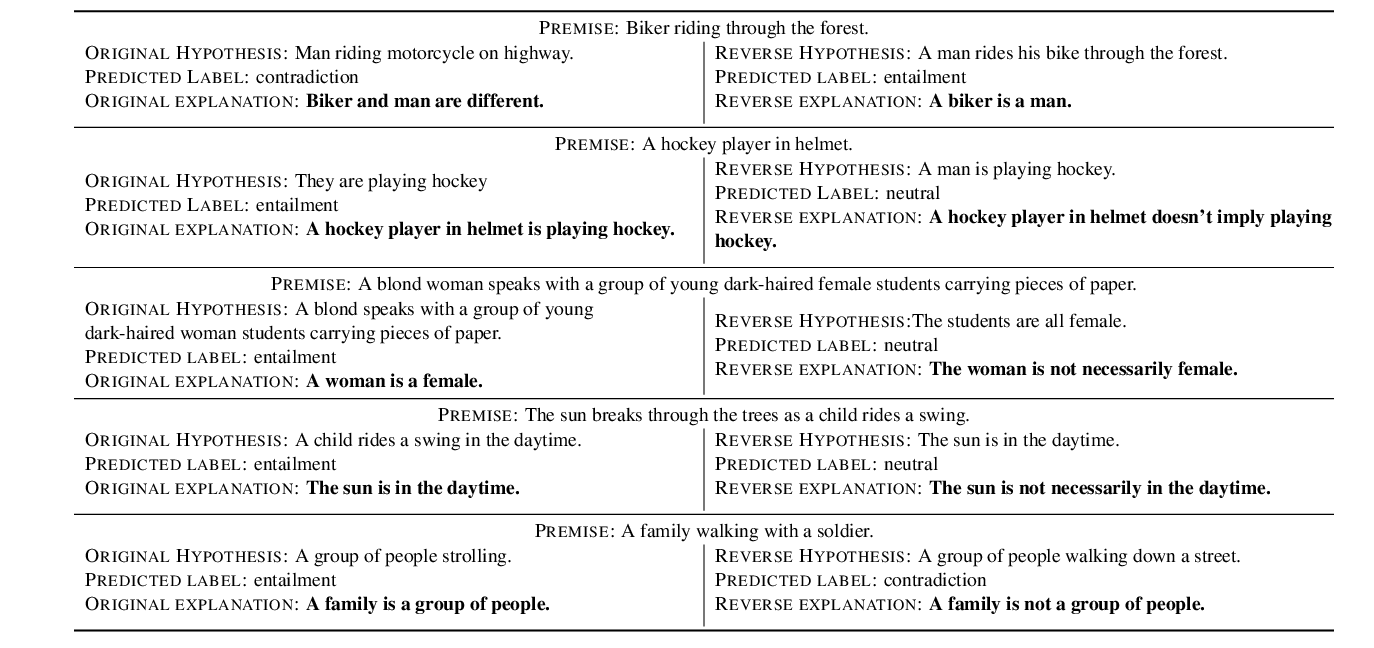

Make Up Your Mind! Adversarial Generation of Inconsistent Natural Language Explanations

Oana-Maria Camburu, Brendan Shillingford, Pasquale Minervini, Thomas Lukasiewicz, Phil Blunsom

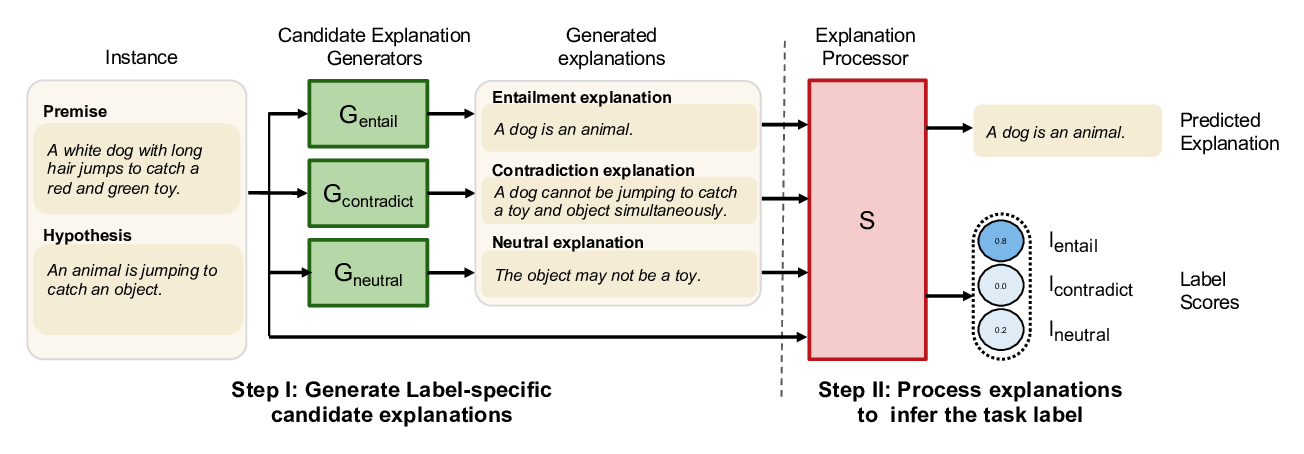

NILE: Natural Language Inference with Faithful Natural Language Explanations

Sawan Kumar, Partha Talukdar

TACRED Revisited: A Thorough Evaluation of the TACRED Relation Extraction Task

Christoph Alt, Aleksandra Gabryszak, Leonhard Hennig