Exploiting Syntactic Structure for Better Language Modeling: A Syntactic Distance Approach

Wenyu Du, Zhouhan Lin, Yikang Shen, Timothy J. O'Donnell, Yoshua Bengio, Yue Zhang

Machine Learning for NLP Long Paper

Session 11B: Jul 8

(06:00-07:00 GMT)

Session 13A: Jul 8

(12:00-13:00 GMT)

Abstract:

It is commonly believed that knowledge of syntactic structure should improve language modeling. However, effectively and computationally efficiently incorporating syntactic structure into neural language models has been a challenging topic. In this paper, we make use of a multi-task objective, i.e., the models simultaneously predict words as well as ground truth parse trees in a form called “syntactic distances”, where information between these two separate objectives shares the same intermediate representation. Experimental results on the Penn Treebank and Chinese Treebank datasets show that when ground truth parse trees are provided as additional training signals, the model is able to achieve lower perplexity and induce trees with better quality.

You can open the

pre-recorded video

in a separate window.

NOTE: The SlidesLive video may display a random order of the authors.

The correct author list is shown at the top of this webpage.

Similar Papers

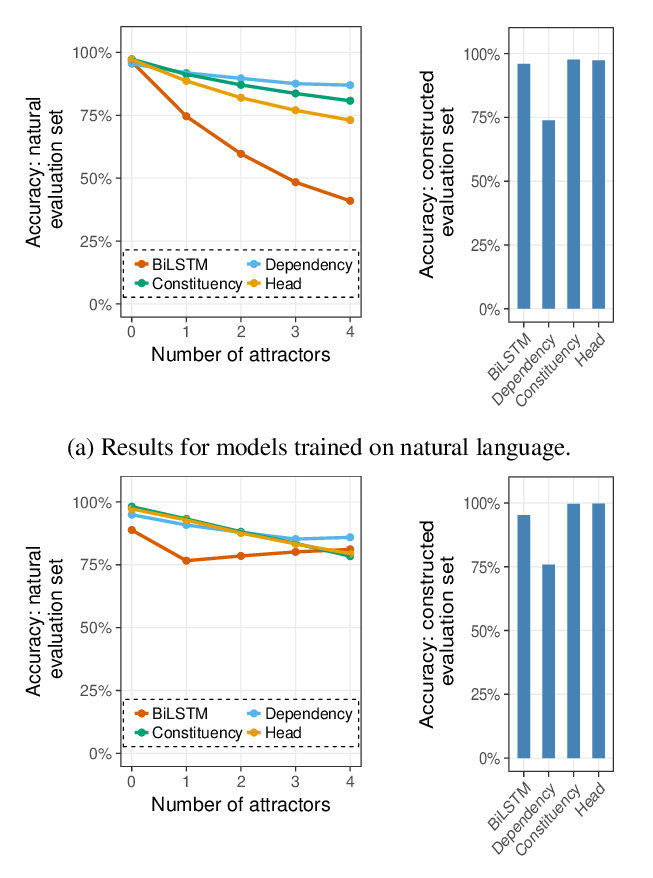

Representations of Syntax [MASK] Useful: Effects of Constituency and Dependency Structure in Recursive LSTMs

Michael Lepori, Tal Linzen, R. Thomas McCoy,

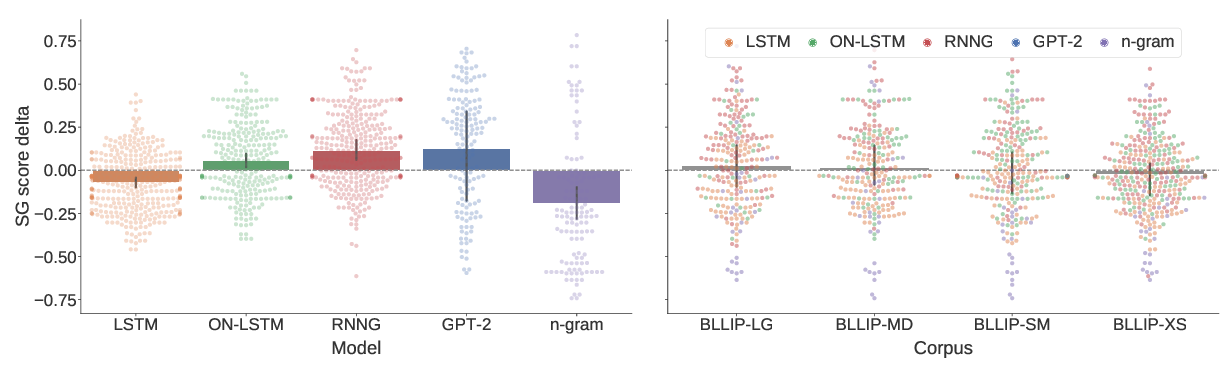

A Systematic Assessment of Syntactic Generalization in Neural Language Models

Jennifer Hu, Jon Gauthier, Peng Qian, Ethan Wilcox, Roger Levy,

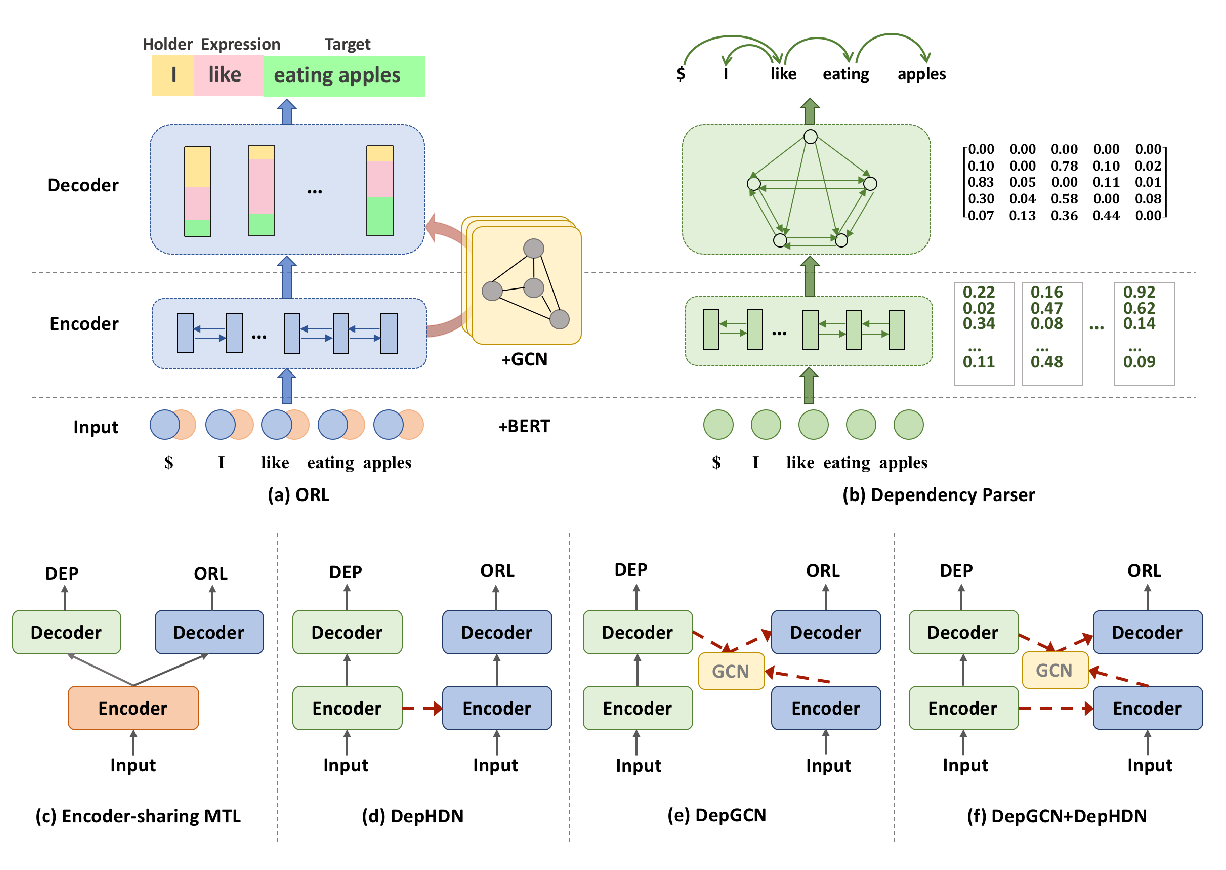

Syntax-Aware Opinion Role Labeling with Dependency Graph Convolutional Networks

Bo Zhang, Yue Zhang, Rui Wang, Zhenghua Li, Min Zhang,

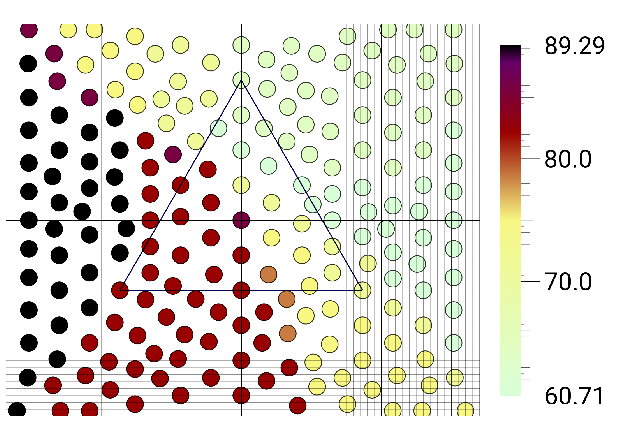

Treebank Embedding Vectors for Out-of-domain Dependency Parsing

Joachim Wagner, James Barry, Jennifer Foster,