Estimating predictive uncertainty for rumour verification models

Elena Kochkina, Maria Liakata

NLP Applications Long Paper

Session 12A: Jul 8 (08:00-09:00 GMT)

Session 14B: Jul 8 (18:00-19:00 GMT)

Abstract:

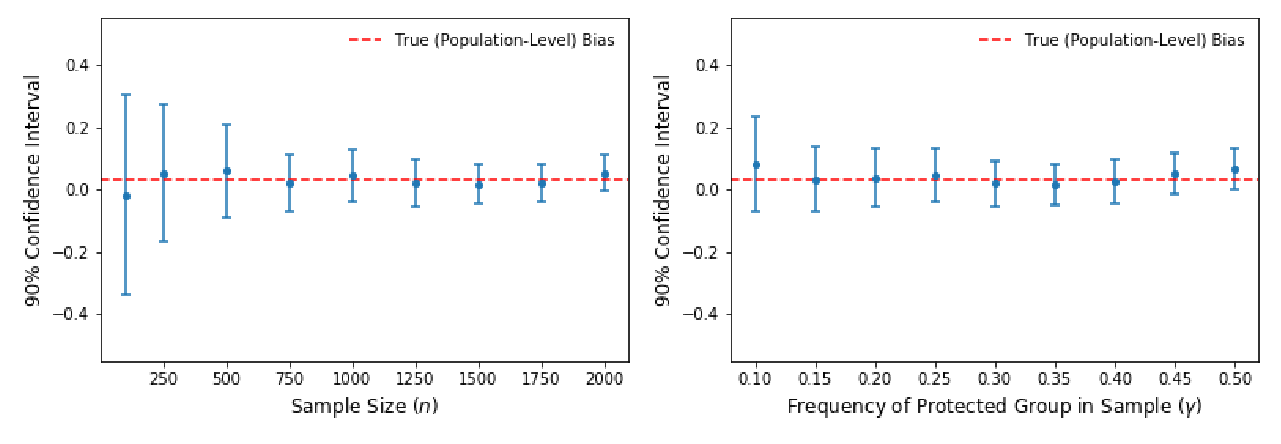

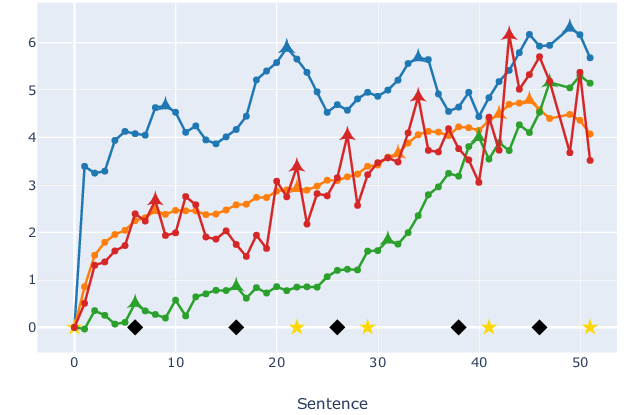

The inability to correctly resolve rumours circulating online can have harmful real-world consequences. We present a method for incorporating model and data uncertainty estimates into natural language processing models for automatic rumour verification. We show that these estimates can be used to filter out model predictions likely to be erroneous, so that these difficult instances can be prioritised by a human fact-checker. We propose two methods for uncertainty-based instance rejection: one supervised and one unsupervised. We also show how uncertainty estimates can be used to interpret model performance as a rumour unfolds.
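The abstract does not say how the uncertainty estimates are computed. Purely as an illustration, the sketch below assumes a common setup: the classifier is run several times with dropout left on (Monte Carlo dropout), the entropy of the averaged prediction is taken as total uncertainty, the mean entropy of the individual passes as data uncertainty, and their difference (the mutual information) as model uncertainty. Unsupervised rejection is then approximated by deferring the most uncertain fraction of instances to a fact-checker. The function names, the example probabilities and the true/false/unverified label set are hypothetical; this is not the authors' code.

```python
import math
from typing import Dict, List, Sequence, Tuple


def entropy(p: Sequence[float]) -> float:
    """Shannon entropy (in nats) of a categorical distribution."""
    return -sum(pk * math.log(pk) for pk in p if pk > 0.0)


def decompose_uncertainty(samples: List[List[float]]) -> Tuple[float, float, float]:
    """Split predictive uncertainty using T stochastic forward passes.

    `samples` holds T class-probability vectors for one instance, e.g. from
    Monte Carlo dropout (an assumption; the abstract does not name a method).
    Returns (total, data, model):
      total: entropy of the mean prediction
      data:  mean entropy of the individual passes (aleatoric part)
      model: total - data, the mutual information (epistemic part)
    """
    n_passes = len(samples)
    n_classes = len(samples[0])
    mean = [sum(s[k] for s in samples) / n_passes for k in range(n_classes)]
    total = entropy(mean)
    data = sum(entropy(s) for s in samples) / n_passes
    return total, data, total - data


def reject_most_uncertain(scores: List[float], reject_fraction: float) -> List[int]:
    """Unsupervised rejection (hypothetical helper): indices of the
    `reject_fraction` share of instances with the highest uncertainty,
    rounded to the nearest count, to be passed to a human fact-checker."""
    n_reject = round(reject_fraction * len(scores))
    by_uncertainty = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(by_uncertainty[:n_reject])


# Hypothetical dropout samples over the labels (true, false, unverified).
claims: Dict[str, List[List[float]]] = {
    "a": [[0.90, 0.05, 0.05], [0.85, 0.10, 0.05], [0.92, 0.04, 0.04]],  # confident, consistent
    "b": [[0.40, 0.30, 0.30], [0.35, 0.35, 0.30], [0.38, 0.32, 0.30]],  # every pass unsure
    "c": [[0.90, 0.05, 0.05], [0.10, 0.85, 0.05], [0.05, 0.10, 0.85]],  # passes disagree
}

ids = list(claims)
results = {cid: decompose_uncertainty(s) for cid, s in claims.items()}
for cid in ids:
    total, data, model = results[cid]
    print(f"{cid}: total={total:.3f} data={data:.3f} model={model:.3f}")

# Defer the most uncertain third of the claims (ranked by total uncertainty).
deferred = [ids[i] for i in reject_most_uncertain([results[c][0] for c in ids], 1 / 3)]
print("defer to fact-checker:", deferred)  # ['c']
```

Claim b is uncertain because every pass is unsure (high data uncertainty), while claim c is uncertain because the passes disagree with each other (high model uncertainty). The supervised rejection variant mentioned in the abstract, which presumably learns from labelled examples when a prediction should be rejected, is not covered by this sketch.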

Similar Papers

Suspense in Short Stories is Predicted By Uncertainty Reduction over Neural Story Representation

David Wilmot, Frank Keller

Research Replication Prediction Using Weakly Supervised Learning

Tianyi Luo, Xingyu Li, Hainan Wang, Yang Liu

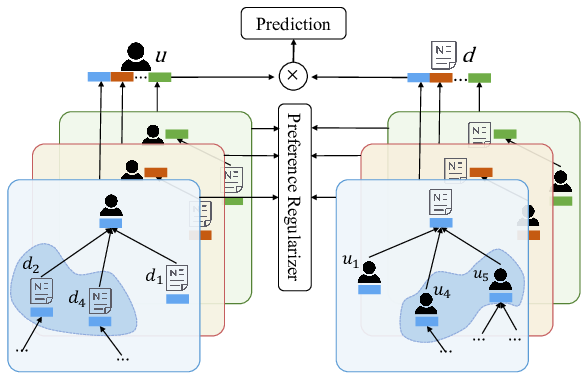

Graph Neural News Recommendation with Unsupervised Preference Disentanglement

Linmei Hu, Siyong Xu, Chen Li, Cheng Yang, Chuan Shi, Nan Duan, Xing Xie, Ming Zhou