Exploring Contextual Word-level Style Relevance for Unsupervised Style Transfer

Chulun Zhou, Liangyu Chen, Jiachen Liu, Xinyan Xiao, Jinsong Su, Sheng Guo, Hua Wu

Generation Long Paper

Session 12B: Jul 8

(09:00-10:00 GMT)

Session 13A: Jul 8

(12:00-13:00 GMT)

Abstract:

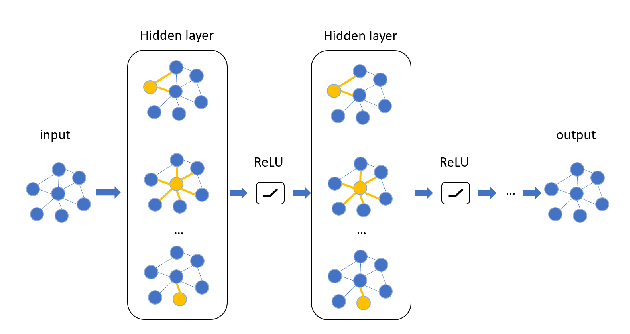

Unsupervised style transfer aims to change the style of an input sentence while preserving its original content without using parallel training data. In current dominant approaches, owing to the lack of fine-grained control on the influence from the target style, they are unable to yield desirable output sentences. In this paper, we propose a novel attentional sequence-to-sequence (Seq2seq) model that dynamically exploits the relevance of each output word to the target style for unsupervised style transfer. Specifically, we first pretrain a style classifier, where the relevance of each input word to the original style can be quantified via layer-wise relevance propagation. In a denoising auto-encoding manner, we train an attentional Seq2seq model to reconstruct input sentences and repredict word-level previously-quantified style relevance simultaneously. In this way, this model is endowed with the ability to automatically predict the style relevance of each output word. Then, we equip the decoder of this model with a neural style component to exploit the predicted wordlevel style relevance for better style transfer. Particularly, we fine-tune this model using a carefully-designed objective function involving style transfer, style relevance consistency, content preservation and fluency modeling loss terms. Experimental results show that our proposed model achieves state-of-the-art performance in terms of both transfer accuracy and content preservation.

You can open the

pre-recorded video

in a separate window.

NOTE: The SlidesLive video may display a random order of the authors.

The correct author list is shown at the top of this webpage.

Similar Papers

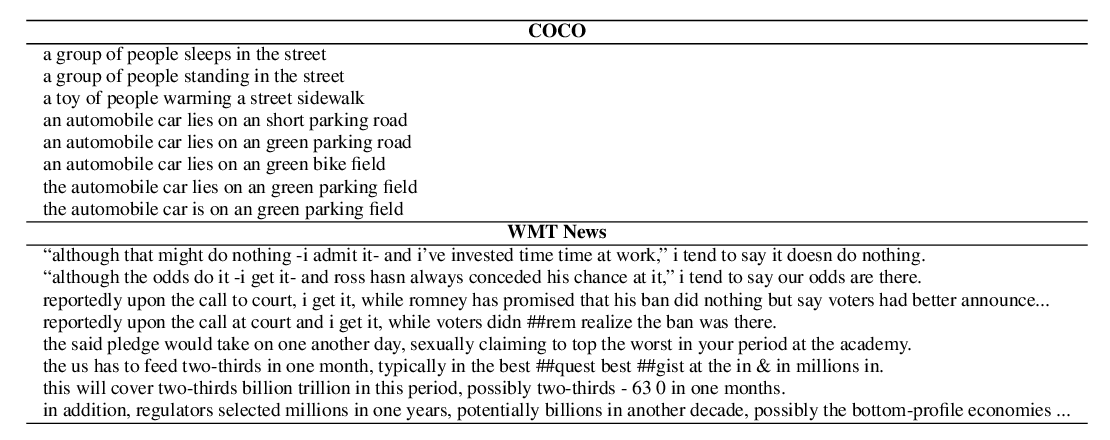

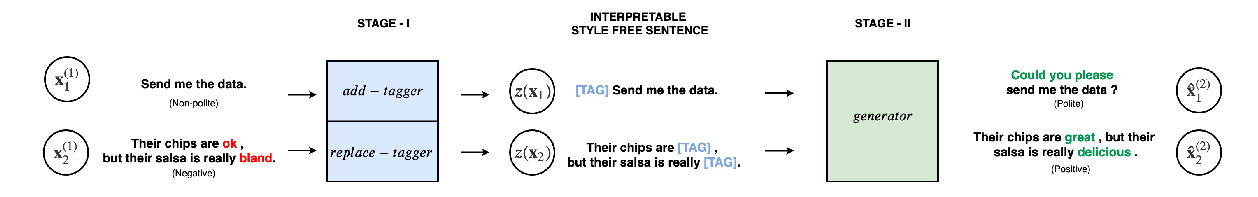

Politeness Transfer: A Tag and Generate Approach

Aman Madaan, Amrith Setlur, Tanmay Parekh, Barnabas Poczos, Graham Neubig, Yiming Yang, Ruslan Salakhutdinov, Alan W Black, Shrimai Prabhumoye,

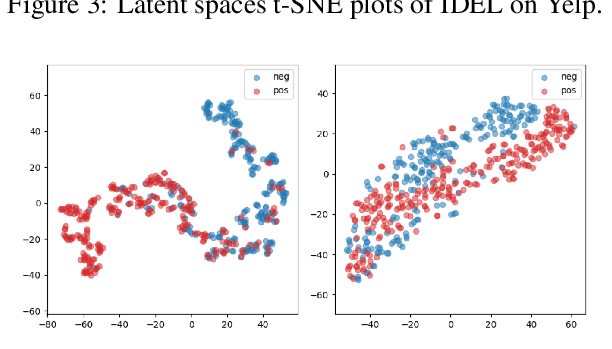

Improving Disentangled Text Representation Learning with Information-Theoretic Guidance

Pengyu Cheng, Martin Renqiang Min, Dinghan Shen, Christopher Malon, Yizhe Zhang, Yitong Li, Lawrence Carin,

Learning Implicit Text Generation via Feature Matching

Inkit Padhi, Pierre Dognin, Ke Bai, Cícero Nogueira dos Santos, Vijil Chenthamarakshan, Youssef Mroueh, Payel Das,