Regularized Context Gates on Transformer for Machine Translation

Xintong Li, Lemao Liu, Rui Wang, Guoping Huang, Max Meng

Machine Translation Short Paper

Session 14B: Jul 8 (18:00-19:00 GMT)

Session 15B: Jul 8 (21:00-22:00 GMT)

Abstract:

Context gates are effective at controlling the contributions from the source and target contexts in recurrent neural network (RNN) based neural machine translation (NMT). However, it is challenging to extend them to the advanced Transformer architecture, which is more complicated than an RNN. This paper first provides a method to identify the source and target contexts and then introduces a gate mechanism to control the source and target contributions in the Transformer. In addition, to further reduce the bias problem in the gate mechanism, this paper proposes a regularization method that guides the learning of the gates with supervision automatically generated using pointwise mutual information (PMI). Extensive experiments on 4 translation datasets demonstrate that the proposed model obtains an average gain of 1.0 BLEU over a strong Transformer baseline.
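The abstract describes the method only at a high level. Below is a minimal Python sketch, not the authors' implementation, of the two ideas it names: a gate that mixes a source-context vector with a target-context vector, and gate supervision derived from pointwise mutual information. Several assumptions are made for illustration: the gate takes the common form z ⊙ s + (1 − z) ⊙ t with diagonal (per-dimension) weights instead of full matrices, PMI is estimated from sentence-level co-occurrence counts, a target word gets label 1 ("rely on source") when it has positive PMI with some source word, and the regularizer is binary cross-entropy. All function names (`context_gate`, `pmi_table`, `gate_label`, `gate_regularizer`) are hypothetical.

```python
import math
from collections import Counter


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def context_gate(src, tgt, w_src, w_tgt, bias):
    """Mix source and target context vectors element-wise (illustrative form).

    z_i   = sigmoid(w_src[i] * src[i] + w_tgt[i] * tgt[i] + bias[i])
    out_i = z_i * src[i] + (1 - z_i) * tgt[i]
    Diagonal weights are a simplification; a real layer would use full matrices.
    """
    gates = [sigmoid(ws * s + wt * t + b)
             for s, t, ws, wt, b in zip(src, tgt, w_src, w_tgt, bias)]
    mixed = [z * s + (1.0 - z) * t for z, s, t in zip(gates, src, tgt)]
    return gates, mixed


def pmi_table(sentence_pairs):
    """PMI(x, y) = log p(x, y) / (p(x) p(y)) from sentence-level co-occurrence."""
    n = len(sentence_pairs)
    src_count, tgt_count, joint_count = Counter(), Counter(), Counter()
    for src_words, tgt_words in sentence_pairs:
        src_set, tgt_set = set(src_words), set(tgt_words)
        src_count.update(src_set)
        tgt_count.update(tgt_set)
        joint_count.update((x, y) for x in src_set for y in tgt_set)
    # (c/n) / ((cx/n) * (cy/n)) simplifies to c * n / (cx * cy)
    return {
        (x, y): math.log(c * n / (src_count[x] * tgt_count[y]))
        for (x, y), c in joint_count.items()
    }


def gate_label(src_words, tgt_word, pmi, threshold=0.0):
    """Hypothetical supervision: 1.0 (favor source) if tgt_word has PMI above
    threshold with any source word, else 0.0 (favor target context)."""
    best = max((pmi.get((x, tgt_word), float("-inf")) for x in src_words),
               default=float("-inf"))
    return 1.0 if best > threshold else 0.0


def gate_regularizer(gate_value, label, eps=1e-9):
    """Binary cross-entropy pulling a scalar gate value toward its PMI label."""
    return -(label * math.log(gate_value + eps)
             + (1.0 - label) * math.log(1.0 - gate_value + eps))


if __name__ == "__main__":
    pairs = [(["das", "haus"], ["the", "house"]),
             (["das", "auto"], ["the", "car"]),
             (["ein", "haus"], ["a", "house"])]
    pmi = pmi_table(pairs)
    label = gate_label(["das", "haus"], "house", pmi)
    gates, mixed = context_gate([1.0, 0.0], [0.0, 1.0],
                                [0.0, 0.0], [0.0, 0.0], [0.0, 0.0])
    print(label, gates, mixed, gate_regularizer(gates[0], label))
```

In this toy corpus, "house" co-occurs with "haus" more often than chance (PMI = log 1.5 > 0), so its gate is pushed toward the source side, whereas a word with no positive-PMI source partner is pushed toward the target context. In a real model the regularizer would be added, with some weight, to the translation loss so that the learned gates are guided rather than fixed.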

Similar Papers

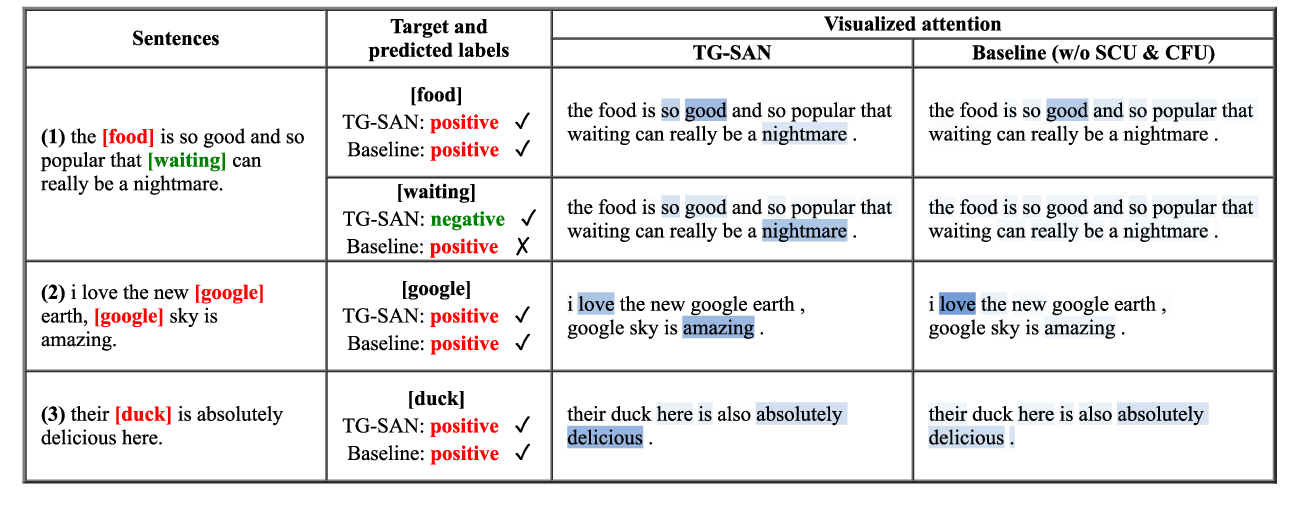

Target-Guided Structured Attention Network for Target-dependent Sentiment Analysis

Ji Zhang, Chengyao Chen, Pengfei Liu, Chao He, Cane Wing-Ki Leung

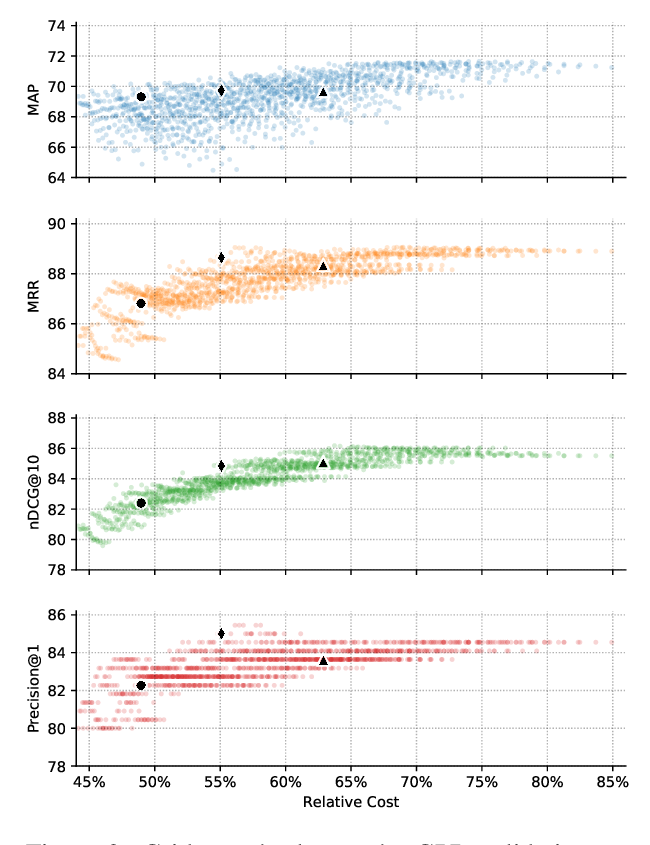

The Cascade Transformer: an Application for Efficient Answer Sentence Selection

Luca Soldaini, Alessandro Moschitti

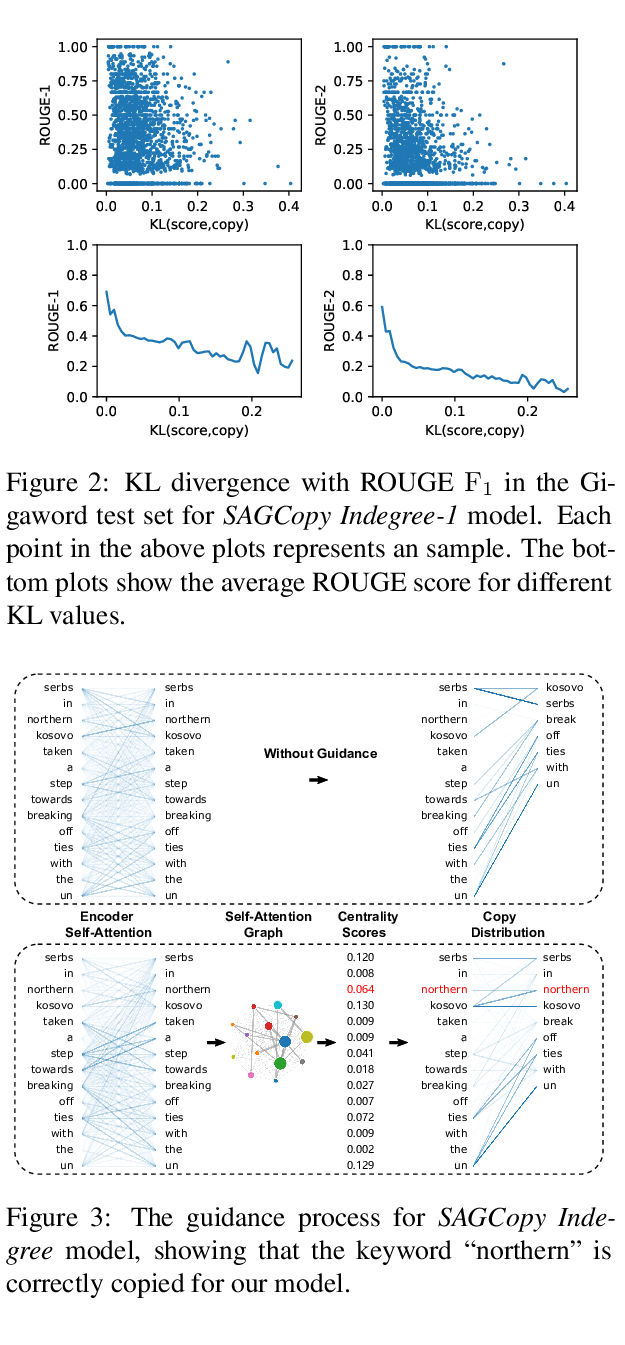

Self-Attention Guided Copy Mechanism for Abstractive Summarization

Song Xu, Haoran Li, Peng Yuan, Youzheng Wu, Xiaodong He, Bowen Zhou

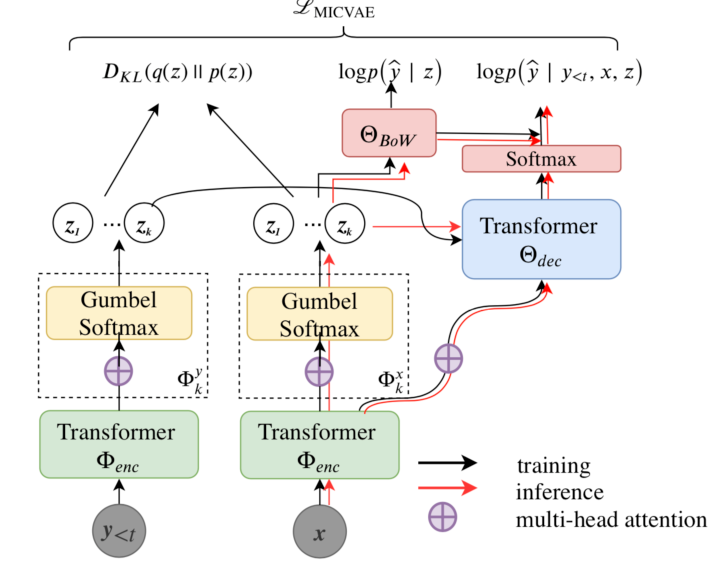

Addressing Posterior Collapse with Mutual Information for Improved Variational Neural Machine Translation

Arya D. McCarthy, Xian Li, Jiatao Gu, Ning Dong