Uncertain Natural Language Inference

Tongfei Chen, Zhengping Jiang, Adam Poliak, Keisuke Sakaguchi, Benjamin Van Durme

Semantics: Textual Inference and Other Areas of Semantics (Short Paper)

Session 14B: Jul 8 (18:00-19:00 GMT)

Session 15A: Jul 8 (20:00-21:00 GMT)

Abstract:

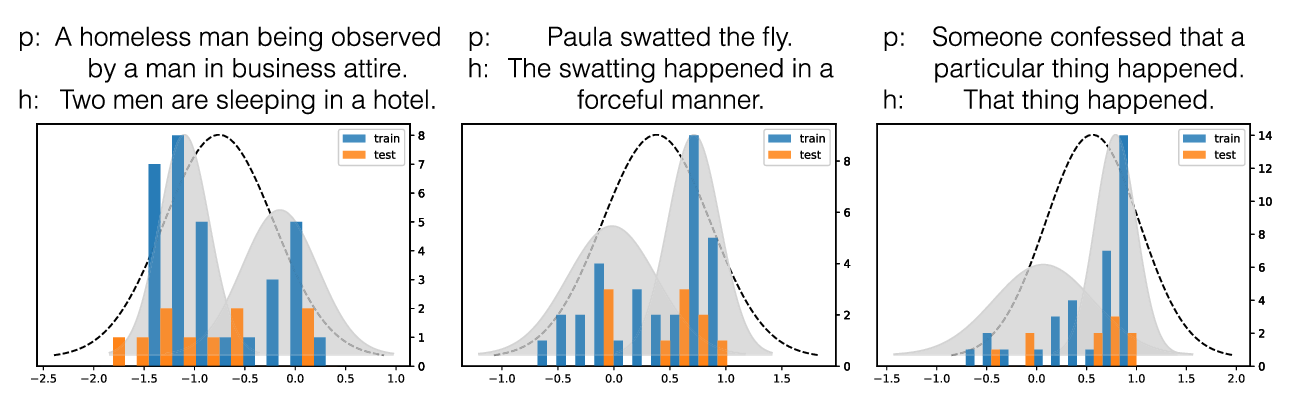

We introduce Uncertain Natural Language Inference (UNLI), a refinement of Natural Language Inference (NLI) that shifts away from categorical labels and instead targets the direct prediction of subjective probability assessments. We demonstrate the feasibility of collecting annotations for UNLI by relabeling a portion of the SNLI dataset on a probabilistic scale, where even items with the same categorical label differ in how likely people judge them to be true given a premise. We describe a direct scalar regression modeling approach and find that existing categorically labeled NLI data can be used in pre-training. Our best models correlate well with human judgments, demonstrating that models are capable of more subtle inferences than the categorical bin assignment employed in current NLI tasks.
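To make the idea of scalar regression and correlation with human judgments concrete, here is a minimal sketch in plain Python. It is not the authors' implementation: the sigmoid squashing, the choice of Pearson correlation and mean squared error as metrics, and all numbers below are assumptions made up purely for illustration.

```python
import math


def sigmoid(z: float) -> float:
    """Map an unbounded model score to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def mse(xs: list[float], ys: list[float]) -> float:
    """Mean squared error between predictions and targets."""
    return sum((x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Hypothetical raw scores from some regression model for four
# premise-hypothesis pairs (toy values, not taken from the paper).
toy_logits = [2.0, 0.5, -1.0, -3.0]
predictions = [sigmoid(z) for z in toy_logits]

# Hypothetical human probability judgments for the same pairs
# (toy values, not taken from the paper).
human_judgments = [0.9, 0.6, 0.3, 0.05]

print("Pearson r:", pearson(predictions, human_judgments))
print("MSE:", mse(predictions, human_judgments))
```

Unlike a three-way classifier, the model here outputs a single number per pair, so two pairs that would both be labeled "neutral" can still receive different predicted probabilities and be scored against different human judgments.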


Similar Papers

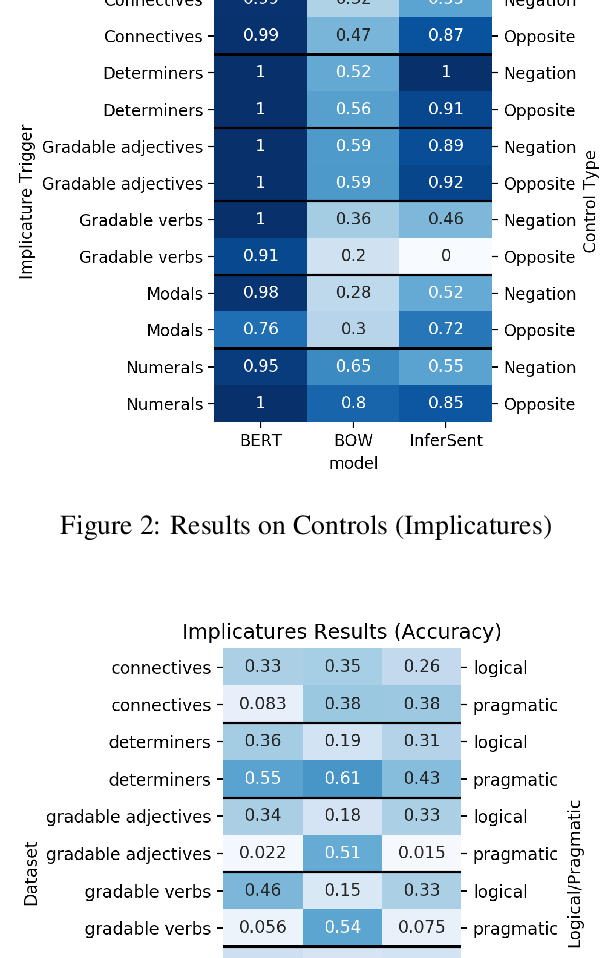

Are Natural Language Inference Models IMPPRESsive? Learning IMPlicature and PRESupposition

Paloma Jeretic, Alex Warstadt, Suvrat Bhooshan, Adina Williams

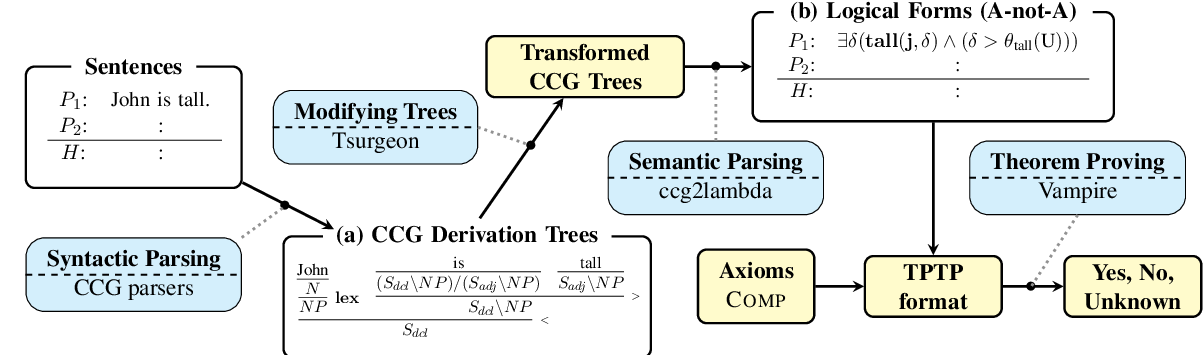

Logical Inferences with Comparatives and Generalized Quantifiers

Izumi Haruta, Koji Mineshima, Daisuke Bekki

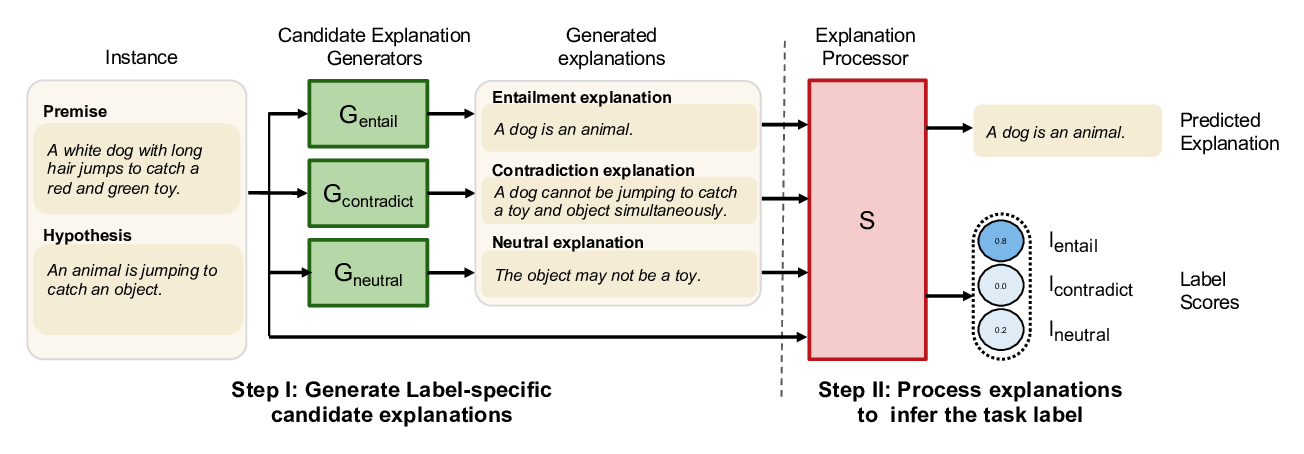

NILE : Natural Language Inference with Faithful Natural Language Explanations

Sawan Kumar, Partha Talukdar