PLATO: Pre-trained Dialogue Generation Model with Discrete Latent Variable

Siqi Bao, Huang He, Fan Wang, Hua Wu, Haifeng Wang

Dialogue and Interactive Systems Long Paper

Session 1A: Jul 6 (05:00-06:00 GMT)

Session 3A: Jul 6 (12:00-13:00 GMT)

Abstract:

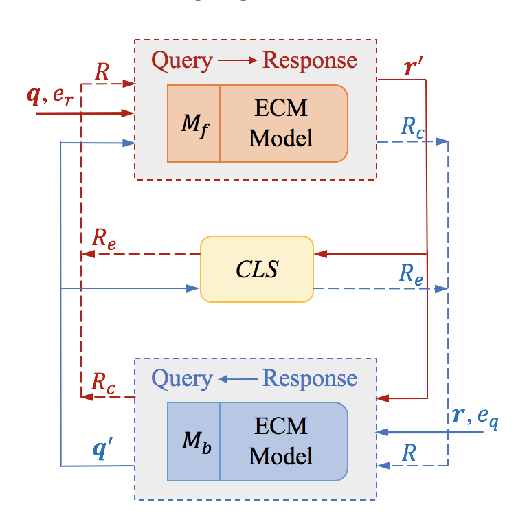

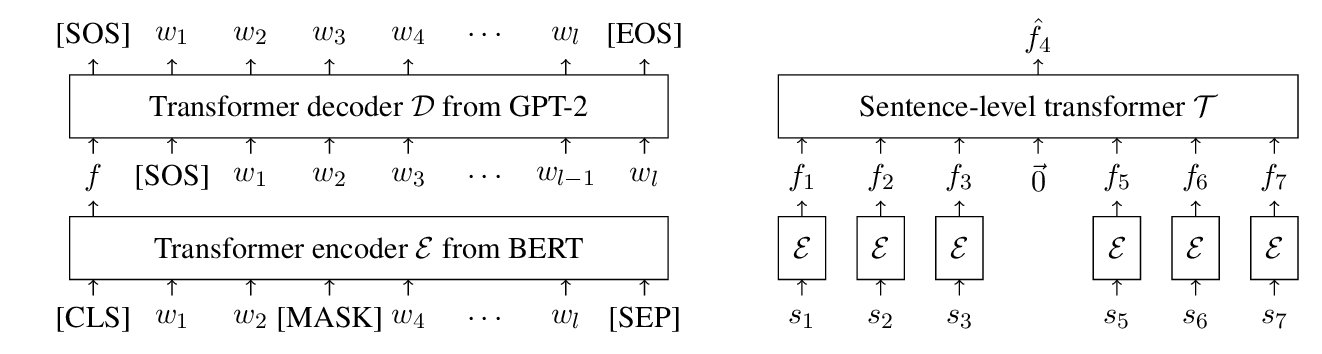

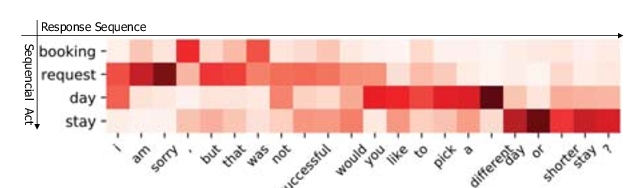

Pre-trained models have proven effective for a wide range of natural language processing tasks. Inspired by this, we propose a novel dialogue generation pre-training framework to support various kinds of conversations, including chit-chat, knowledge-grounded dialogues, and conversational question answering. In this framework, we adopt flexible attention mechanisms to fully leverage the bi-directional context and the uni-directional characteristic of language generation. We also introduce discrete latent variables to tackle the inherent one-to-many mapping problem in response generation. Two reciprocal tasks, response generation and latent act recognition, are designed and carried out simultaneously within a shared network. Comprehensive experiments on three publicly available datasets verify the effectiveness and superiority of the proposed framework.
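The combination of bi-directional context and uni-directional generation mentioned in the abstract can be illustrated with an attention mask. The following is a minimal sketch, not the authors' implementation: it assumes the context and response are concatenated into one sequence, that every position may attend to the whole context, and that response positions may additionally attend only to themselves and earlier response positions. The function name `build_attention_mask` and its parameters are hypothetical.

```python
from typing import List


def build_attention_mask(context_len: int, response_len: int) -> List[List[int]]:
    """Return a mask where mask[i][j] == 1 means position i may attend to j.

    Assumed layout (illustrative only): the first `context_len` positions are
    the dialogue context, the remaining `response_len` positions are the
    response being generated.
    """
    total = context_len + response_len
    mask = [[0] * total for _ in range(total)]
    for i in range(total):
        for j in range(total):
            if j < context_len:
                # Every position sees the full context (bi-directional part).
                mask[i][j] = 1
            elif i >= context_len and j <= i:
                # Response positions see themselves and earlier response
                # positions only (uni-directional part).
                mask[i][j] = 1
    return mask


if __name__ == "__main__":
    # Print the mask for a 2-token context and a 2-token response.
    for row in build_attention_mask(context_len=2, response_len=2):
        print(row)
```

In this sketch, context positions never attend to the response, which keeps generation left-to-right while still letting the context be encoded in both directions.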

Similar Papers

INSET: Sentence Infilling with INter-SEntential Transformer

Yichen Huang, Yizhe Zhang, Oussama Elachqar, Yu Cheng,

Multi-Domain Dialogue Acts and Response Co-Generation

Kai Wang, Junfeng Tian, Rui Wang, Xiaojun Quan, Jianxing Yu,

Variational Neural Machine Translation with Normalizing Flows

Hendra Setiawan, Matthias Sperber, Udhyakumar Nallasamy, Matthias Paulik,