Grammatical Error Correction Using Pseudo Learner Corpus Considering Learner's Error Tendency

Yujin Takahashi, Satoru Katsumata, Mamoru Komachi

Student Research Workshop (SRW) Paper

Session 1B: Jul 6 (06:00-07:00 GMT)

Abstract:

Recently, several studies have focused on improving the performance of grammatical error correction (GEC) using pseudo data. However, a large amount of pseudo data is required to train an accurate GEC model. To address limited language and computational resources, we hypothesize that introducing pseudo errors into sentences similar to those written by language learners is more efficient than incorporating random pseudo errors into monolingual data. We therefore study the effect of pseudo data on GEC performance using two approaches. First, we extract sentences that are similar to learners' sentences from monolingual data. Second, we generate realistic pseudo errors by considering the error types that learners often make. Our comparative results show that F0.5 scores on the Russian GEC task improve significantly.
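The two approaches named in the abstract can be illustrated with a rough sketch. The abstract does not say how similarity is measured or how errors are injected, so everything below is an assumption made for illustration: `select_similar` ranks sentences by Jaccard overlap with a learner vocabulary, `inject_errors` samples from a hypothetical error-type distribution (`ERROR_WEIGHTS`), and the confusion set is invented. It is not the authors' implementation.

```python
import random

# Hypothetical error-type weights. In practice these would be estimated
# from an annotated learner corpus; the values here are made up.
ERROR_WEIGHTS = {"delete": 0.3, "substitute": 0.5, "swap": 0.2}


def jaccard(a, b):
    """Jaccard overlap between two token collections."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def select_similar(candidates, learner_vocab, k):
    """Keep the k candidate sentences closest to the learner vocabulary.

    Assumption: similarity is plain token overlap; the paper may use
    a different measure.
    """
    ranked = sorted(
        candidates,
        key=lambda s: jaccard(s.split(), learner_vocab),
        reverse=True,
    )
    return ranked[:k]


def inject_errors(tokens, error_rate, confusion, rng):
    """Return a noised copy of tokens using weighted error types."""
    kinds = list(ERROR_WEIGHTS)
    weights = list(ERROR_WEIGHTS.values())
    out = []
    i = 0
    while i < len(tokens):
        tok = tokens[i]
        if rng.random() >= error_rate:
            out.append(tok)  # leave this token untouched
            i += 1
            continue
        kind = rng.choices(kinds, weights=weights)[0]
        if kind == "delete":
            pass  # drop the token
        elif kind == "substitute" and tok in confusion:
            out.append(rng.choice(confusion[tok]))
        elif kind == "swap" and i + 1 < len(tokens):
            out.extend([tokens[i + 1], tok])  # swap with the next token
            i += 1
        else:
            out.append(tok)  # chosen error type not applicable here
        i += 1
    return out


if __name__ == "__main__":
    rng = random.Random(0)
    clean = select_similar(
        ["she goes to school every day", "quantum flux capacitor"],
        {"she", "school", "day", "goes"},
        k=1,
    )[0]
    noisy = inject_errors(clean.split(), 0.3, {"to": ["at", "in"]}, rng)
    print(clean, "->", " ".join(noisy))
```

Each resulting (noisy, clean) pair would serve as one pseudo training example. The point of the weighting is that frequent learner error types are generated more often than rare ones, rather than uniformly at random.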

Similar Papers

On the Robustness of Language Encoders against Grammatical Errors

Fan Yin, Quanyu Long, Tao Meng, Kai-Wei Chang

Encoder-Decoder Models Can Benefit from Pre-trained Masked Language Models in Grammatical Error Correction

Masahiro Kaneko, Masato Mita, Shun Kiyono, Jun Suzuki, Kentaro Inui

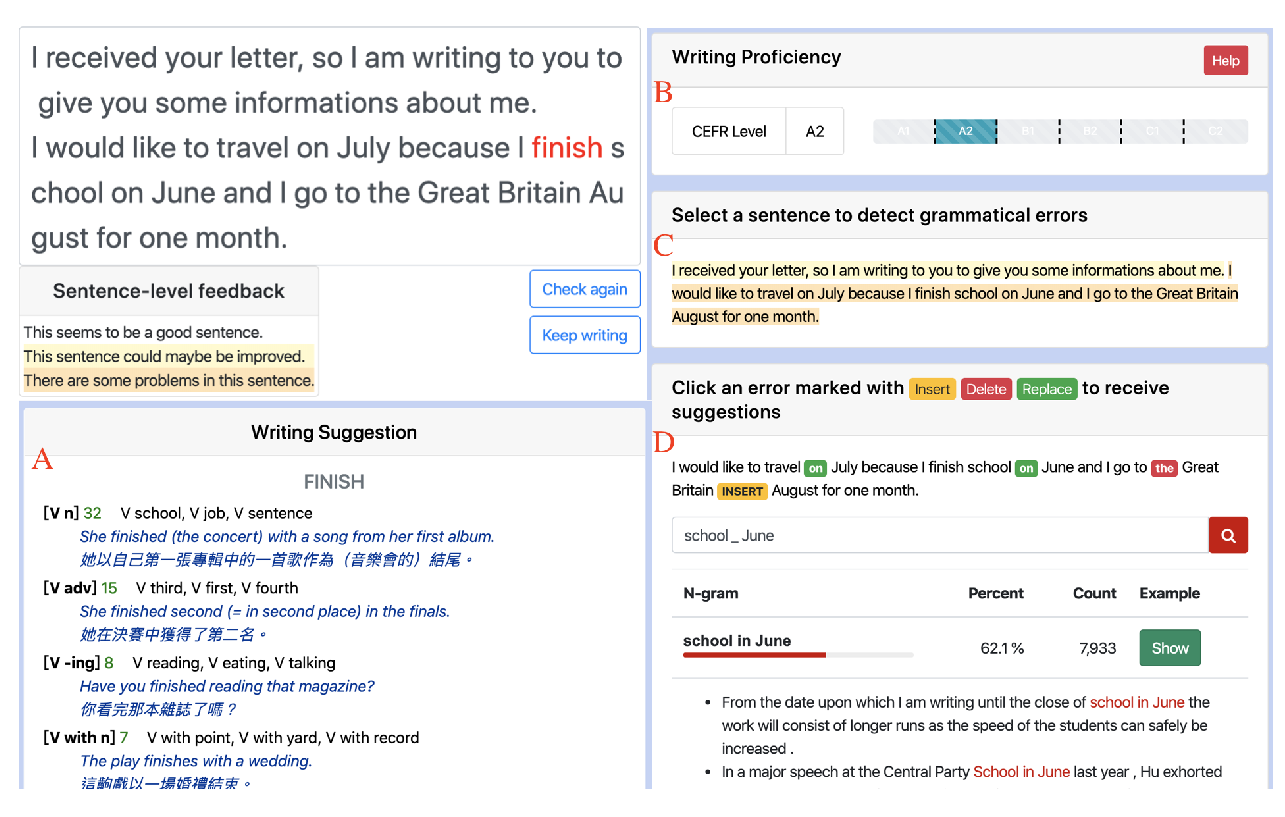

LinggleWrite: a Coaching System for Essay Writing

Chung-Ting Tsai, Jhih-Jie Chen, Ching-Yu Yang, Jason S. Chang

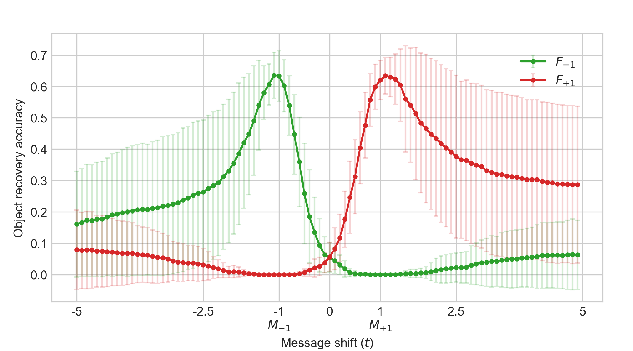

On the Spontaneous Emergence of Discrete and Compositional Signals

Nur Geffen Lan, Emmanuel Chemla, Shane Steinert-Threlkeld