Efficient Contextual Representation Learning With Continuous Outputs

Liunian Harold Li, Patrick H. Chen, Cho-Jui Hsieh, Kai-Wei Chang

Machine Learning for NLP TACL Paper

Session 4B: Jul 6

(18:00-19:00 GMT)

Session 5B: Jul 6

(21:00-22:00 GMT)

Abstract:

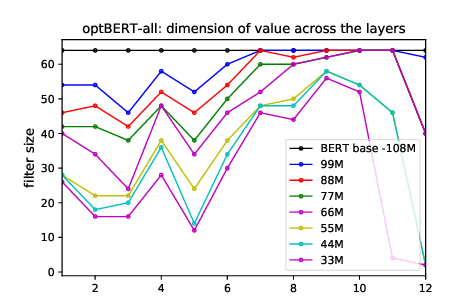

Contextual representation models have achieved great success in improving various downstream natural language processing tasks. However, these language-model-based encoders are difficult to train due to their large parameter size and high computational complexity By carefully examining the training procedure, we observe that the softmax layer, which predicts a distribution of the target word, often induces significant overhead, especially when the vocabulary size is large. Therefore, we revisit the design of the output layer and consider directly predicting the pre-trained embedding of the target word for a given context. When applied to ELMo, the proposed approach achieves a 4 times speedup and eliminates 80% trainable parameters while achieving competitive performance on downstream tasks. Further analysis shows that the approach maintains the speed advantage under various settings, even when the sentence encoder is scaled up.

You can open the

pre-recorded video

in a separate window.

NOTE: The SlidesLive video may display a random order of the authors.

The correct author list is shown at the top of this webpage.

Similar Papers

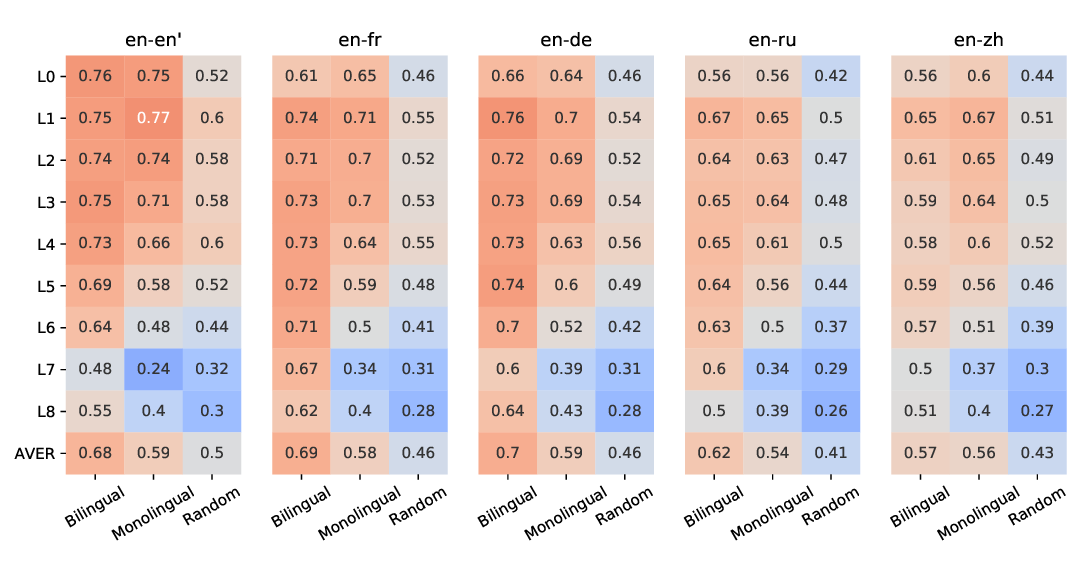

Emerging Cross-lingual Structure in Pretrained Language Models

Alexis Conneau, Shijie Wu, Haoran Li, Luke Zettlemoyer, Veselin Stoyanov,

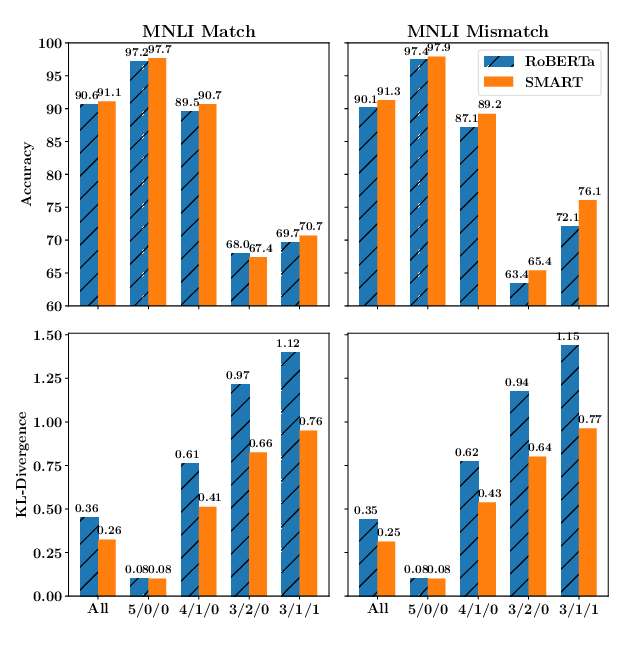

SMART: Robust and Efficient Fine-Tuning for Pre-trained Natural Language Models through Principled Regularized Optimization

Haoming Jiang, Pengcheng He, Weizhu Chen, Xiaodong Liu, Jianfeng Gao, Tuo Zhao,

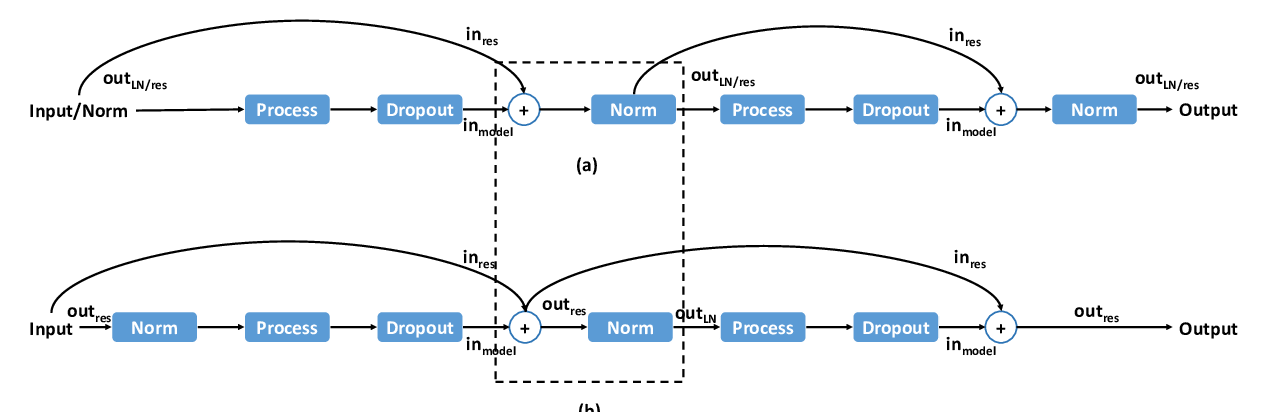

Lipschitz Constrained Parameter Initialization for Deep Transformers

Hongfei Xu, Qiuhui Liu, Josef van Genabith, Deyi Xiong, Jingyi Zhang,