exBERT: A Visual Analysis Tool to Explore Learned Representations in Transformer Models

Benjamin Hoover, Hendrik Strobelt, Sebastian Gehrmann

System Demonstrations Demo Paper

Demo Session 3C-2: Jul 7

(13:30-14:30 GMT)

Demo Session 5B-2: Jul 7

(20:45-21:45 GMT)

Abstract:

Large Transformer-based language models can route and reshape complex information via their multi-headed attention mechanism. Although the attention never receives explicit supervision, it can exhibit recognizable patterns following linguistic or positional information. Analyzing the learned representations and attentions is paramount to furthering our understanding of the inner workings of these models. However, analyses have to catch up with the rapid release of new models and the growing diversity of investigation techniques. To support analysis for a wide variety of models, we introduce exBERT, a tool to help humans conduct flexible, interactive investigations and formulate hypotheses for the model-internal reasoning process. exBERT provides insights into the meaning of the contextual representations and attention by matching a human-specified input to similar contexts in large annotated datasets. By aggregating the annotations of the matched contexts, exBERT can quickly replicate findings from literature and extend them to previously not analyzed models.

You can open the

pre-recorded video

in a separate window.

NOTE: The SlidesLive video may display a random order of the authors.

The correct author list is shown at the top of this webpage.

Similar Papers

Human Attention Maps for Text Classification: Do Humans and Neural Networks Focus on the Same Words?

Cansu Sen, Thomas Hartvigsen, Biao Yin, Xiangnan Kong, Elke Rundensteiner,

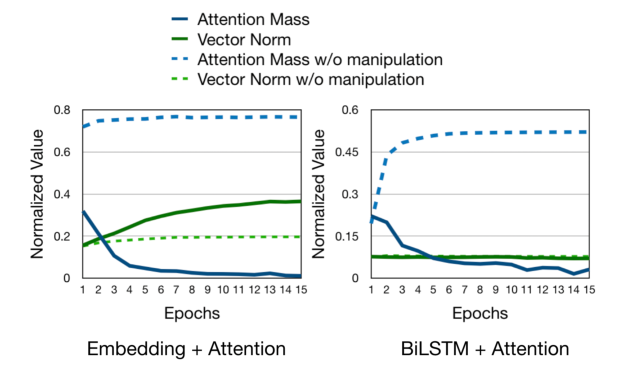

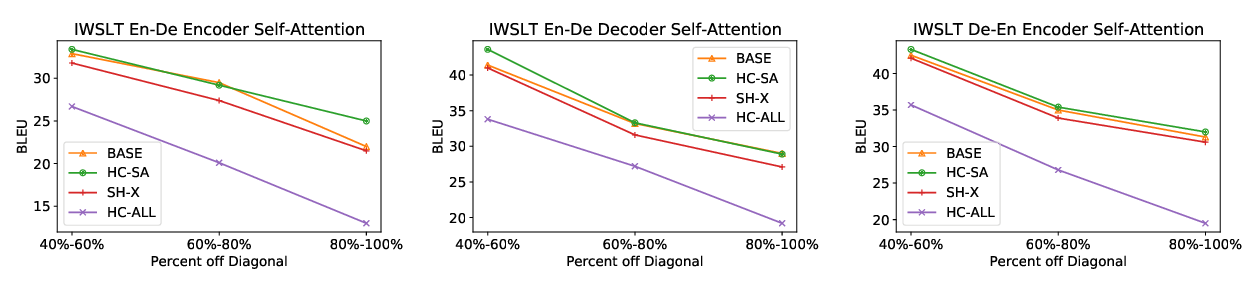

Self-Attention is Not Only a Weight: Analyzing BERT with Vector Norms

Goro Kobayashi, Tatsuki Kuribayashi, Sho Yokoi, Kentaro Inui,

Learning to Deceive with Attention-Based Explanations

Danish Pruthi, Mansi Gupta, Bhuwan Dhingra, Graham Neubig, Zachary C. Lipton,