Self-Attention is Not Only a Weight: Analyzing BERT with Vector Norms

Goro Kobayashi, Tatsuki Kuribayashi, Sho Yokoi, Kentaro Inui

Student Research Workshop (SRW) Paper

Session 6A: Jul 7 (05:00-06:00 GMT)

Session 12B: Jul 8 (09:00-10:00 GMT)

Abstract:

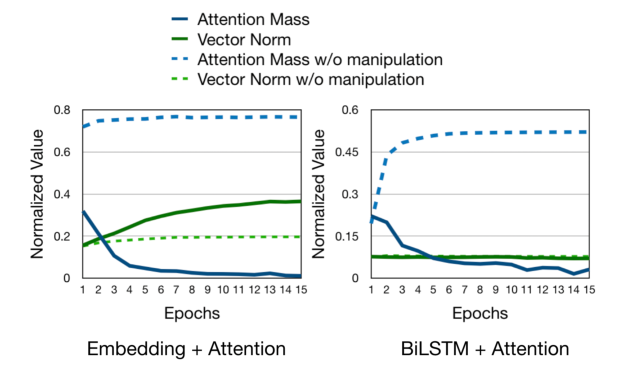

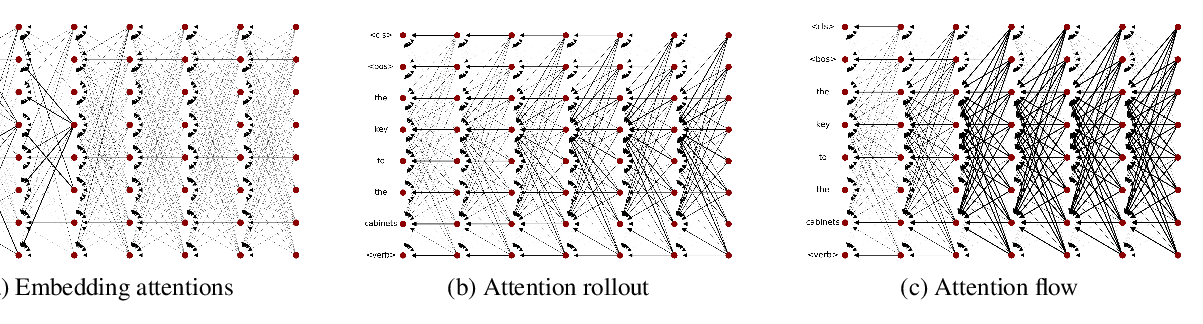

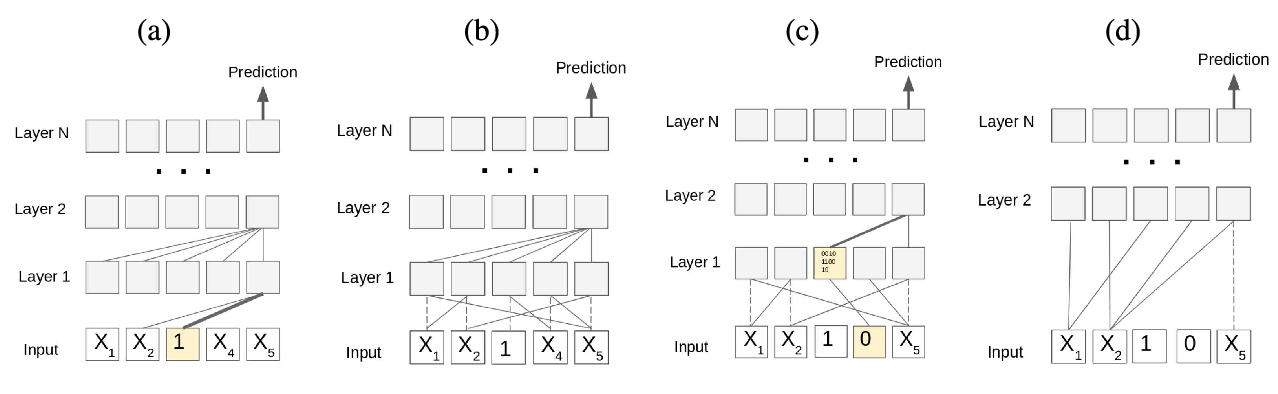

Self-attention modules are essential building blocks of Transformer-based language models and are therefore the subject of many studies aiming to discover which linguistic capabilities these models possess (Rogers et al., 2020). Such studies commonly analyze how attention weights correlate with specific linguistic phenomena. In this paper, we show that attention weights are only one of two factors determining the output of self-attention modules, and we propose to incorporate the other factor, namely the norm of the transformed input vectors, into the analysis as well. Our analysis of self-attention modules in BERT (Devlin et al., 2019) shows that the proposed method produces insights that agree better with linguistic intuitions than an analysis based on attention weights alone. Our analysis further reveals that BERT controls the amount of contribution from frequent informative and less informative tokens not by attention weights but via vector norms.
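
The two factors named in the abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function name `weighted_norms`, the toy weights, and the toy vectors are hypothetical, and `values` simply stands in for whatever transformed input vectors the attention module produces. The sketch only shows that a token with a high attention weight can still contribute little when its transformed vector has a small norm.

```python
import numpy as np

def weighted_norms(attn, values):
    """Return ||alpha_ij * v_j|| for every query i and key j.

    attn:   (n, n) attention weights; each row sums to 1.
    values: (n, d) transformed input vectors (hypothetical stand-in).
    """
    norms = np.linalg.norm(values, axis=1)  # shape (n,): one norm per token
    # Attention weights are non-negative, so ||a * v|| = a * ||v||.
    return attn * norms[None, :]            # shape (n, n)

# Toy data (made up): token 0 gets a high weight but has a tiny vector.
attn = np.array([[0.9, 0.1],
                 [0.5, 0.5]])
values = np.array([[0.1, 0.0],
                   [3.0, 4.0]])

contrib = weighted_norms(attn, values)
print(contrib)
```

In this toy case the first query puts most of its attention weight on token 0, yet the weighted norm is larger for token 1, so ranking tokens by weight alone and by weighted norm gives different answers.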

Similar Papers

Learning to Deceive with Attention-Based Explanations

Danish Pruthi, Mansi Gupta, Bhuwan Dhingra, Graham Neubig, Zachary C. Lipton,