Automatic Detection of Generated Text is Easiest when Humans are Fooled

Daphne Ippolito, Daniel Duckworth, Chris Callison-Burch, Douglas Eck

Generation Long Paper

Session 3A: Jul 6

(12:00-13:00 GMT)

Session 5A: Jul 6

(20:00-21:00 GMT)

Abstract:

Recent advancements in neural language modelling make it possible to rapidly generate vast amounts of human-sounding text. The capabilities of humans and automatic discriminators to detect machine-generated text have been a large source of research interest, but humans and machines rely on different cues to make their decisions. Here, we perform careful benchmarking and analysis of three popular sampling-based decoding strategies---top-_k_, nucleus sampling, and untruncated random sampling---and show that improvements in decoding methods have primarily optimized for fooling humans. This comes at the expense of introducing statistical abnormalities that make detection easy for automatic systems. We also show that though both human and automatic detector performance improve with longer excerpt length, even multi-sentence excerpts can fool expert human raters over 30% of the time. Our findings reveal the importance of using both human and automatic detectors to assess the humanness of text generation systems.

You can open the

pre-recorded video

in a separate window.

NOTE: The SlidesLive video may display a random order of the authors.

The correct author list is shown at the top of this webpage.

Similar Papers

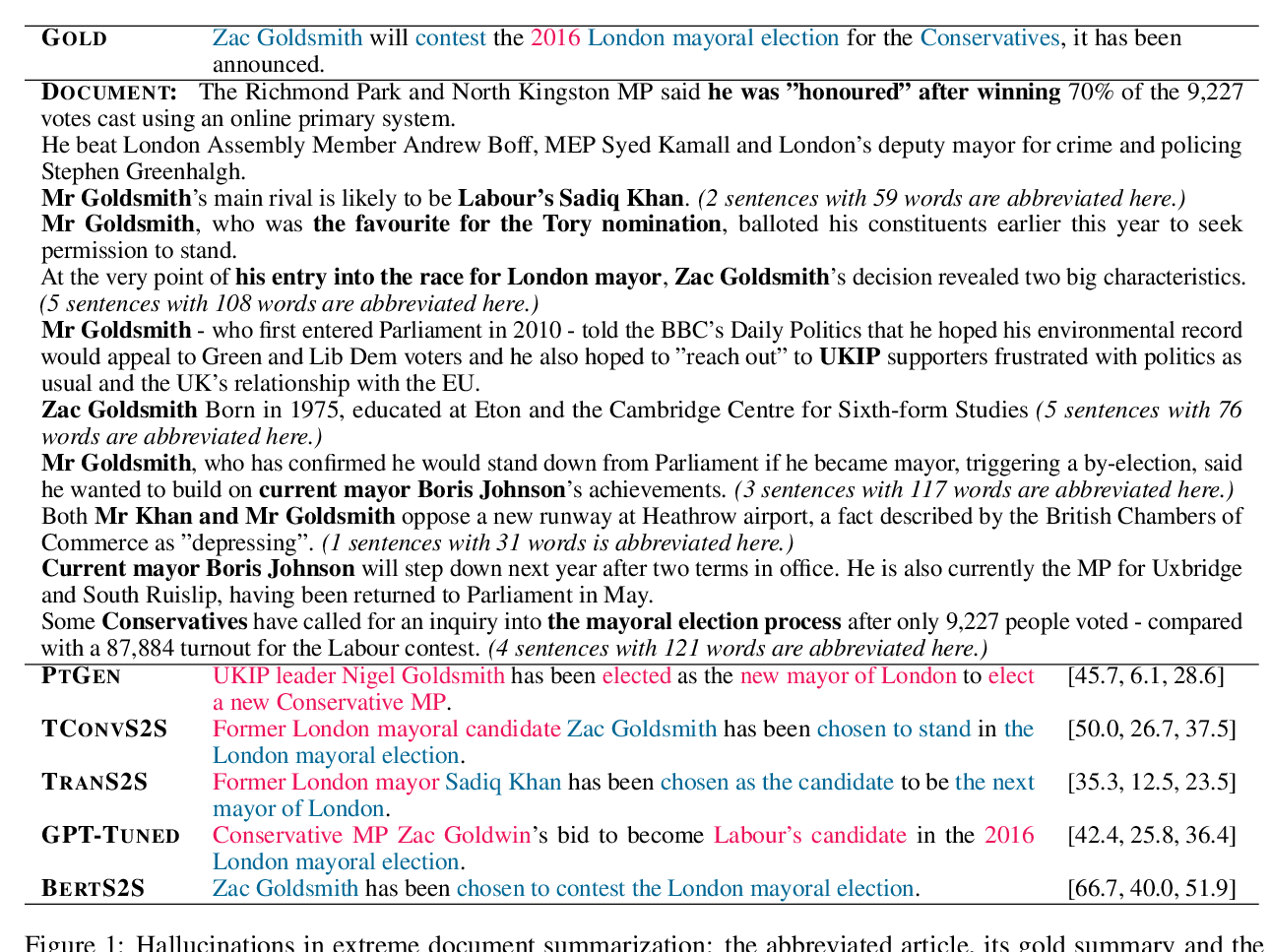

On Faithfulness and Factuality in Abstractive Summarization

Joshua Maynez, Shashi Narayan, Bernd Bohnet, Ryan McDonald,

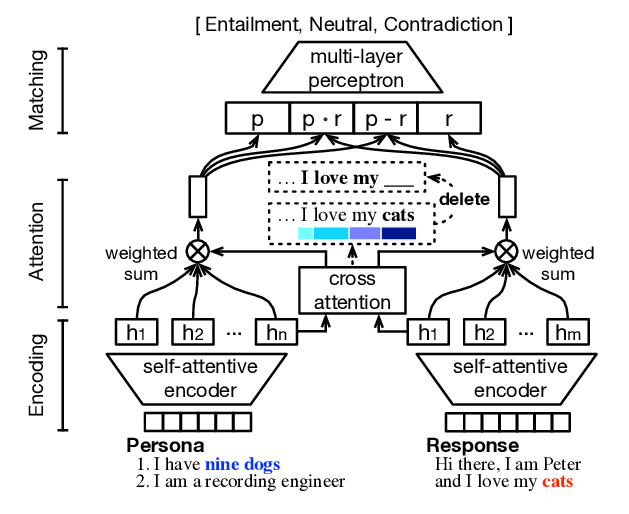

Generate, Delete and Rewrite: A Three-Stage Framework for Improving Persona Consistency of Dialogue Generation

Haoyu Song, Yan Wang, Wei-Nan Zhang, Xiaojiang Liu, Ting Liu,

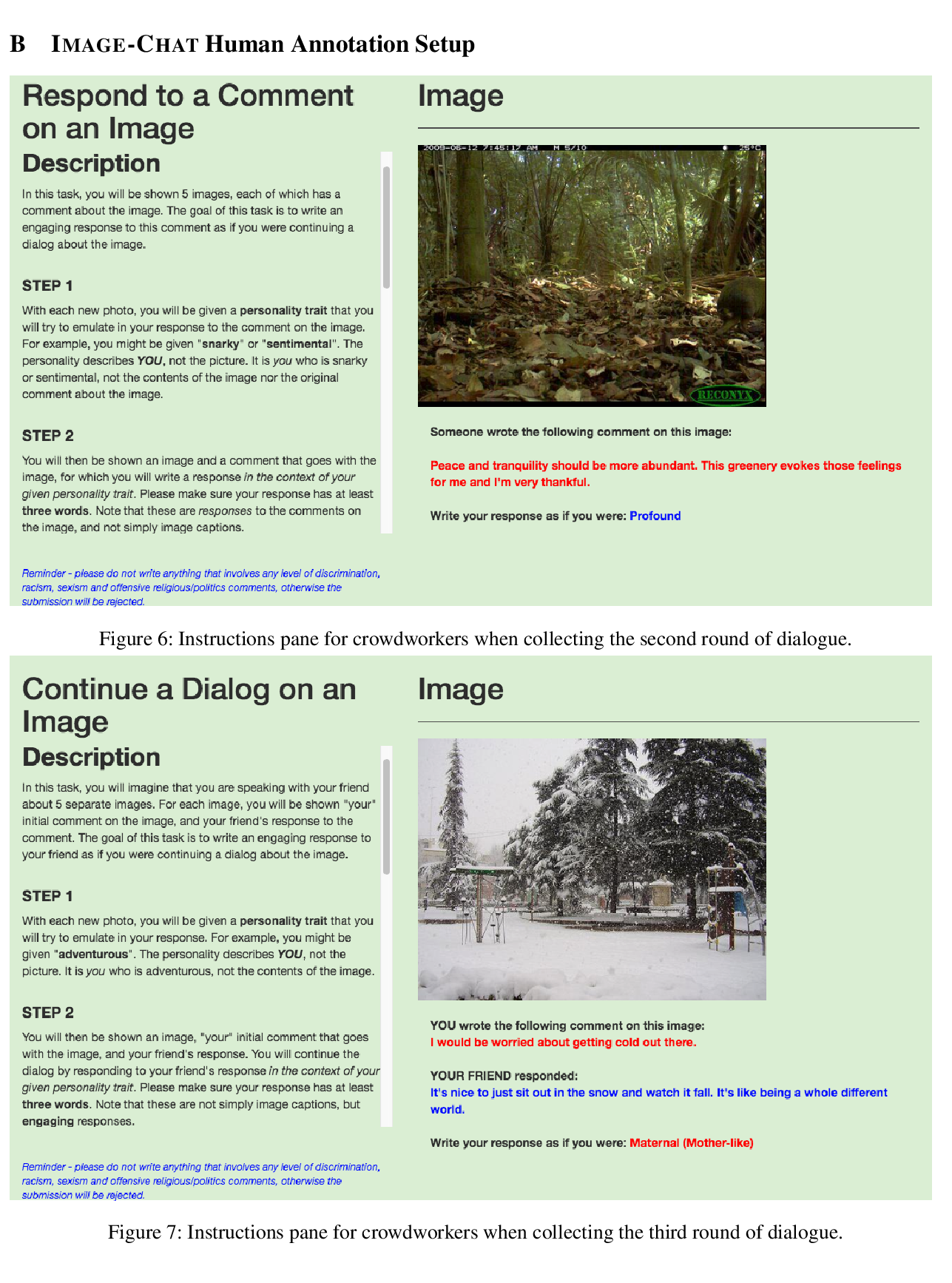

Image-Chat: Engaging Grounded Conversations

Kurt Shuster, Samuel Humeau, Antoine Bordes, Jason Weston,

Human Attention Maps for Text Classification: Do Humans and Neural Networks Focus on the Same Words?

Cansu Sen, Thomas Hartvigsen, Biao Yin, Xiangnan Kong, Elke Rundensteiner,