DeeBERT: Dynamic Early Exiting for Accelerating BERT Inference

Ji Xin, Raphael Tang, Jaejun Lee, Yaoliang Yu, Jimmy Lin

NLP Applications Short Paper

Session 4A: Jul 6 (17:00-18:00 GMT)

Session 5B: Jul 6 (21:00-22:00 GMT)

Abstract:

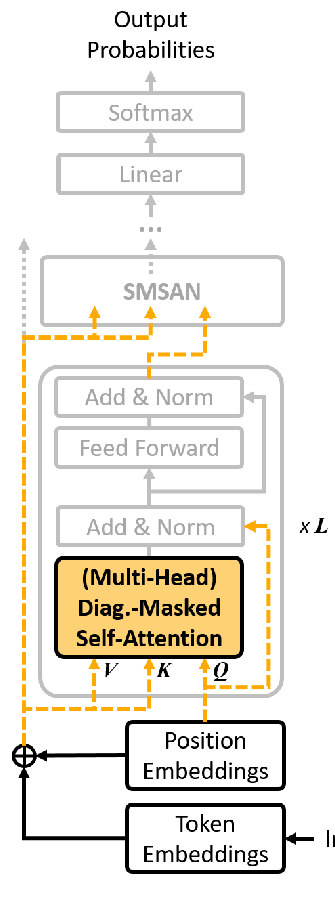

Large-scale pre-trained language models such as BERT have brought significant improvements to NLP applications. However, they are also notorious for being slow in inference, which makes them difficult to deploy in real-time applications. We propose a simple but effective method, DeeBERT, to accelerate BERT inference. Our approach allows samples to exit earlier without passing through the entire model. Experiments show that DeeBERT is able to save up to ~40% inference time with minimal degradation in model quality. Further analyses show different behaviors in the BERT transformer layers and also reveal their redundancy. Our work provides new ideas to efficiently apply deep transformer-based models to downstream tasks. Code is available at https://github.com/castorini/DeeBERT.
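The abstract does not spell out how a sample decides to exit. The sketch below illustrates one common way to realize the idea, assuming a small classifier is attached after every transformer layer and that a sample exits at the first layer where the entropy of that classifier's output distribution drops below a threshold. The names `early_exit_predict`, `layers`, `classifiers`, and `threshold` are hypothetical and chosen for illustration; this is not the API of the released code.

```python
import math
from typing import Callable, List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in nats) of a probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def early_exit_predict(
    hidden,
    layers: Sequence[Callable],
    classifiers: Sequence[Callable],
    threshold: float,
) -> Tuple[int, int]:
    """Run layers in order and stop at the first confident classifier.

    Assumption: classifiers[i] maps the output of layers[i] to class logits.
    Returns (predicted_class, number_of_layers_used). If no classifier is
    confident, the prediction of the final layer is used.
    """
    assert layers and len(layers) == len(classifiers)
    probs: List[float] = []
    depth = 0
    for depth, (layer, classifier) in enumerate(zip(layers, classifiers), start=1):
        hidden = layer(hidden)
        probs = softmax(classifier(hidden))
        # Low entropy means a peaked distribution, i.e. a confident prediction.
        if entropy(probs) < threshold:
            break
    predicted = max(range(len(probs)), key=probs.__getitem__)
    return predicted, depth
```

Under these assumptions, a larger `threshold` lets more samples exit at shallow layers (faster, possibly less accurate), while a `threshold` of zero forces every sample through all layers, which is one way to picture the trade-off between inference time and model quality that the abstract describes.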


Similar Papers

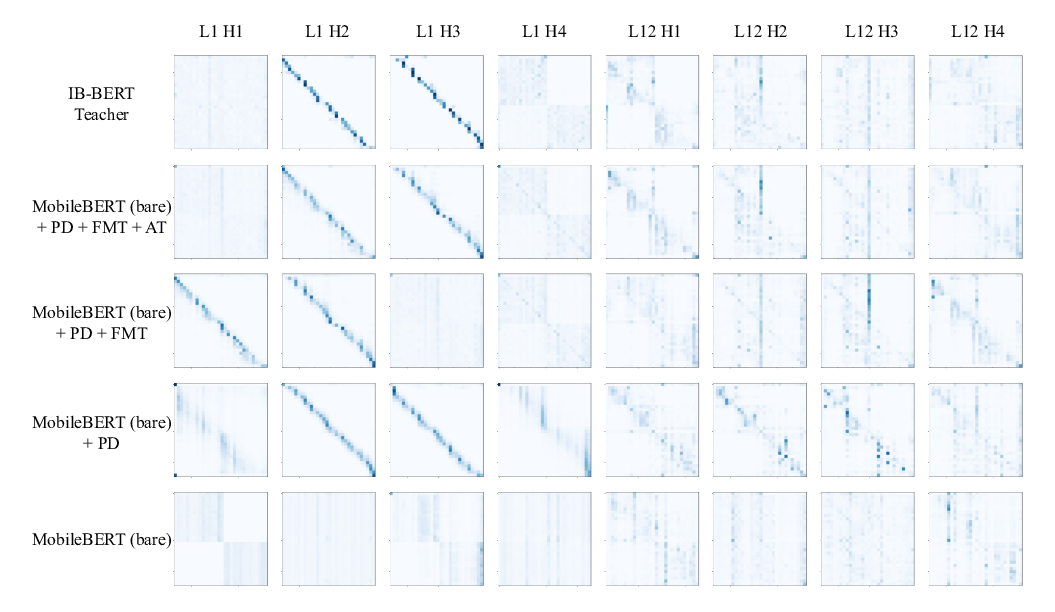

MobileBERT: a Compact Task-Agnostic BERT for Resource-Limited Devices

Zhiqing Sun, Hongkun Yu, Xiaodan Song, Renjie Liu, Yiming Yang, Denny Zhou

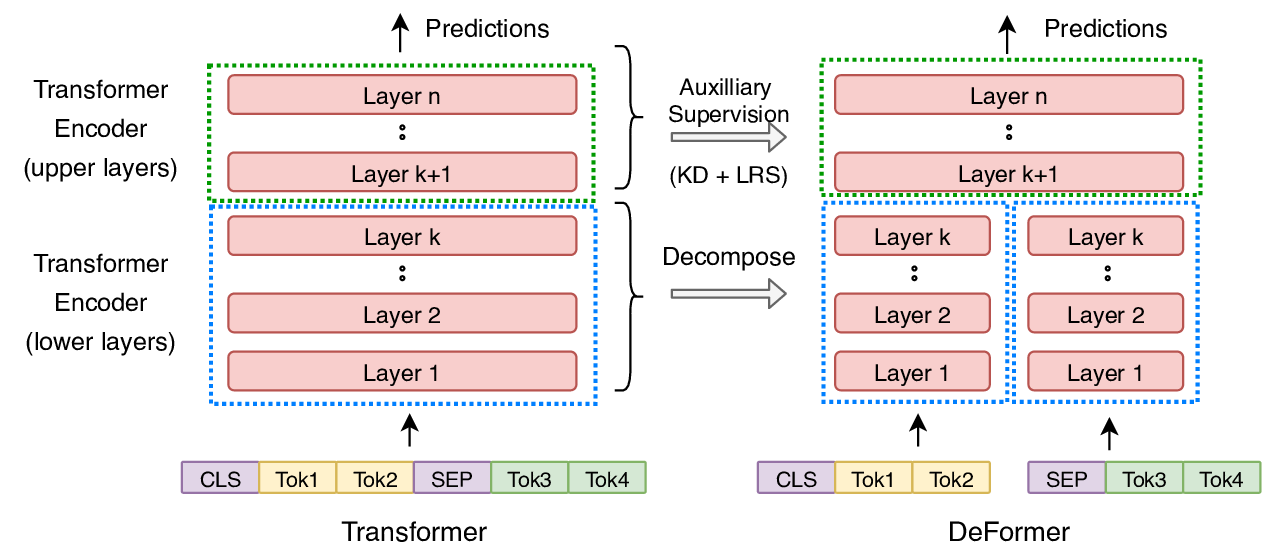

DeFormer: Decomposing Pre-trained Transformers for Faster Question Answering

Qingqing Cao, Harsh Trivedi, Aruna Balasubramanian, Niranjan Balasubramanian

Fast and Accurate Deep Bidirectional Language Representations for Unsupervised Learning

Joongbo Shin, Yoonhyung Lee, Seunghyun Yoon, Kyomin Jung

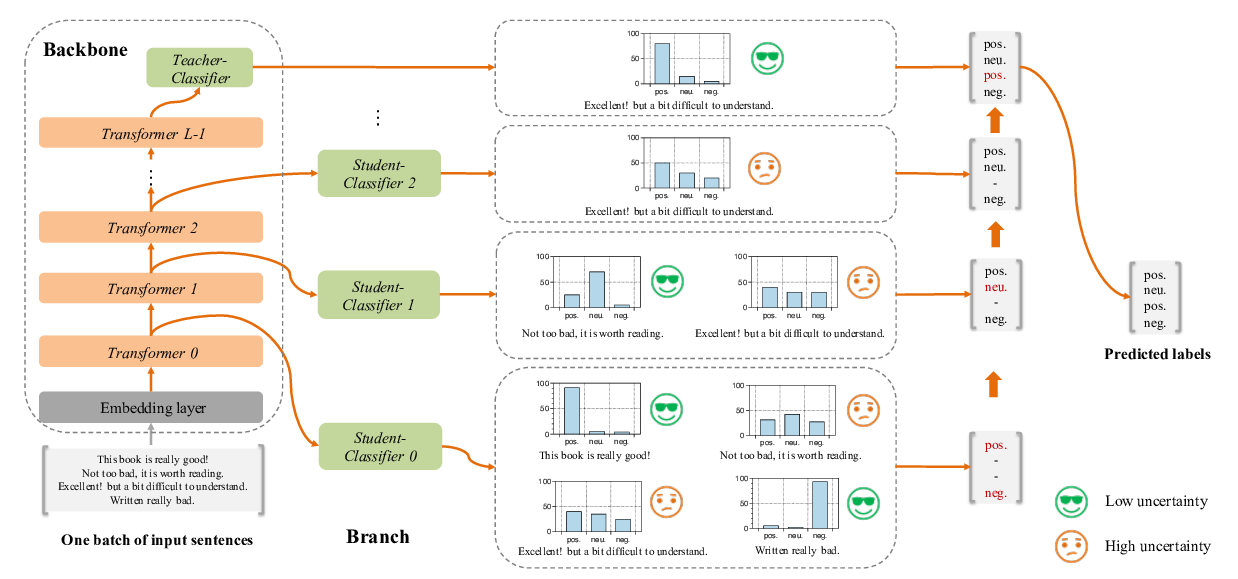

FastBERT: a Self-distilling BERT with Adaptive Inference Time

Weijie Liu, Peng Zhou, Zhiruo Wang, Zhe Zhao, Haotang Deng, Qi Ju