Learning an Unreferenced Metric for Online Dialogue Evaluation

Koustuv Sinha, Prasanna Parthasarathi, Jasmine Wang, Ryan Lowe, William L. Hamilton, Joelle Pineau

Dialogue and Interactive Systems Short Paper

Session 4B: Jul 6 (18:00-19:00 GMT)

Session 5A: Jul 6 (20:00-21:00 GMT)

Abstract:

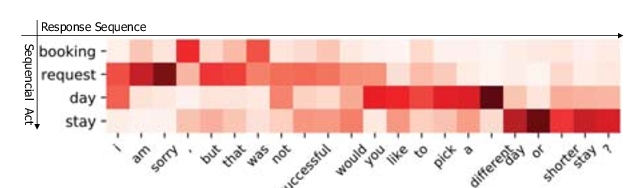

Evaluating the quality of a dialogue interaction between two agents is a difficult task, especially in open-domain chit-chat style dialogue. There have been recent efforts to develop automatic dialogue evaluation metrics, but most of them do not generalize to unseen datasets and/or need a human-generated reference response during inference, which makes them infeasible for online evaluation. Here, we propose an unreferenced automated evaluation metric that uses large pre-trained language models to extract latent representations of utterances and leverages the temporal transitions that exist between them. We show that our model achieves higher correlation with human annotations in an online setting, while not requiring true responses for comparison during inference.
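The general shape of an unreferenced metric, as the abstract describes it, can be illustrated with a toy sketch. This is not the authors' model: the hashed bag-of-words `embed` function stands in for a large pre-trained language model, the fixed `decay` recurrence stands in for a learned model of temporal transitions between utterances, and all names (`embed`, `summarize_context`, `score`, `DIM`, `decay`) are hypothetical. The point it shows is that the score depends only on the dialogue context and the candidate response, with no reference response.

```python
import hashlib
import math
from typing import List

DIM = 64  # size of the toy utterance embedding (assumption, not from the paper)


def embed(utterance: str) -> List[float]:
    """Stand-in for a pre-trained language model encoder (assumption):
    a hashed bag of words, L2-normalised. Returns zeros for empty input."""
    vec = [0.0] * DIM
    for token in utterance.lower().split():
        idx = int(hashlib.md5(token.encode("utf-8")).hexdigest(), 16) % DIM
        vec[idx] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm > 0 else vec


def summarize_context(context: List[str], decay: float = 0.5) -> List[float]:
    """Fold utterance embeddings in turn order with a fixed recurrence,
    so the state reflects the sequence of turns. A trained model would
    learn this transition instead of using a constant decay."""
    state = [0.0] * DIM
    for utterance in context:
        e = embed(utterance)
        state = [decay * s + (1.0 - decay) * x for s, x in zip(state, e)]
    return state


def score(context: List[str], response: str) -> float:
    """Unreferenced score in (0, 1): uses only the context and the
    candidate response, never a human-written reference response."""
    state = summarize_context(context)
    candidate = embed(response)
    logit = sum(s * x for s, x in zip(state, candidate))
    return 1.0 / (1.0 + math.exp(-logit))


if __name__ == "__main__":
    dialogue = ["how is the weather today", "it looks like rain"]
    print(score(dialogue, "then i will bring an umbrella"))
```

Because no reference is needed, a function of this shape could be called on each new response as a conversation unfolds, which is what makes online evaluation feasible.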

You can open the pre-recorded video in a separate window.

NOTE: The SlidesLive video may display a random order of the authors. The correct author list is shown at the top of this webpage.

Similar Papers

Designing Precise and Robust Dialogue Response Evaluators

Tianyu Zhao, Divesh Lala, Tatsuya Kawahara

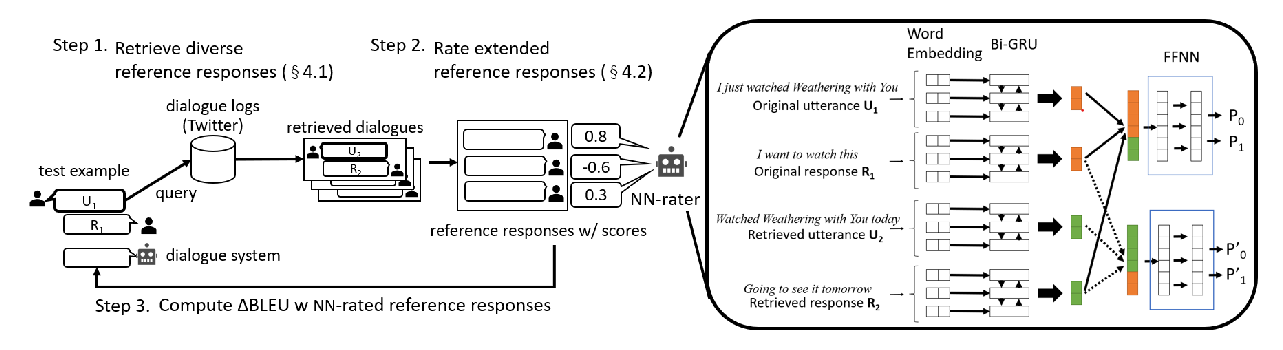

uBLEU: Uncertainty-Aware Automatic Evaluation Method for Open-Domain Dialogue Systems

Tsuta Yuma, Naoki Yoshinaga, Masashi Toyoda

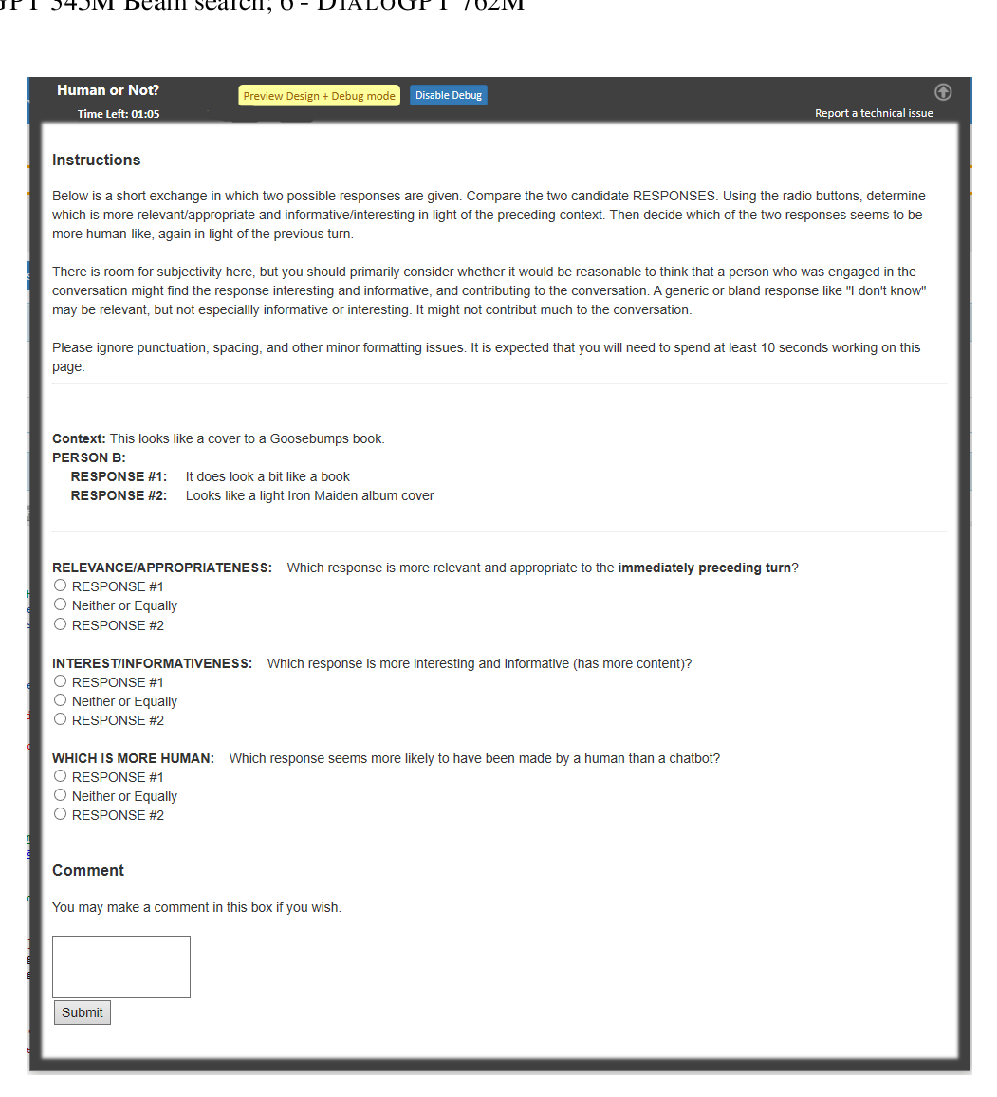

DIALOGPT: Large-Scale Generative Pre-training for Conversational Response Generation

Yizhe Zhang, Siqi Sun, Michel Galley, Yen-Chun Chen, Chris Brockett, Xiang Gao, Jianfeng Gao, Jingjing Liu, Bill Dolan

Multi-Domain Dialogue Acts and Response Co-Generation

Kai Wang, Junfeng Tian, Rui Wang, Xiaojun Quan, Jianxing Yu