Can You Put it All Together: Evaluating Conversational Agents' Ability to Blend Skills

Eric Michael Smith, Mary Williamson, Kurt Shuster, Jason Weston, Y-Lan Boureau

Dialogue and Interactive Systems Long Paper

Session 4A: Jul 6 (17:00-18:00 GMT)

Session 5B: Jul 6 (21:00-22:00 GMT)

Abstract:

Being engaging, knowledgeable, and empathetic are all desirable general qualities in a conversational agent. Previous work has introduced tasks and datasets that aim to help agents learn those qualities in isolation and gauge how well they can express them. But rather than being specialized in a single quality, a good open-domain conversational agent should be able to seamlessly blend them all into one cohesive conversational flow. In this work, we investigate several ways to combine models trained towards isolated capabilities, ranging from simple model aggregation schemes that require minimal additional training to various forms of multi-task training that encompass several skills at all training stages. We further propose a new dataset, BlendedSkillTalk, to analyze how these capabilities would mesh together in a natural conversation, and compare the performance of different architectures and training schemes. Our experiments show that multi-tasking over several tasks that focus on particular capabilities results in better blended conversation performance than models trained on a single skill, and that both unified and two-stage approaches perform well if they are constructed to avoid unwanted bias in skill selection or are fine-tuned on our new task.

Similar Papers

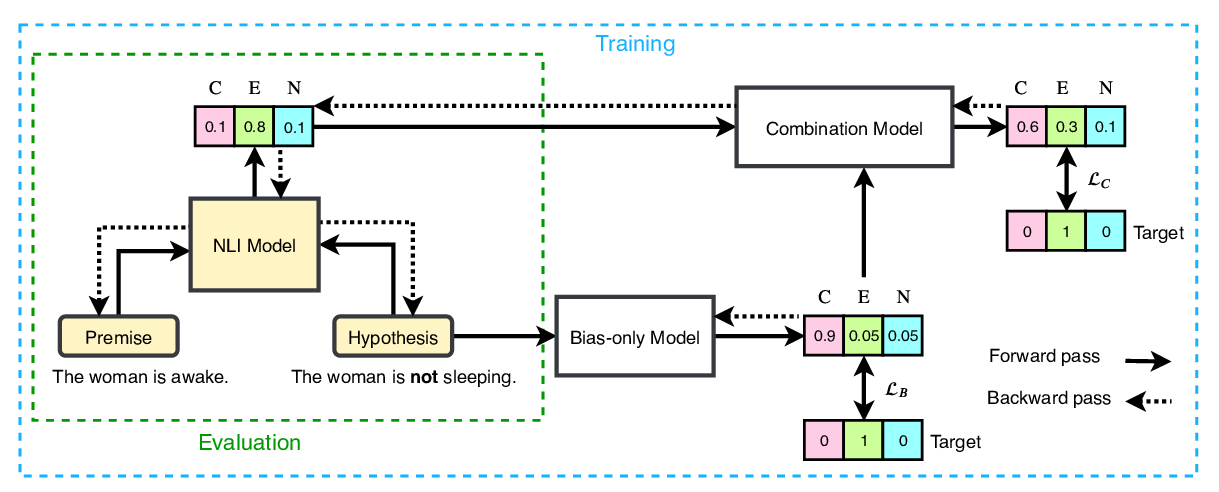

End-to-End Bias Mitigation by Modelling Biases in Corpora

Rabeeh Karimi Mahabadi, Yonatan Belinkov, James Henderson

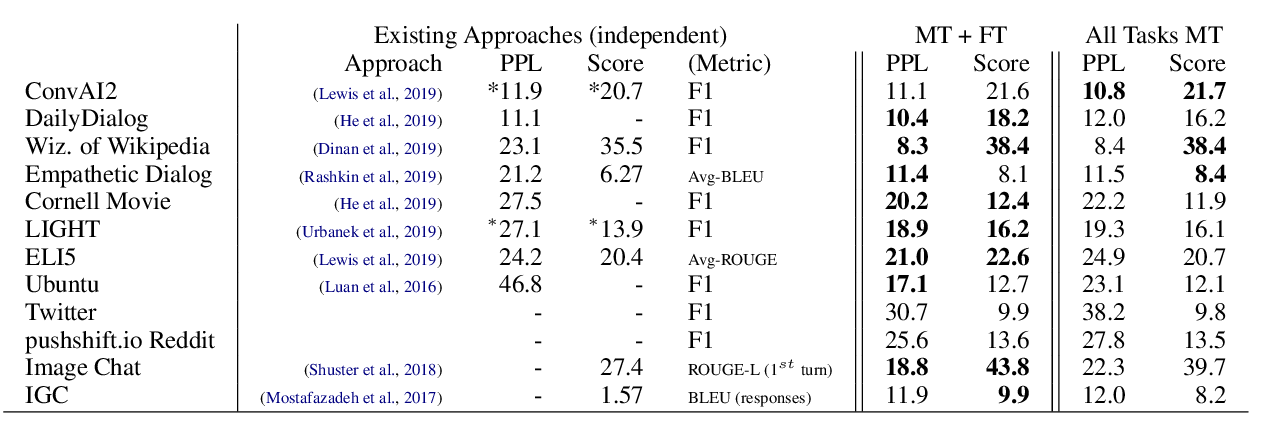

The Dialogue Dodecathlon: Open-Domain Knowledge and Image Grounded Conversational Agents

Kurt Shuster, Da Ju, Stephen Roller, Emily Dinan, Y-Lan Boureau, Jason Weston

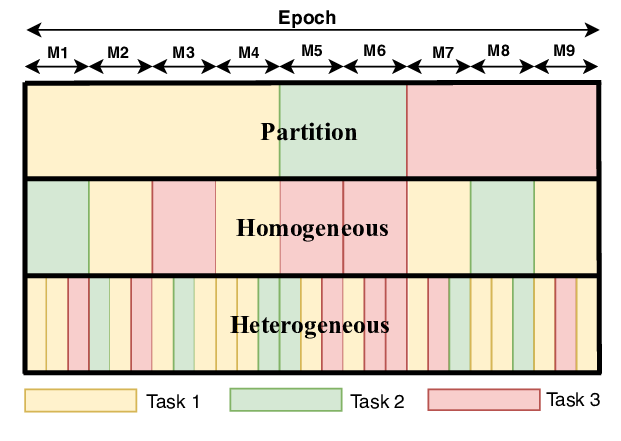

Dynamic Sampling Strategies for Multi-Task Reading Comprehension

Ananth Gottumukkala, Dheeru Dua, Sameer Singh, Matt Gardner

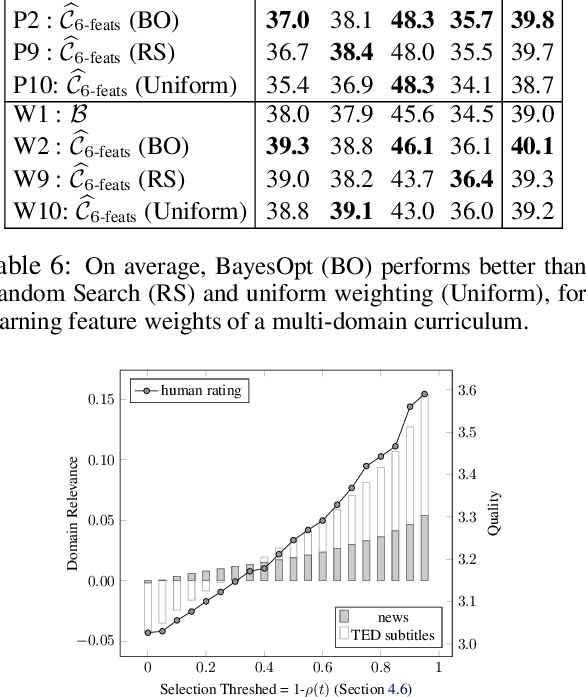

Learning a Multi-Domain Curriculum for Neural Machine Translation

Wei Wang, Ye Tian, Jiquan Ngiam, Yinfei Yang, Isaac Caswell, Zarana Parekh