Tchebycheff Procedure for Multi-task Text Classification

Yuren Mao, Shuang Yun, Weiwei Liu, Bo Du

Machine Learning for NLP Long Paper

Session 7B: Jul 7 (09:00-10:00 GMT)

Session 8B: Jul 7 (13:00-14:00 GMT)

Abstract:

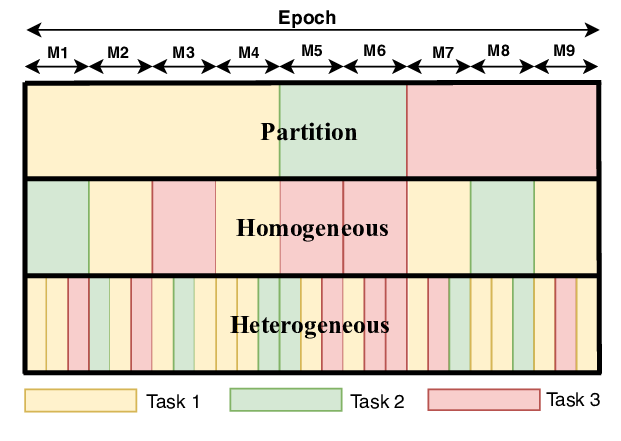

Multi-task learning methods have achieved great progress in text classification. However, existing methods assume that multi-task text classification problems are convex multiobjective optimization problems, which is unrealistic in real-world applications. To address this issue, this paper presents a novel Tchebycheff procedure that optimizes multi-task classification problems without the convexity assumption. Extensive experiments support our theoretical analysis and validate the superiority of our proposals.


Similar Papers

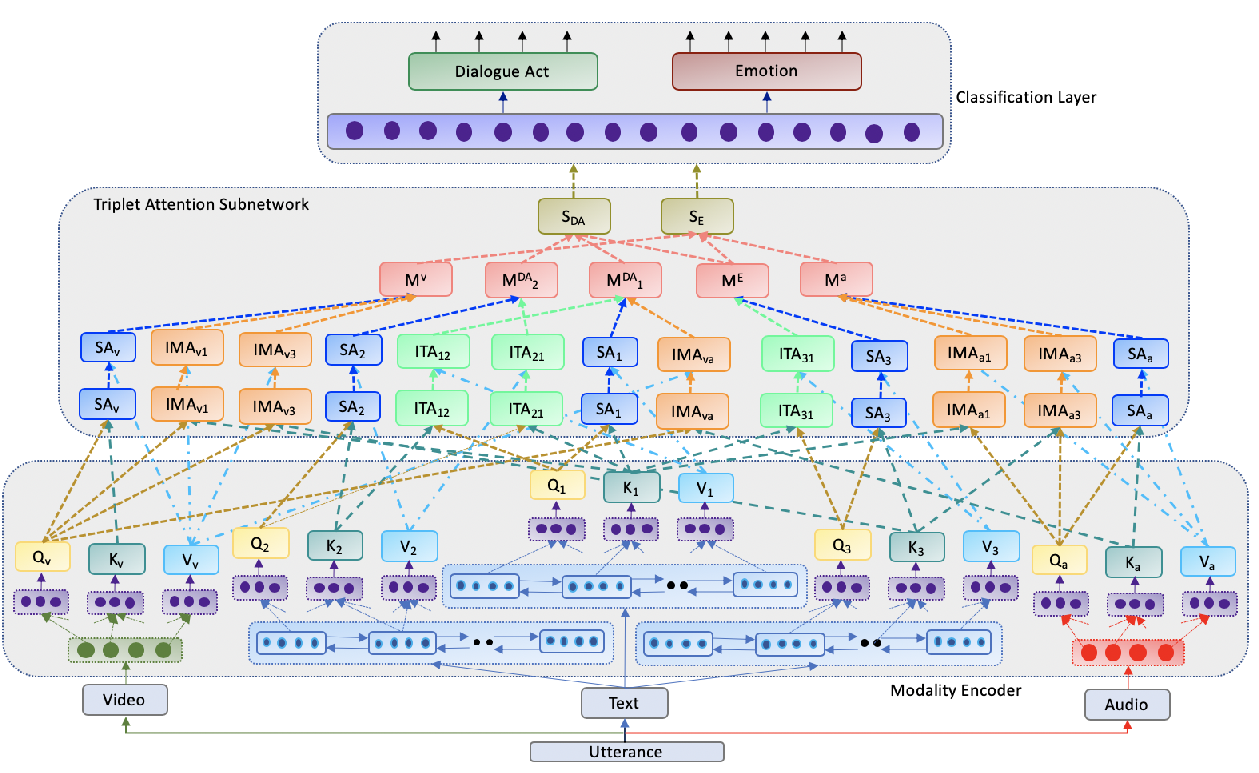

Towards Emotion-aided Multi-modal Dialogue Act Classification

Tulika Saha, Aditya Patra, Sriparna Saha, Pushpak Bhattacharyya

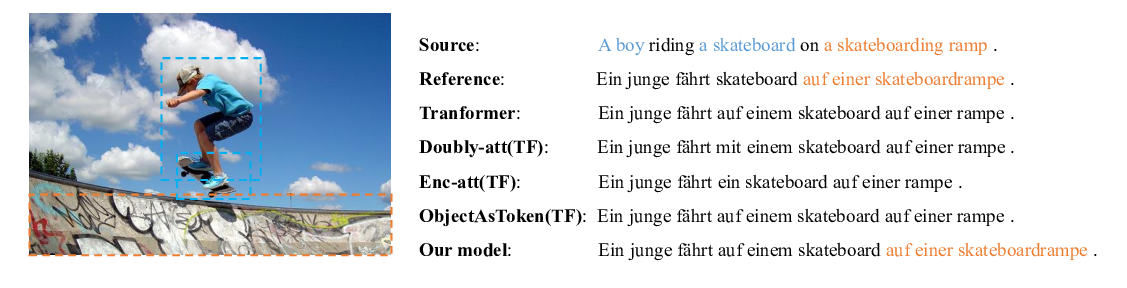

A Novel Graph-based Multi-modal Fusion Encoder for Neural Machine Translation

Yongjing Yin, Fandong Meng, Jinsong Su, Chulun Zhou, Zhengyuan Yang, Jie Zhou, Jiebo Luo

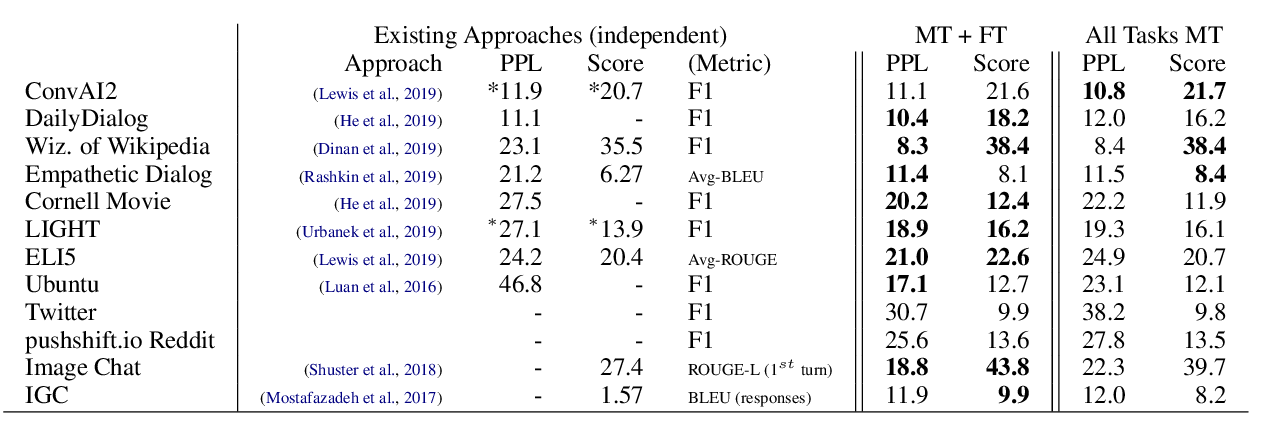

The Dialogue Dodecathlon: Open-Domain Knowledge and Image Grounded Conversational Agents

Kurt Shuster, Da Ju, Stephen Roller, Emily Dinan, Y-Lan Boureau, Jason Weston

Dynamic Sampling Strategies for Multi-Task Reading Comprehension

Ananth Gottumukkala, Dheeru Dua, Sameer Singh, Matt Gardner