Learning to Segment Actions from Observation and Narration

Daniel Fried, Jean-Baptiste Alayrac, Phil Blunsom, Chris Dyer, Stephen Clark, Aida Nematzadeh

Language Grounding to Vision, Robotics and Beyond (Long Paper)

Session 4B: Jul 6 (18:00-19:00 GMT)

Session 5B: Jul 6 (21:00-22:00 GMT)

Abstract:

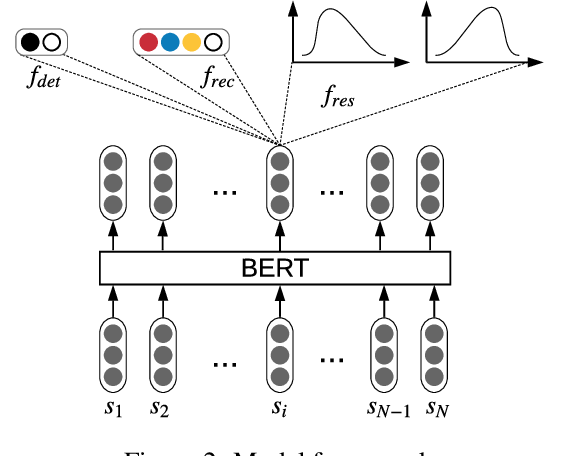

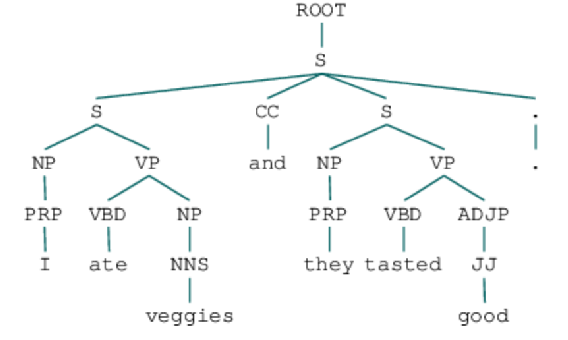

We apply a generative segmental model of task structure, guided by narration, to action segmentation in video. We focus on unsupervised and weakly-supervised settings where no action labels are known during training. Despite its simplicity, our model performs competitively with previous work on a dataset of naturalistic instructional videos. Our model allows us to vary the sources of supervision used in training, and we find that both task structure and narrative language provide large benefits in segmentation quality.

Similar Papers

ZPR2: Joint Zero Pronoun Recovery and Resolution using Multi-Task Learning and BERT

Linfeng Song, Kun Xu, Yue Zhang, Jianshu Chen, Dong Yu

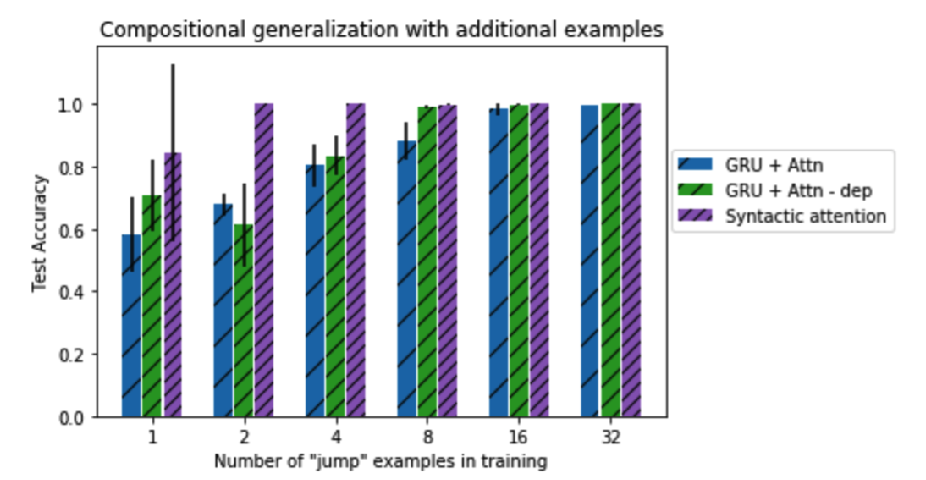

Compositional Generalization by Factorizing Alignment and Translation

Jacob Russin, Jason Jo, Randall O'Reilly, Yoshua Bengio

Iterative Edit-Based Unsupervised Sentence Simplification

Dhruv Kumar, Lili Mou, Lukasz Golab, Olga Vechtomova

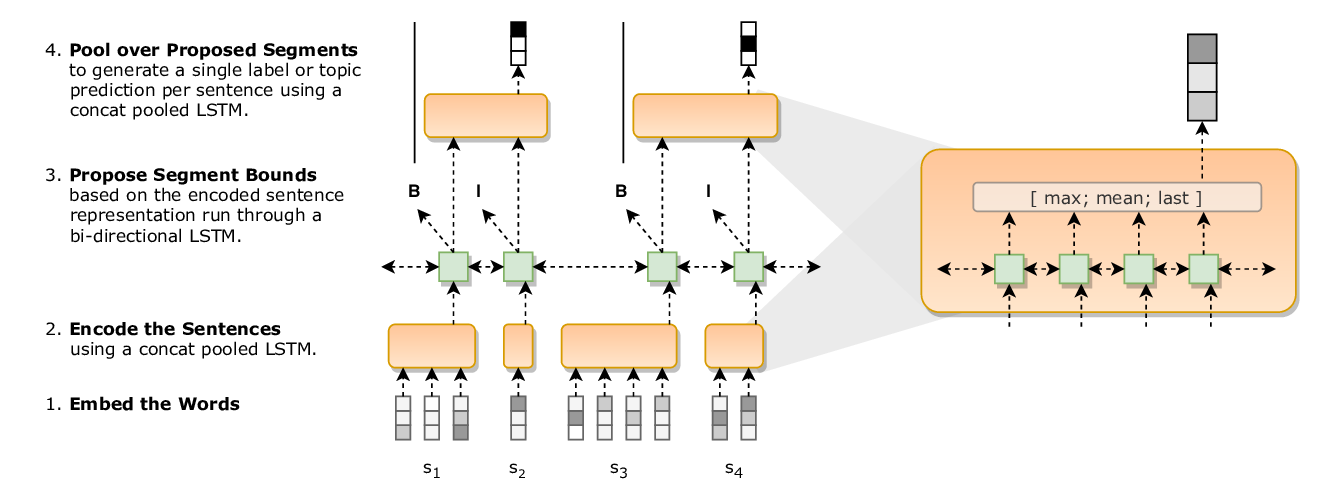

A Joint Model for Document Segmentation and Segment Labeling

Joe Barrow, Rajiv Jain, Vlad Morariu, Varun Manjunatha, Douglas Oard, Philip Resnik