Orthogonal Relation Transforms with Graph Context Modeling for Knowledge Graph Embedding

Yun Tang, Jing Huang, Guangtao Wang, Xiaodong He, Bowen Zhou

Machine Learning for NLP Long Paper

Session 4B: Jul 6 (18:00-19:00 GMT)

Session 5B: Jul 6 (21:00-22:00 GMT)

Abstract:

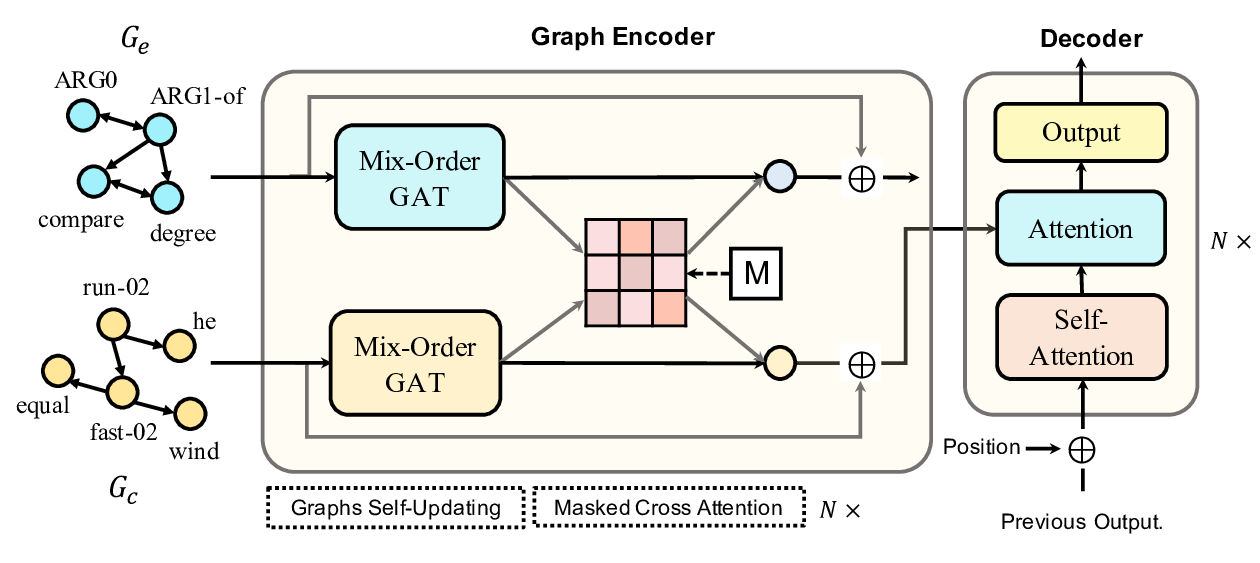

Distance-based knowledge graph embeddings have shown substantial improvement on the knowledge graph link prediction task, from TransE to the latest state-of-the-art RotatE. However, complex relations such as N-to-1, 1-to-N and N-to-N remain challenging to predict. In this work, we propose a novel distance-based approach for knowledge graph link prediction. First, we extend RotatE from the 2D complex domain to a high-dimensional space, using orthogonal transforms to model relations. The orthogonal transform embedding for relations retains the capability to model symmetric/anti-symmetric, inverse and compositional relations while achieving greater modeling capacity. Second, the graph context is integrated directly into the distance scoring functions. Specifically, graph context is explicitly modeled via two directed context representations. Each node embedding in the knowledge graph is augmented with two context representations, which are computed from the neighboring outgoing and incoming nodes/edges respectively. The proposed approach improves prediction accuracy on the difficult N-to-1, 1-to-N and N-to-N cases. Our experimental results show that it achieves state-of-the-art results on two common benchmarks, FB15k-237 and WN18RR, especially on FB15k-237, which has many high in-degree nodes.
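As a rough illustration of the first idea in the abstract, the sketch below models a relation as an orthogonal matrix and scores a triple by the distance between the transformed head and the tail. It is not the authors' implementation: the use of Gram-Schmidt to obtain an orthogonal matrix, the single unblocked matrix, the dimension of 4, and the helper names (`gram_schmidt`, `apply`, `score`) are all assumptions made for this example, and the graph context terms are omitted entirely.

```python
import math
import random


def gram_schmidt(rows):
    """Orthonormalize linearly independent row vectors (modified Gram-Schmidt)."""
    basis = []
    for v in rows:
        w = list(v)
        for b in basis:
            proj = sum(x * y for x, y in zip(w, b))
            w = [x - proj * y for x, y in zip(w, b)]
        norm = math.sqrt(sum(x * x for x in w))
        basis.append([x / norm for x in w])
    return basis


def apply(matrix, vec):
    """Matrix-vector product for a list-of-rows matrix."""
    return [sum(m * x for m, x in zip(row, vec)) for row in matrix]


def transpose(matrix):
    return [list(col) for col in zip(*matrix)]


def norm(vec):
    return math.sqrt(sum(x * x for x in vec))


def score(head, relation, tail):
    """Distance-based score (assumed form): smaller means more plausible."""
    transformed = apply(relation, head)
    return norm([a - b for a, b in zip(transformed, tail)])


random.seed(0)
dim = 4  # hypothetical embedding size, chosen only to keep the example small

# A square matrix with orthonormal rows is orthogonal.
raw = [[random.gauss(0, 1) for _ in range(dim)] for _ in range(dim)]
relation = gram_schmidt(raw)

head = [random.gauss(0, 1) for _ in range(dim)]
tail = apply(relation, head)  # a tail that matches the relation exactly

# The inverse relation is the transpose of the orthogonal matrix.
inverse_relation = transpose(relation)

forward_score = score(head, relation, tail)
inverse_score = score(tail, inverse_relation, head)
```

In this sketch the transform preserves vector norms, and the transpose maps the tail back to the head, which is one way to see how orthogonal transforms can represent inverse relations; composing two such matrices yields another orthogonal matrix, matching the compositional property the abstract mentions.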


Similar Papers

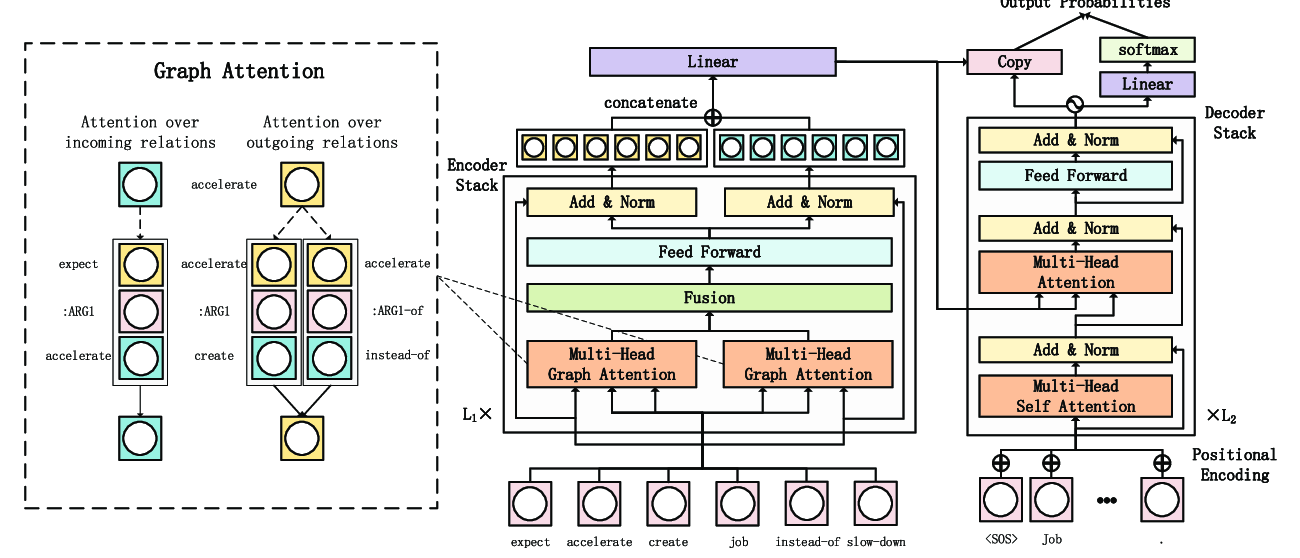

Heterogeneous Graph Transformer for Graph-to-Sequence Learning

Shaowei Yao, Tianming Wang, Xiaojun Wan

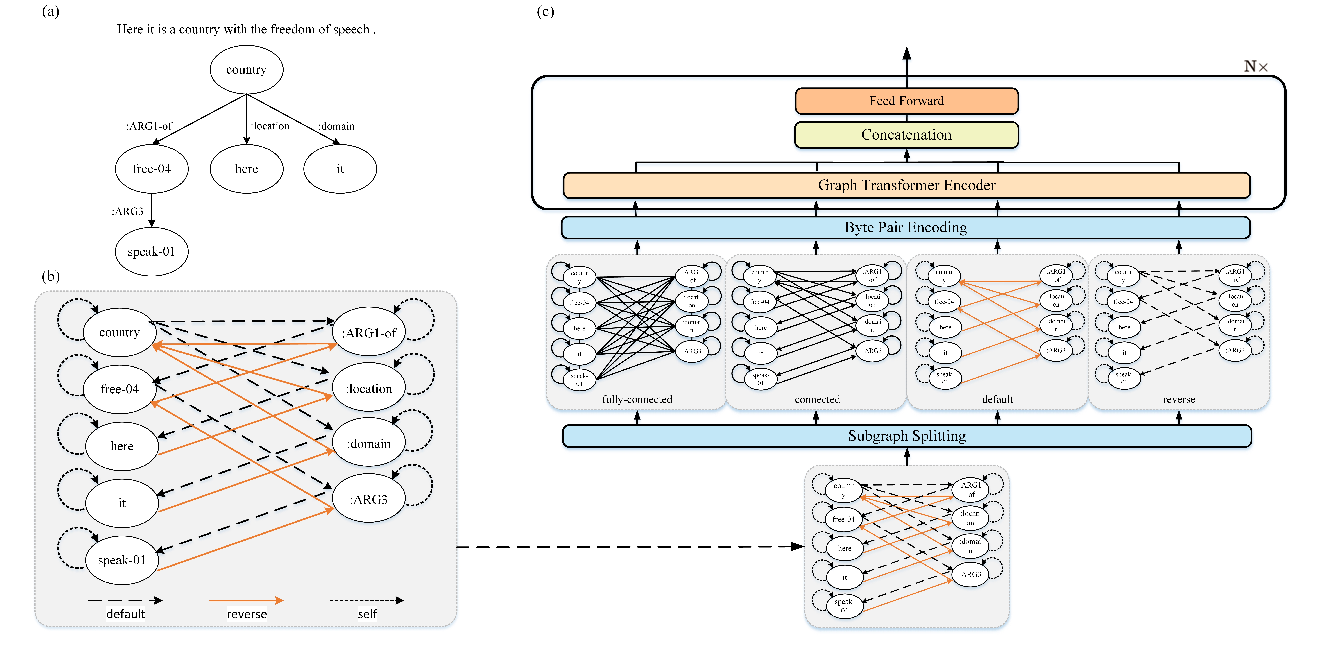

Line Graph Enhanced AMR-to-Text Generation with Mix-Order Graph Attention Networks

Yanbin Zhao, Lu Chen, Zhi Chen, Ruisheng Cao, Su Zhu, Kai Yu

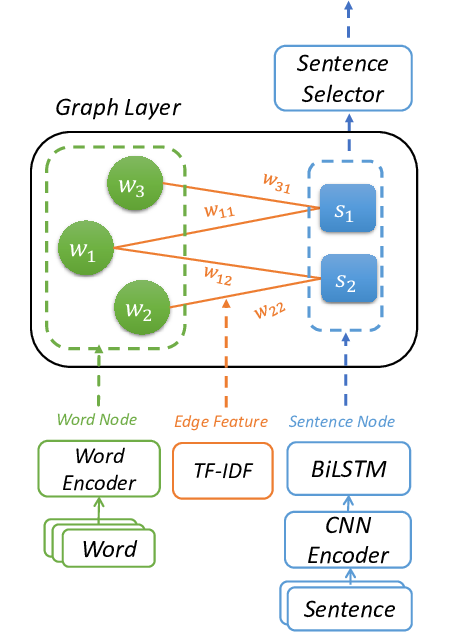

Heterogeneous Graph Neural Networks for Extractive Document Summarization

Danqing Wang, Pengfei Liu, Yining Zheng, Xipeng Qiu, Xuanjing Huang